The gbox graphics library has not been maintained for more than a year, and I have had no time for it recently.

My focus this year is to get xmake into complete shape: at least the architecture and the major features (package dependency management) should be fully implemented. After that, it will only need occasional maintenance and plugin extensions.

I will keep making small updates to tbox. After slightly restructuring some of its modules in the first half of next year, I will start to focus on redeveloping gbox. This is the project I have always wanted to do, and it is also my favorite.

So I would rather develop it slowly and do it as well as I possibly can.

Back to the point: although gbox is still in early development and cannot be used in real projects, some of its algorithms are still worth studying and learning from.

I have nothing else to share these two days. If you are interested, you can read the source code directly: monotone.c

After all, it took me a whole year to work out and implement this algorithm.

Why did it take so long? Maybe I was just too slow, heh. Of course, work got in the way too.

Let me briefly describe the background to researching and implementing this complex polygon rasterization algorithm:

gbox currently has two rendering devices. One renders purely in software, and its core is the scanline polygon filling algorithm, which is common, mature, and efficient. The other is based on OpenGL ES (to accelerate rendering with the GPU), and there I ran into a problem when rendering complex polygons: OpenGL does not support filling complex polygons directly.

I tried many approaches and searched on Google. I found that it can be done with the OpenGL stencil buffer, so I started writing it.

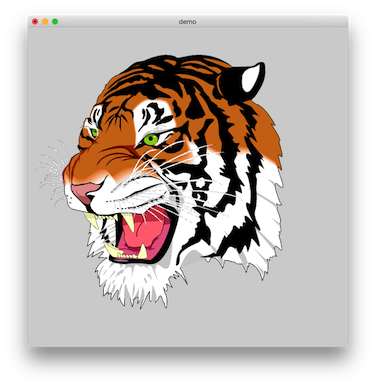

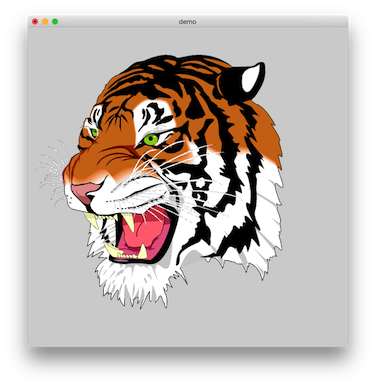

Partway through, the overall effect was already working. I thought I was done, but then I hit a bottleneck that was hard to get past: it was too slow. Rendered this way, a tiger head reached a frame rate of only 15 fps.

That is slower than my pure software implementation. I then thought about why it was so slow. One reason is that the stencil approach itself is really slow.

The second reason is that I wanted a general rendering interface that supports various filling rules and clipping rules. This complexity also made the stencil-based approach harder to optimize.

After half a year of struggling, I finally decided to restructure gbox as a whole, drop the stencil approach entirely, and adopt another method:

First, preprocess the complex polygon in the upper layer according to the filling rule and clipping. The core of this step is to triangulate and partition the complex polygon and merge the result into convex polygons. Then send those to OpenGL for fast rendering.
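Why merge into convex polygons? A convex polygon can be drawn as a single triangle fan from its first vertex, which is what the `GB_GL_TRIANGLE_FAN` call in the render code at the end of this post relies on. The following is only a minimal sketch of a convexity test, not the code in gbox; `point_t`, `cross` and `polygon_is_convex` are hypothetical names, and it assumes a simple (non-self-intersecting) contour.

```c
#include <assert.h>
#include <stddef.h>

/* hypothetical 2D point type for this sketch (not gbox's gb_point_t) */
typedef struct { double x, y; } point_t;

/* z component of the cross product (b - a) x (c - b) */
static double cross(point_t a, point_t b, point_t c)
{
    return (b.x - a.x) * (c.y - b.y) - (b.y - a.y) * (c.x - b.x);
}

/* Returns 1 if every turn along the closed contour has the same sign
 * (collinear points are allowed), 0 otherwise.
 * Assumption: the contour does not intersect itself.
 */
static int polygon_is_convex(point_t const* pts, size_t n)
{
    assert(pts && n >= 3);

    int sign = 0;
    for (size_t i = 0; i < n; i++) {
        double z = cross(pts[i], pts[(i + 1) % n], pts[(i + 2) % n]);
        if (z == 0) continue;          /* collinear, no turn here */

        int s = z > 0 ? 1 : -1;
        if (sign == 0) sign = s;       /* first real turn fixes the orientation */
        else if (s != sign) return 0;  /* a turn in the other direction: concave */
    }
    return 1;
}
```

A square passes this test, while an L-shaped contour fails it and would have to be partitioned first.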

So, how can polygons be partitioned efficiently while supporting the various filling rules?

I kept searching on Google and finally found that the algorithm in the libtess2 code does all of this, but I could not use its code directly. One reason is that it would be inconvenient to maintain. The other is that its implementation is not very efficient in many places, and I wanted something more efficient and more stable.

So I first had to understand its implementation logic thoroughly, and then improve and optimize the implementation.

Although there is not much code in it, it took me another half year just to understand it. Then I spent half a year more writing, and finally it was completely solved.

The final result is good. At least on my Mac Pro, I can render the tiger head with OpenGL at a frame rate of 60 fps.

Of course, there must still be a lot of problems in it. I have been busy recently and really have no time to fix them, so I can only put them aside and optimize later.

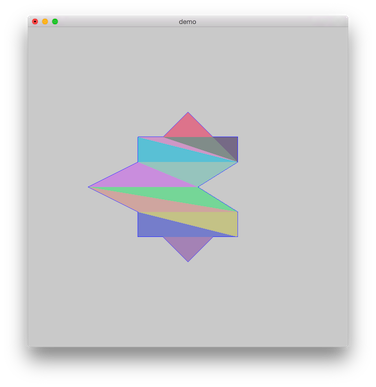

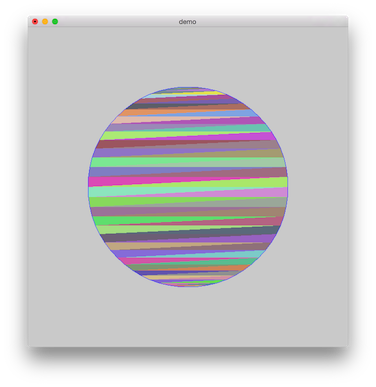

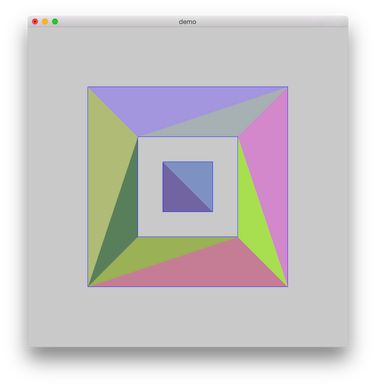

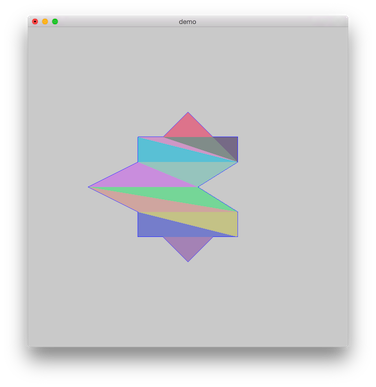

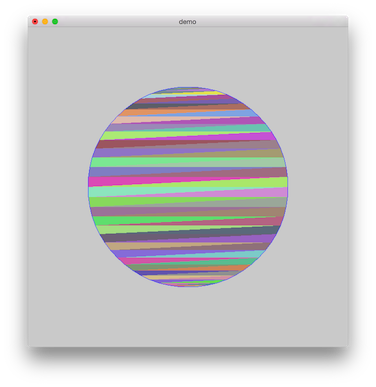

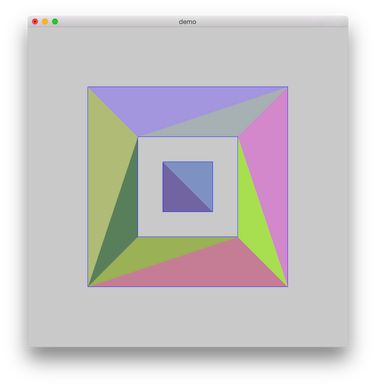

The images above show the triangulation results first, followed by a tiger head.

Next, I will briefly describe the partition algorithm:

Some differences and improvements in the gbox implementation compared with the libtess2 algorithm:

- The overall sweep direction is changed from vertical to horizontal, which better matches the coordinate logic of image scanning and is more convenient to handle in code

- The 3D vertex projection step is removed, because we only deal with 2D polygons, which makes it faster than libtess2

- More intersection cases are handled, and more places where intersection calculations introduce errors are optimized, so the algorithm is more stable and more accurate

- It supports switching between floating point and fixed point, so efficiency and precision can be traded off

- It uses its own algorithm for comparing active edges, with higher accuracy and better stability

- The algorithm for merging the triangulated mesh into convex polygons is optimized and more efficient

- Traversing each region skips the unnecessary vertex counting pass, so it is much faster
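The floating point and fixed point switch mentioned in the list above could look roughly like the following. This is a minimal sketch under my own assumptions, not the types gbox actually uses: the macro `SKETCH_USE_FIXED`, the type `real_t`, the helper names, and the 16.16 format are all hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical switch: 1 selects 16.16 fixed point, 0 selects float. */
#define SKETCH_USE_FIXED 1

#if SKETCH_USE_FIXED

/* 16.16 fixed point: the low 16 bits hold the fraction */
typedef int32_t real_t;

static real_t real_from_int(int v) { return (real_t)(v * 65536); }
static int    real_to_int(real_t v) { return (int)(v / 65536); } /* truncates toward zero */

/* widen to 64 bits so the intermediate product does not overflow */
static real_t real_mul(real_t a, real_t b)
{
    return (real_t)(((int64_t)a * b) / 65536);
}

static real_t real_div(real_t a, real_t b)
{
    assert(b != 0);
    return (real_t)(((int64_t)a * 65536) / b);
}

#else

typedef float real_t;

static real_t real_from_int(int v) { return (real_t)v; }
static int    real_to_int(real_t v) { return (int)v; }
static real_t real_mul(real_t a, real_t b) { return a * b; }
static real_t real_div(real_t a, real_t b) { assert(b != 0); return a / b; }

#endif
```

Code written against `real_t` and these helpers compiles in either mode, so the choice between speed and precision becomes a build option.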

The whole algorithm has four stages:

- Build a DCEL mesh from the original complex polygon (a DCEL, or doubly connected edge list, is similar to a quad-edge structure but simplified)

- If the polygon is concave or complex, first partition it into monotone polygon regions (maintaining the mesh structure)

- Quickly triangulate the monotone polygons on the mesh

- Merge the triangulated regions into convex polygons
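To give an idea of what a DCEL looks like, here is a minimal half-edge sketch. It is not the mesh code in gbox: `vertex_t`, `half_edge_t`, `contour_make`, `face_edge_count` and `edge_dst` are hypothetical names, and faces are reduced to integer ids to keep it short.

```c
#include <assert.h>
#include <stddef.h>

typedef struct half_edge half_edge_t;

typedef struct { double x, y; } vertex_t;

struct half_edge {
    vertex_t*    org;   /* origin vertex */
    half_edge_t* sym;   /* the opposite (twin) half-edge */
    half_edge_t* lnext; /* next half-edge around the left face */
    int          lface; /* id of the face on the left */
};

/* Link n vertices into one closed contour.
 * inner[i] runs from verts[i] to verts[i + 1] with face `inside` on its left;
 * outer[i] is its twin and has face `outside` on its left.
 */
static void contour_make(vertex_t* verts, half_edge_t* inner, half_edge_t* outer,
                         size_t n, int inside, int outside)
{
    assert(verts && inner && outer && n >= 3);

    for (size_t i = 0; i < n; i++) {
        size_t next = (i + 1) % n;
        size_t prev = (i + n - 1) % n;

        inner[i].org   = &verts[i];
        inner[i].sym   = &outer[i];
        inner[i].lnext = &inner[next];
        inner[i].lface = inside;

        /* the twin runs backwards, so its successor is the previous twin */
        outer[i].org   = &verts[next];
        outer[i].sym   = &inner[i];
        outer[i].lnext = &outer[prev];
        outer[i].lface = outside;
    }
}

/* Count the edges around the left face of e by following lnext. */
static size_t face_edge_count(half_edge_t const* e)
{
    size_t n = 0;
    half_edge_t const* it = e;
    do { n++; it = it->lnext; } while (it != e);
    return n;
}

/* The destination of e is the origin of its twin. */
static vertex_t* edge_dst(half_edge_t const* e)
{
    return e->sym->org;
}
```

Because every half-edge knows its twin and its successor, splitting a face or merging two faces only needs a few pointer updates, which is why the later stages can keep working on the same mesh.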

The implementation of the rasterization algorithm can be divided into seven stages:

- Simplify the mesh and preprocess some degenerate cases (for example, sub-regions that degenerate into points or lines)

- Build the list of vertex events and sort it (a priority queue based on a min-heap)

- Build the list of active edge regions and keep it sorted (insertion sort within local regions; in most cases this is O(n), and the count is small)

- Use the Bentley-Ottmann sweep algorithm to process all vertex events from the event queue, and calculate the intersection points and winding values (for the filling rule)

- If an intersection changes the topology of the mesh or the active edge list changes, repair the consistency of the mesh

- If a mesh face degenerates during processing, handle that as well

- Mark the left faces as "inside"; these are the regions of the final output
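The link between the winding value and the "inside" mark can be sketched as follows. This is a simplified illustration, not the code in monotone.c: the rule names, `winding_is_inside` and `sweep_mark_regions` are hypothetical, and each active edge is assumed to contribute +1 or -1 to the winding depending on its direction.

```c
#include <assert.h>
#include <stddef.h>

/* hypothetical rule names for this sketch */
typedef enum { RULE_ODD, RULE_NONZERO } fill_rule_t;

/* Decide from the winding value whether a region is filled. */
static int winding_is_inside(int winding, fill_rule_t rule)
{
    switch (rule) {
    case RULE_ODD:     return (winding % 2) != 0;
    case RULE_NONZERO: return winding != 0;
    }
    return 0;
}

/* Walk the active edges crossed by one sweep line, in sorted order.
 * dirs[i] is the winding contribution (+1 or -1) of edge i.
 * inside[i] receives whether the region just after edge i is filled.
 * Returns the winding after the last edge, which is 0 for closed contours.
 */
static int sweep_mark_regions(int const* dirs, size_t n, fill_rule_t rule, int* inside)
{
    assert(dirs && inside);

    int winding = 0;
    for (size_t i = 0; i < n; i++) {
        winding += dirs[i];
        inside[i] = winding_is_inside(winding, rule);
    }
    return winding;
}
```

For two nested contours with the same direction the crossings are +1, +1, -1, -1: the odd rule leaves a hole in the middle region, while the nonzero rule fills it.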

If you want to know more details of the algorithm, see: libtess2/alg_outline.md

An example of using the tessellator interface, taken from the source code in gbox/gl/render.c:

```c
static tb_void_t gb_gl_render_fill_convex(gb_point_ref_t points, tb_uint16_t count, tb_cpointer_t priv)
{
    // check
    tb_assert(priv && points && count);

    // apply it
    gb_gl_render_apply_vertices((gb_gl_device_ref_t)priv, points);

#ifndef GB_GL_TESSELLATOR_TEST_ENABLE
    // draw it
    gb_glDrawArrays(GB_GL_TRIANGLE_FAN, 0, (gb_GLint_t)count);
#else
    // the device
    gb_gl_device_ref_t device = (gb_gl_device_ref_t)priv;

    // make crc32
    tb_uint32_t crc32 = 0xffffffff ^ tb_crc_encode(TB_CRC_MODE_32_IEEE_LE, 0xffffffff, (tb_byte_t const*)points, count * sizeof(gb_point_t));

    // make color
    gb_color_t color;
    color.r = (tb_byte_t)crc32;
    color.g = (tb_byte_t)(crc32 >> 8);
    color.b = (tb_byte_t)(crc32 >> 16);
    color.a = 128;

    // enable blend
    gb_glEnable(GB_GL_BLEND);
    gb_glBlendFunc(GB_GL_SRC_ALPHA, GB_GL_ONE_MINUS_SRC_ALPHA);

    // apply color
    if (device->version >= 0x20) gb_glVertexAttrib4f(gb_gl_program_location(device->program, GB_GL_PROGRAM_LOCATION_COLORS), (gb_GLfloat_t)color.r / 0xff, (gb_GLfloat_t)color.g / 0xff, (gb_GLfloat_t)color.b / 0xff, (gb_GLfloat_t)color.a / 0xff);
    else gb_glColor4f((gb_GLfloat_t)color.r / 0xff, (gb_GLfloat_t)color.g / 0xff, (gb_GLfloat_t)color.b / 0xff, (gb_GLfloat_t)color.a / 0xff);

    // draw the edges of the filled contour
    gb_glDrawArrays(GB_GL_TRIANGLE_FAN, 0, (gb_GLint_t)count);

    // disable blend
    gb_glDisable(GB_GL_BLEND);
#endif
}
static tb_void_t gb_gl_render_fill_polygon(gb_gl_device_ref_t device, gb_polygon_ref_t polygon, gb_rect_ref_t bounds, tb_size_t rule)
{
    // check
    tb_assert(device && device->tessellator);

#ifdef GB_GL_TESSELLATOR_TEST_ENABLE
    // set mode
    gb_tessellator_mode_set(device->tessellator, GB_TESSELLATOR_MODE_TRIANGULATION);
//  gb_tessellator_mode_set(device->tessellator, GB_TESSELLATOR_MODE_MONOTONE);
#endif

    // set rule
    gb_tessellator_rule_set(device->tessellator, rule);

    // set func
    gb_tessellator_func_set(device->tessellator, gb_gl_render_fill_convex, device);

    // done tessellator
    gb_tessellator_done(device->tessellator, polygon, bounds);
}
```

For the full details of the algorithm, please refer to my implementation: monotone.c