What is robots.txt

How to create a robots.txt file

How to write robots.txt rules

Common robots.txt directives and what they do:

- `User-agent: *` applies the rules that follow to all crawlers. `*` is a wildcard that matches any user agent.
- `Disallow: /admin/` blocks crawling of everything under the /admin/ directory.
- `Disallow: /require/` blocks crawling of everything under the /require/ directory.
- `Disallow: /ABC/` blocks crawling of everything under the /ABC/ directory.
- `Disallow: /cgi-bin/*.htm` blocks URLs under /cgi-bin/ (including subdirectories) that contain ".htm", such as /cgi-bin/docs/page.htm. Without a trailing `$` it also matches ".html" URLs; use `/cgi-bin/*.htm$` to match only the ".htm" suffix.
- `Disallow: /*?*` blocks every URL that contains a question mark (?).
- `Disallow: /*.jpg$` blocks every URL ending in ".jpg", i.e. all JPG images on the site.
- `Disallow: /ab/adc.html` blocks only the file adc.html in the /ab/ folder.
- `Allow: /cgi-bin/` allows crawling of everything under the /cgi-bin/ directory.
- `Allow: /tmp` allows crawling of every path that starts with /tmp, including the entire /tmp/ directory.
- `Allow: /*.htm$` allows URLs ending in ".htm". Crawling is allowed by default, so an Allow rule only has an effect as an exception to a broader Disallow rule.
- `Allow: /*.gif$` allows URLs ending in ".gif", i.e. GIF images.
- `Sitemap:` tells crawlers where the site's sitemap is; its value is the sitemap's full URL.
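The wildcard rules above follow the matching semantics Google documents and RFC 9309 standardizes: `*` matches any sequence of characters, a trailing `$` means the URL path must end there, and any other pattern is a plain prefix match. The Python sketch below, assuming those semantics, turns a pattern into a regular expression and tests it against a path. The function name `rule_matches` and the sample paths are illustrative only, and real crawlers also normalize percent-encoding, which this sketch skips.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt path pattern matches a URL path.

    '*' matches any sequence of characters (including '/'), and a
    trailing '$' means the path must end right there. Without '$',
    the pattern is a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape each literal piece, then join the pieces with '.*' for every '*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += r"\Z"  # '$' in robots.txt: nothing may follow
    # re.match anchors at the start of the path, giving prefix semantics.
    return re.match(regex, path) is not None


examples = [
    ("/admin/", "/admin/login.php"),            # True: prefix match
    ("/cgi-bin/*.htm", "/cgi-bin/a/b.htm"),     # True: '*' spans subdirectories
    ("/cgi-bin/*.htm", "/cgi-bin/page.html"),   # True: no '$', so .html matches too
    ("/*?*", "/search?q=seo"),                  # True: URL contains '?'
    ("/*.jpg$", "/img/photo.jpg"),              # True: path ends in .jpg
    ("/*.jpg$", "/img/photo.jpg?w=300"),        # False: '$' requires the end
    ("/.jpg$", "/img/photo.jpg"),               # False: only matches "/.jpg"
    ("/tmp", "/tmp/cache/x.html"),              # True: prefix match
]
for pattern, path in examples:
    print(f"{pattern:<16} {path:<22} {rule_matches(pattern, path)}")
```

The `/.jpg$` example shows why the leading `/*` matters: without it the rule matches only the literal path `/.jpg`, not images elsewhere on the site.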

Recommended robots.txt rules for WordPress

The basic rule set blocks the WordPress admin area while keeping admin-ajax.php crawlable:

User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
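For Google and other crawlers that follow RFC 9309, rule order within a group does not matter: the most specific matching rule wins, measured by pattern length, and an Allow beats an equally long Disallow. That is why `Allow: /wp-admin/admin-ajax.php` carves one file out of `Disallow: /wp-admin/`. The Python sketch below shows only that precedence logic; for brevity it assumes prefix-only patterns with no `*` or `$`, and `is_allowed` is a hypothetical helper name. Not every parser behaves this way: Python's `urllib.robotparser`, for example, applies the first matching rule in file order.

```python
def is_allowed(rules, path: str) -> bool:
    """Decide whether `path` may be crawled, RFC 9309 style.

    rules: list of (directive, prefix) pairs, e.g. ("disallow", "/wp-admin/").
    Among rules whose prefix matches, the longest prefix wins; on a tie,
    Allow wins. If nothing matches, the path is allowed.
    Wildcards ('*' and '$') are deliberately not handled in this sketch.
    """
    best_len, allowed = -1, True
    for directive, prefix in rules:
        if prefix and path.startswith(prefix):
            is_allow = directive.lower() == "allow"
            # A longer match overrides; an equal-length Allow overrides Disallow.
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len, allowed = len(prefix), is_allow
    return allowed


WORDPRESS_BASIC = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed(WORDPRESS_BASIC, "/wp-admin/options.php"))     # False
print(is_allowed(WORDPRESS_BASIC, "/wp-admin/admin-ajax.php"))  # True
print(is_allowed(WORDPRESS_BASIC, "/hello-world/"))             # True
```

Reversing `WORDPRESS_BASIC` gives the same answers, which is the point of longest-match precedence.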

A stricter rule set, which also blocks several third-party crawlers (YandexBot, DotBot, BLEXBot, YaK) completely and declares the sitemap:

User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
Disallow: /?s=*
Allow: /wp-admin/admin-ajax.php

User-agent: YandexBot
Disallow: /

User-agent: DotBot
Disallow: /

User-agent: BLEXBot
Disallow: /

User-agent: YaK
Disallow: /

Sitemap: https://blog.naibabiji.com/sitemap_index.xml
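To sanity-check how crawlers are assigned to groups in the second WordPress example, Python's standard-library `urllib.robotparser` can parse the file directly. This is a minimal sketch: the test paths (/hello-world/ is a made-up post URL) are assumptions, and because `robotparser` does not understand `*`/`$` wildcards and applies the first matching rule rather than the longest, it is relied on here only for user-agent grouping and `Sitemap` extraction. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# The stricter WordPress rule set, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
Disallow: /?s=*
Allow: /wp-admin/admin-ajax.php

User-agent: YandexBot
Disallow: /

User-agent: DotBot
Disallow: /

User-agent: BLEXBot
Disallow: /

User-agent: YaK
Disallow: /

Sitemap: https://blog.naibabiji.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() also marks the rules as loaded

site = "https://blog.naibabiji.com"
# A named crawler matches its own group, which disallows everything.
print(parser.can_fetch("YandexBot", site + "/hello-world/"))  # False
# Crawlers without their own group fall back to "User-agent: *".
print(parser.can_fetch("Googlebot", site + "/hello-world/"))  # True
print(parser.can_fetch("Googlebot", site + "/wp-admin/"))     # False
# Sitemap lines are collected independently of any group.
print(parser.site_maps())  # ['https://blog.naibabiji.com/sitemap_index.xml']
```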

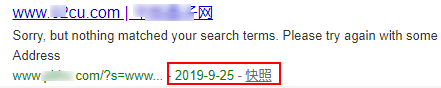

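Compared with the basic rule set, the stricter one adds two Disallow rules:

- `Disallow: /wp-content/plugins/` keeps crawlers out of the plugin directory.
- `Disallow: /?s=*` keeps crawlers off WordPress search result pages, whose URLs look like /?s=keyword.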

How to check whether robots.txt is effective