KNN: the k-nearest neighbors algorithm

K-nearest neighbors algorithm

The k-nearest neighbors algorithm (KNN) is very simple and effective. The model representation of KNN is the entire training data set. Simple, right?

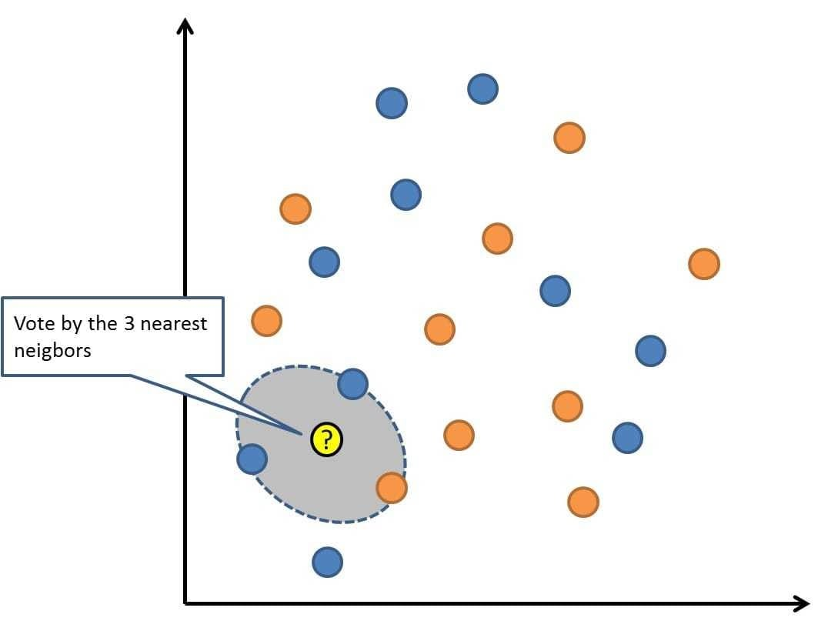

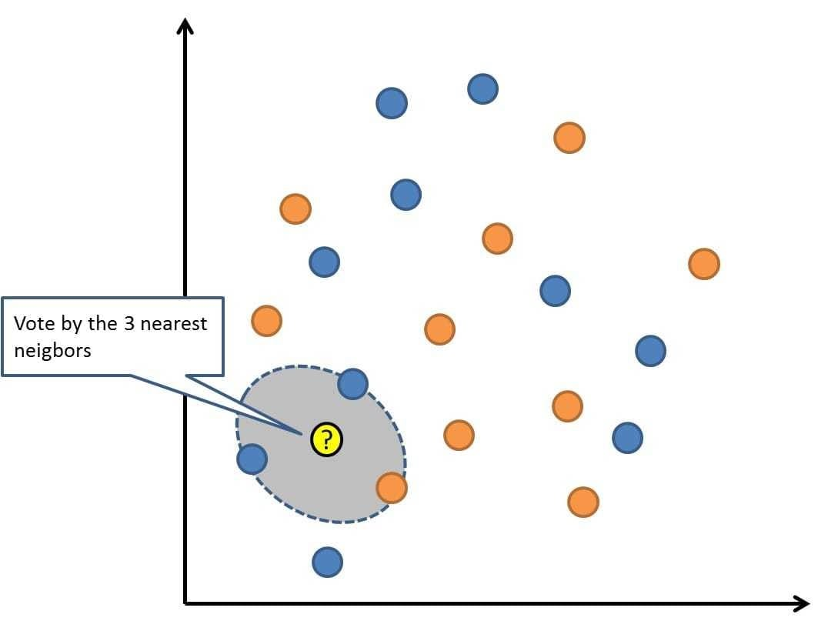

Predictions for a new data point are made by searching the entire training set for the K most similar instances (neighbors) and summarizing the output variable of those K instances. For regression problems, this might be the mean output value; for classification problems, it might be the mode (most common) class value.
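As a minimal sketch of that summarizing step, assuming the K neighbors have already been found: the helper name `summarize_neighbors` and its `task` parameter are made up for illustration and do not come from any library.

```python
from collections import Counter
from statistics import mean


def summarize_neighbors(neighbor_outputs, task="classification"):
    """Combine the outputs of the K nearest neighbors into one prediction."""
    if task == "regression":
        # Regression: average the neighbors' output values.
        return mean(neighbor_outputs)
    # Classification: take the most common class among the neighbors.
    return Counter(neighbor_outputs).most_common(1)[0][0]


print(summarize_neighbors(["cat", "dog", "cat"]))               # cat
print(summarize_neighbors([2.0, 4.0, 6.0], task="regression"))  # 4.0
```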

The trick is how to determine the similarity between data instances. If your attributes all have the same scale (for example, all in inches), the simplest technique is to use Euclidean distance, a number you can calculate directly from the differences between each input variable.
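For reference, the Euclidean distance between two instances is the square root of the sum of squared differences of their attributes. A small sketch (the function name `euclidean_distance` is just an illustrative choice):

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length numeric vectors."""
    if len(a) != len(b):
        raise ValueError("both instances need the same number of attributes")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
```

On Python 3.8 and later, the standard library's `math.dist` computes the same value.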

KNN may need a lot of memory or space to store all the data, but it only performs a calculation (or learns) when a prediction is needed, just in time. You can also update and curate your training instances over time to keep your predictions accurate.

The idea of distance or closeness can break down in very high dimensions (many input variables), which can hurt the algorithm's performance on your problem. This is called the curse of dimensionality. It suggests that you use only the input variables most relevant to predicting the output variable.
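One way to see this effect is to compare the farthest and nearest distances from a query point as the number of dimensions grows. The sketch below is an assumption-laden toy experiment (uniform random points, a fixed seed, and a made-up helper `distance_contrast`), not a proof: as the dimension increases, the ratio tends to shrink toward 1, so the "nearest" neighbor is barely nearer than any other point.

```python
import math
import random


def distance_contrast(dimensions, n_points=200, seed=0):
    """Ratio of farthest to nearest distance from one random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dimensions)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dimensions)]
        distances.append(math.dist(query, point))
    return max(distances) / min(distances)


for d in (2, 10, 100, 1000):
    print(d, round(distance_contrast(d), 2))
```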

Baidu Encyclopedia version

The nearest neighbor algorithm, or kNN (k-Nearest Neighbor) classification algorithm, is one of the simplest methods in data mining classification. "K nearest neighbors" means the k closest neighbors: each sample can be represented by its k nearest neighbors.

The core idea of the kNN algorithm is that if most of the k nearest samples to a given sample in the feature space belong to a certain category, the sample also belongs to that category and shares the characteristics of the samples in it. The method decides the category of the sample to be classified based only on the categories of the nearest one or several samples, so each decision involves only a small number of adjacent samples. Because kNN relies on a limited set of nearby samples rather than on discriminating between class domains, it is better suited than other methods to sample sets whose class domains intersect or overlap heavily.

Wikipedia version

In pattern recognition, the k-nearest neighbors algorithm (k-NN) is a nonparametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression:

- In k-NN classification, the output is a class membership. An object is classified by a plurality vote of its neighbors and is assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k=1, the object is simply assigned to the class of its single nearest neighbor.

- In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.
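To make "lazy" concrete, here is a minimal sketch of a classifier whose `fit` only stores the data and whose `predict` does all the work. The class name `LazyKNNClassifier` and its `add` method are hypothetical names for this illustration, and the points are toy data.

```python
import math
from collections import Counter


class LazyKNNClassifier:
    """Stores training data; does all distance work at prediction time."""

    def __init__(self, k=3):
        self.k = k
        self.points = []
        self.labels = []

    def fit(self, points, labels):
        # "Training" is just keeping a copy of the data.
        self.points = list(points)
        self.labels = list(labels)
        return self

    def add(self, point, label):
        # New examples can be appended without refitting anything.
        self.points.append(point)
        self.labels.append(label)

    def predict(self, query):
        # All computation is deferred to this call.
        ranked = sorted(
            zip(self.points, self.labels),
            key=lambda pair: math.dist(pair[0], query),
        )
        votes = Counter(label for _, label in ranked[: self.k])
        return votes.most_common(1)[0][0]


model = LazyKNNClassifier(k=3).fit(
    [(1.0, 1.0), (1.2, 0.8), (5.0, 5.0), (5.2, 4.9)],
    ["a", "a", "b", "b"],
)
print(model.predict((1.1, 1.0)))  # a
model.add((4.8, 5.1), "b")
print(model.predict((5.0, 5.0)))  # b
```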

Advantages and disadvantages

Advantages:

- The theory is mature and simple, and it can be used for both classification and regression;

- It can be used for nonlinear classification;

- The training time complexity is O(n), since training only stores the samples;

- It makes no assumptions about the data, is often quite accurate, and is relatively insensitive to outliers when K is not too small;

- KNN is an online technique: new data can be added directly to the data set without retraining;

- KNN theory is simple and easy to implement;

Disadvantages:

- It performs poorly on imbalanced samples (that is, when some categories have many samples and others have few);

- A large amount of memory is required;

- For data sets with a large sample size, the computational cost is relatively high (mostly from distance calculations);

- When the samples are imbalanced, predictions are biased toward the larger categories, because their samples tend to dominate the K nearest neighbors;

- KNN repeats a global computation over the whole training set for every classification;

- There is no theoretically optimal way to choose k; it is usually chosen with K-fold cross-validation;
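The last point, choosing k by cross-validation, can be sketched as follows. This is a simplified illustration on made-up toy data with two deliberately mislabeled points; the helper names (`knn_predict`, `cross_val_error`, `choose_k`) and the strided fold split are assumptions of this sketch, not a standard API.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """train is a list of (point, label) pairs."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_error(data, k, n_folds=3):
    """Misclassification rate of k-NN, averaged over n_folds folds."""
    errors = 0
    for fold in range(n_folds):
        # Every n_folds-th example (offset by fold) is held out.
        held_out = data[fold::n_folds]
        train = [pair for i, pair in enumerate(data) if i % n_folds != fold]
        errors += sum(knn_predict(train, p, k) != y for p, y in held_out)
    return errors / len(data)


def choose_k(data, candidates, n_folds=3):
    """Return the candidate k with the lowest cross-validated error."""
    return min(candidates, key=lambda k: cross_val_error(data, k, n_folds))


data = [
    ((0, 0), "a"), ((4, 4), "b"), ((0, 1), "a"), ((4, 5), "b"),
    ((1, 0), "a"), ((5, 4), "b"), ((1, 1), "a"), ((5, 5), "b"),
    ((0.5, 0.5), "a"), ((4.5, 4.5), "b"),
    ((0.6, 0.4), "b"), ((4.4, 4.6), "a"),  # two deliberately noisy labels
]
print(choose_k(data, candidates=(1, 3, 5)))  # 3
```

With k=1 the noisy labels mislead their neighbors, so the cross-validated error is higher than with k=3.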

My understanding

As I understand it, KNN computes the distance between the test sample and every training sample, takes the labels of the K nearest training samples, and uses the most frequent label as the predicted label for the test sample.

The idea is: if most of the k nearest samples to a sample in the feature space belong to a certain category, the sample is assigned to that category too. In the KNN algorithm, the selected neighbors are all objects that have already been correctly classified. The classification decision depends only on the categories of the nearest one or several samples.

Algorithm flow

1) Calculate the distance between the test sample and each training sample;

2) Sort by increasing distance;

3) Select the K points with the smallest distance;

4) Count how often each category occurs among those K points;

5) Return the most frequent category among those K points as the predicted class of the test sample.
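The five steps map almost line for line onto code. A minimal sketch using Euclidean distance and toy data (the function name `knn_classify` is an illustrative choice):

```python
import math
from collections import Counter


def knn_classify(train_points, train_labels, query, k):
    # 1) Distance from the query to every training point.
    distances = [math.dist(p, query) for p in train_points]
    # 2) Sort training indices by increasing distance.
    order = sorted(range(len(train_points)), key=lambda i: distances[i])
    # 3) Keep the K closest.
    nearest = order[:k]
    # 4) Count how often each category appears among them.
    counts = Counter(train_labels[i] for i in nearest)
    # 5) Return the most frequent category.
    return counts.most_common(1)[0][0]


points = [(1, 1), (2, 1), (1, 2), (6, 6), (7, 6), (6, 7)]
labels = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_classify(points, labels, (2, 2), k=3))  # red
print(knn_classify(points, labels, (6, 5), k=3))  # blue
```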

Value of K

K is the number of neighbors: how many adjacent points are used when predicting the target point.

The value of K is very important because:

- If the value of K is too small, any noise will have a relatively large impact on the prediction. For example, when K is 1 and the nearest point is noise, the prediction will be wrong. A smaller K makes the overall model more complex and prone to overfitting;

- If the value of K is too large, the prediction uses training instances from a larger neighborhood, and the approximation error of learning increases. Instances far from the target point then also influence the prediction and can make it wrong. A larger K makes the overall model simpler;

- If K == N, every instance is used, so the prediction is always the category with the most instances in the training set, which has no practical value for prediction;

- K should be odd where possible, so that the vote is more likely to produce a clear majority category (with two categories, an odd K cannot tie). With an even K, the vote may tie, which does not help prediction.
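These effects can be seen on a small example. The sketch below uses made-up points with one noisy "b" label placed inside the "a" cluster, and a hypothetical helper `vote_counts` that returns the votes among the K nearest neighbors.

```python
import math
from collections import Counter

points = [(0, 0), (1, 0), (0, 1), (1, 1), (0.5, 0.6),
          (5, 5), (6, 5), (5, 6), (6, 6), (6, 7)]
labels = ["a", "a", "a", "a", "b",  # (0.5, 0.6) is a noisy "b" inside cluster "a"
          "b", "b", "b", "b", "b"]


def vote_counts(query, k):
    """Votes per category among the k training points nearest to query."""
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], query))
    return Counter(labels[i] for i in order[:k])


query = (0.5, 0.5)  # sits in the middle of cluster "a"
for k in (1, 2, 3, len(points)):
    print(k, dict(vote_counts(query, k)))
```

With K=1 the single noisy point decides the vote, with K=2 the vote ties, with K=3 category "a" wins, and with K=N category "b" wins only because it has more training instances overall.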

A common method is to start from k=1 and estimate the classifier's error rate on a held-out test set. Repeat the process, increasing K by 1 each time so that one more neighbor is included, and select the K with the lowest error rate. In general, K does not exceed 20, with the square root of n (the number of training samples) as an upper limit. As the data set grows, K will also grow.
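A rough sketch of that sweep, assuming a small held-out set and capping K at the integer square root of the training set size. The helper names (`knn_predict`, `error_rate`, `sweep_k`) and the data are invented for this example.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """train is a list of (point, label) pairs."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def error_rate(train, held_out, k):
    wrong = sum(knn_predict(train, p, k) != y for p, y in held_out)
    return wrong / len(held_out)


def sweep_k(train, held_out):
    """Try k = 1, 2, ... up to about sqrt(n); return (best_k, error rates)."""
    max_k = max(1, math.isqrt(len(train)))
    rates = {k: error_rate(train, held_out, k) for k in range(1, max_k + 1)}
    best_k = min(rates, key=rates.get)  # smallest k wins on equal error
    return best_k, rates


train = [((0, 0), "a"), ((1, 0), "a"), ((0, 1), "a"), ((1, 1), "a"),
         ((0.5, 0.6), "b"),  # noisy label inside cluster "a"
         ((5, 5), "b"), ((6, 5), "b"), ((5, 6), "b"), ((6, 6), "b")]
held_out = [((0.5, 0.5), "a"), ((0.4, 0.7), "a"), ((5.5, 5.5), "b")]

best_k, rates = sweep_k(train, held_out)
print(best_k)  # 3
```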

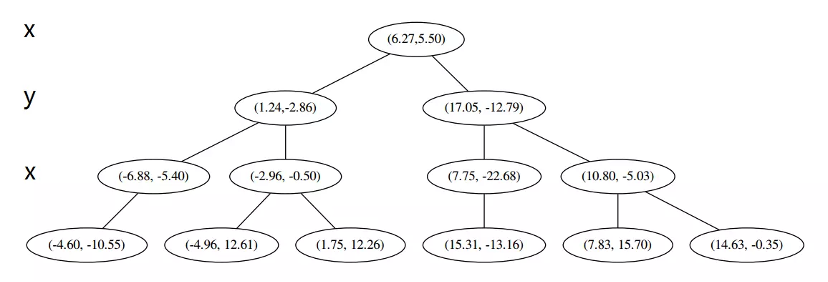

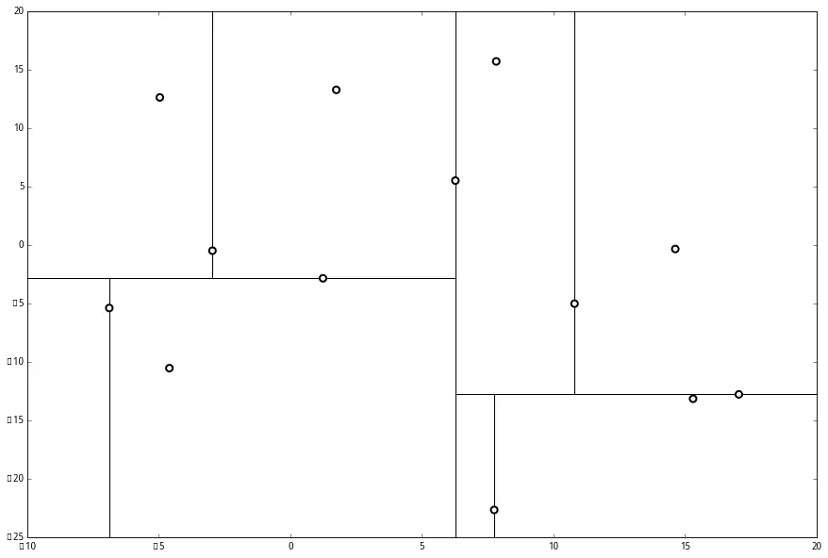

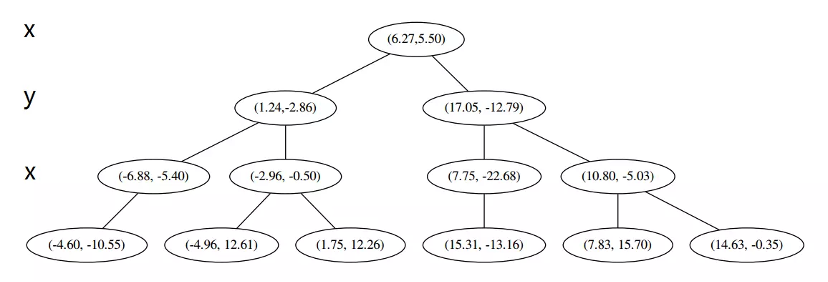

kdtree

The purpose of a k-d tree is to speed up the neighbor search step of the KNN algorithm and so improve the overall running speed.

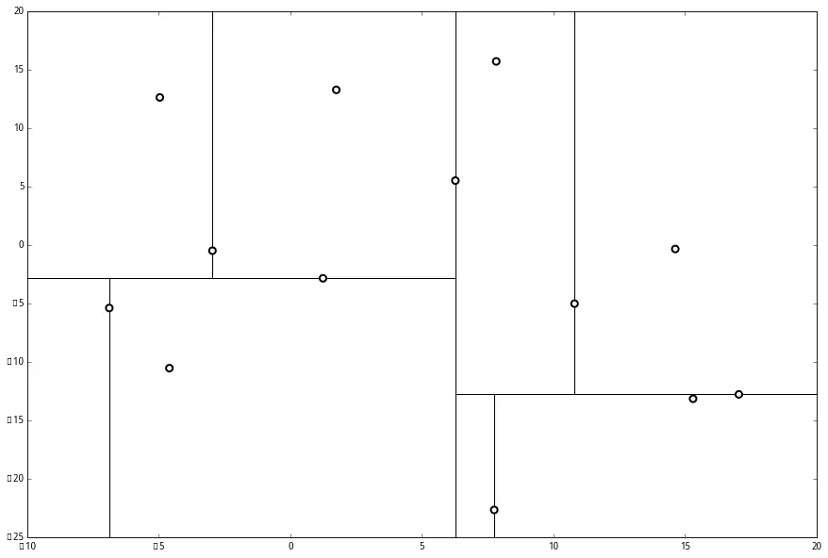

A k-d tree is a binary tree whose nodes are k-dimensional points. Every non-leaf node can be thought of as a hyperplane that divides the space into two half-spaces. The node's left subtree holds the points to the left of the hyperplane, and its right subtree holds the points to the right. The hyperplane is chosen as follows: each node is associated with one of the k dimensions, and the hyperplane is perpendicular to that dimension's axis. So if you split on the x axis, all points with an x value smaller than the node's will appear in the left subtree, and all points with a larger x value will appear in the right subtree. The hyperplane is then determined by that x value, and its normal is the unit vector of the x axis.

In short, it is:

- Select a dimension (x, y, z...);

- Find the median of the points' values along that dimension;

- Divide the data into two parts at the median;

- Repeat the same operation on each part until it contains a single data point.
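The construction can be sketched in a few lines, together with a nearest-neighbor search that shows where the speedup comes from. This is one common variant, assumed here for illustration: it cycles through the dimensions by depth and stores the median point in each node, with nodes represented as plain dicts. The names `build_kdtree` and `nearest` are hypothetical.

```python
import math


def build_kdtree(points, depth=0):
    """Recursively split points at the median of one coordinate per level."""
    if not points:
        return None
    axis = depth % len(points[0])  # cycle through the dimensions
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2         # median position along this axis
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }


def nearest(node, query, best=None):
    """Return the stored point closest to query, pruning far half-spaces."""
    if node is None:
        return best
    if best is None or math.dist(node["point"], query) < math.dist(best, query):
        best = node["point"]
    diff = query[node["axis"]] - node["point"][node["axis"]]
    if diff < 0:
        near, far = node["left"], node["right"]
    else:
        near, far = node["right"], node["left"]
    best = nearest(near, query, best)
    # Only cross the splitting hyperplane if a closer point could lie beyond it.
    if abs(diff) < math.dist(best, query):
        best = nearest(far, query, best)
    return best


points = [(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)]
tree = build_kdtree(points)
print(tree["point"])          # (7, 2)
print(nearest(tree, (9, 2)))  # (8, 1)
```

The pruning test is what saves work: a whole subtree is skipped whenever the query is farther from the splitting hyperplane than from the best point found so far.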

Closing~ 👊

This article was created by Chakhsu Lau and is licensed under the Creative Commons Attribution 4.0 International License.

Unless marked as reprinted or sourced elsewhere, all articles on this website are original or translated by this website. Please credit the author when reprinting.