Nginx with a Node.js upstream: 502 errors

Background

We recently started monitoring nginx and found some 5xx errors, mainly 502 and 503. The 503s occurred because request concurrency exceeded the configured limit, so the remedy there is to ask the customer to reduce concurrency. Later, a customer also reported occasional 502s. They were rare: about 10 a day on that customer's interface and about 100 a day across the whole system. Adding more instances partway through seemed to ease the problem, but the customer kept asking about it, which was quite troublesome, so I used my spare time before the holiday to look for information and investigate.

Details

When nginx reports 502, the error log is as follows:

1. Connection reset by peer

2019/01/31 11:50:40 [error] 19324#0: *65019204 recv() failed (104: Connection reset by peer) while reading response header from upstream, client: 1.119.136.18, server: xxx.com, request: "POST /path/abc HTTP/1.0", upstream: "http://xxx:12345/path/abc", host: "xxx.com"

2. upstream prematurely closed connection

2019/01/31 13:39:04 [error] 19323#0: *65264092 upstream prematurely closed connection while reading response header from upstream, client: 42.62.19.174, server: xxx.com, request: "POST /path/abc HTTP/1.1", upstream: "http://xxx:12345/path/abc", host: "xxx.com"

No exception shows up in the Node service itself, although it is possible that an error occurred without being logged.
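To see how often each of the two failure modes occurs, the error log can be tallied with a small script. The following is a minimal sketch, not part of the original investigation: the function names and the category labels are made up for illustration, and the patterns are taken only from the two log lines quoted above.

```javascript
// Classify one nginx error-log line into one of the two upstream
// failure modes quoted above. The labels are hypothetical.
function classifyUpstreamError(line) {
  if (/recv\(\) failed \(104: Connection reset by peer\)/.test(line)) {
    return 'reset_by_peer'
  }
  if (/upstream prematurely closed connection/.test(line)) {
    return 'prematurely_closed'
  }
  return 'other'
}

// Count how many lines fall into each category.
function countUpstreamErrors(lines) {
  const counts = { reset_by_peer: 0, prematurely_closed: 0, other: 0 }
  for (const line of lines) {
    counts[classifyUpstreamError(line)] += 1
  }
  return counts
}
```

Passing the lines of a day's error log to countUpstreamErrors gives a per-category count that can be compared before and after a configuration change.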

Problem analysis

1. Both error messages indicate that the upstream service (the Node service) dropped the connection. Some sources online attribute the first message to the client actively closing the connection, but the nginx request log shows that the errors occur on every customer's interfaces and are also triggered when the service restarts, so the problem lies in our own Node service.

2. Material online suggests lowering the upstream keepalive value, because too large a value leaves too many idle long-lived connections. If the upstream closes one of these connections and it is left half-closed, nginx has not yet noticed that the connection is unusable. Sending a request on it at that moment triggers error 2 above.

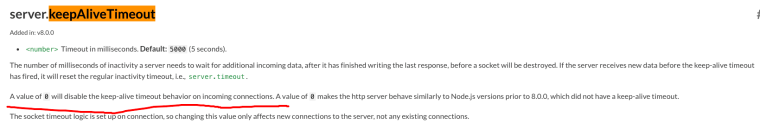

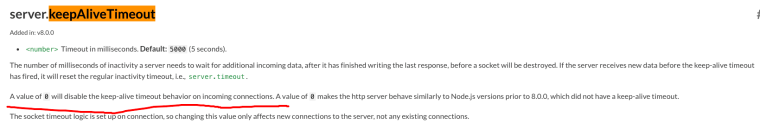

3. Why does the Node service actively close long-lived connections? Since Node 8.0.0, the HTTP server has a keepAliveTimeout parameter with a default value of 5000 ms. It sets how long a long-lived connection may stay idle. Once the idle time exceeds it, the server actively closes the connection and then waits for the initiator to acknowledge and close its side.

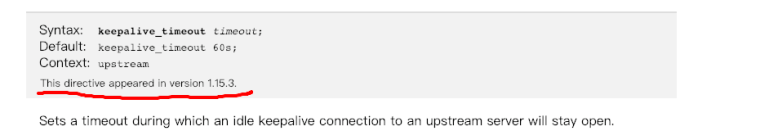

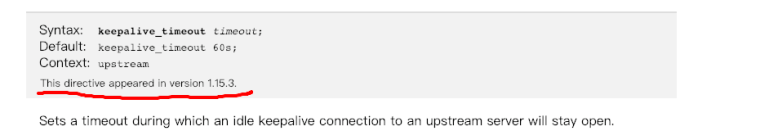

4. Idle long-lived connections do need to be reclaimed so that they don't tie up resources, but it is better for the side that sends requests to initiate the close, so that a connection is never closed just as a request is about to be sent on it. In this scenario nginx initiates the long-lived connections, so it could be the one to close them. However, the nginx version the service currently runs has no official upstream keepalive_timeout directive; that requires either a third-party module or an upgrade to 1.15.3. Both options mean restarting nginx, which has a large impact on stability, so the Node service should be adjusted first.
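The behaviour described in points 2 and 3 can be observed in isolation. The following is a minimal sketch, not the author's code: it starts a throwaway Node HTTP server with a short keepAliveTimeout and uses a raw TCP client as a stand-in for nginx's upstream connection. The name measureIdleClose, the loopback address and the short timeout are assumptions chosen for illustration.

```javascript
const http = require('http')
const net = require('net')

// Resolves with how long (in ms) an idle keep-alive connection survived
// before the Node server closed it.
function measureIdleClose(keepAliveTimeoutMs) {
  return new Promise((resolve, reject) => {
    const server = http.createServer((req, res) => res.end('ok'))
    server.keepAliveTimeout = keepAliveTimeoutMs

    server.listen(0, '127.0.0.1', () => {
      const { port } = server.address()
      let idleSince = null

      // Raw TCP client standing in for nginx's upstream connection.
      const socket = net.connect(port, '127.0.0.1', () => {
        socket.write(
          'GET / HTTP/1.1\r\nHost: localhost\r\nConnection: keep-alive\r\n\r\n'
        )
      })

      socket.on('data', () => {
        // First response bytes arrived; from here on the connection is idle.
        if (idleSince === null) idleSince = Date.now()
      })
      socket.on('error', reject)

      // The client never hangs up first, so 'close' means the server did.
      socket.on('close', () => {
        server.close()
        resolve(Date.now() - idleSince)
      })
    })
  })
}
```

Calling measureIdleClose(300).then(console.log) should print a value in the region of the configured timeout; the exact figure depends on the Node version and timer granularity. A proxy that picks this connection from its pool just as the server closes it is what produces the 502.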

Fixing the problem

First, the detours, so that others can avoid the same pitfalls. My first suspicion was that the upstream keepalive value was too large. It was 64 at the time; after changing it to 5, the 502s still kept appearing.

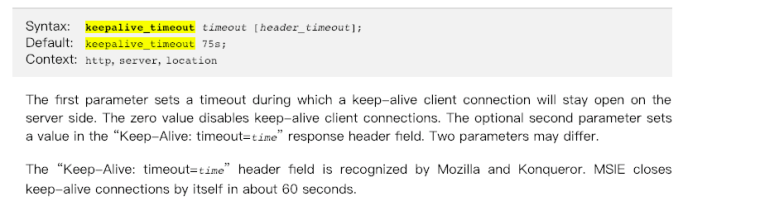

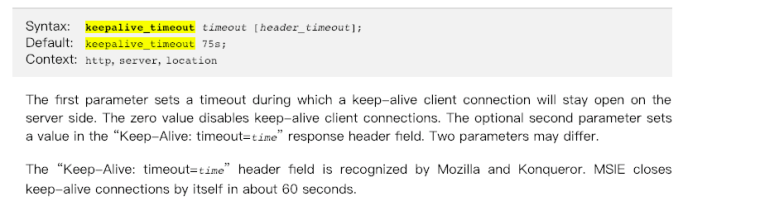

Next, I adjusted keepalive_timeout in the http, server, and location blocks. The nginx documentation gives its default value as 75s. My reasoning was that since Node's default has been 5s since 8.0, setting nginx to 4s would make nginx close connections first and avoid the premature disconnects. The problem remained with the 4s setting. It turned out that this keepalive_timeout governs connections between nginx and the client, not the upstream. The upstream equivalent is officially supported only from 1.15.3; older versions need a third-party module, as the nginx documentation confirms.
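For readers who can run nginx 1.15.3 or later, the upstream-side setting might look like the fragment below. This is a sketch under assumptions, not the configuration used in this article: the upstream name node_backend and the address are placeholders, and the 4s value simply mirrors the reasoning above.

```nginx
upstream node_backend {                  # hypothetical upstream name
    server 127.0.0.1:12345;              # placeholder address
    keepalive 64;                        # idle upstream connections kept per worker
    keepalive_timeout 4s;                # upstream idle timeout, nginx >= 1.15.3 only
}

server {
    location / {
        proxy_pass http://node_backend;
        proxy_http_version 1.1;          # upstream keep-alive needs HTTP/1.1
        proxy_set_header Connection "";  # do not forward "Connection: close"
    }
}
```

With the upstream timeout shorter than Node's keepAliveTimeout, nginx is the side that closes idle connections, which is the arrangement point 4 of the analysis prefers.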

After more searching, the cause finally surfaced: the Node keepAliveTimeout was not configured appropriately. According to the official Node.js documentation, setting keepAliveTimeout to 0 disables the timeout.

The Node service was adjusted as follows:

    const server = app.listen(options, function () {
      // A value of 0 disables the keep-alive timeout on incoming connections.
      // Since Node v8.0.0 the default is 5000 ms (5 seconds). When keep-alive is
      // enabled in nginx and this value is smaller than nginx's keep-alive
      // timeout, Node may actively close a long-lived connection to the nginx
      // proxy under low concurrency, and the affected request gets a 502.
      // nginx 1.12.1, the version currently in use, does not support an upstream
      // keepalive_timeout, so the Node-side timeout is disabled for now.
      server.keepAliveTimeout = 0
      console.info('listen', options.port, 'process.version', process.version)
    })

After the update, nginx no longer reports 502s.
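Disabling the timeout is the workaround for an nginx that cannot limit upstream idle time. Where the proxy's upstream idle timeout is known, an alternative is to keep Node's timeout longer than the proxy's so that the proxy always closes first. The helper below is a hypothetical sketch of that rule; the function names, the null convention and the 1000 ms margin are assumptions, not values from this article.

```javascript
// Hypothetical helper: choose a keepAliveTimeout for a Node server that
// sits behind a proxy. upstreamIdleMs is how long the proxy keeps idle
// upstream connections open, or null when that is unknown or not configurable.
function chooseKeepAliveTimeout(upstreamIdleMs, marginMs = 1000) {
  if (upstreamIdleMs == null) {
    return 0 // unknown proxy timeout: disable the Node timeout entirely
  }
  return upstreamIdleMs + marginMs // outlive the proxy so the proxy closes first
}

// Apply the chosen value to any object exposing keepAliveTimeout,
// such as the server returned by app.listen().
function applyKeepAlivePolicy(server, upstreamIdleMs) {
  server.keepAliveTimeout = chooseKeepAliveTimeout(upstreamIdleMs)
  return server
}
```

For the setup in this article the proxy-side timeout cannot be set, so applyKeepAlivePolicy(server, null) would reproduce the keepAliveTimeout = 0 fix.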

References

Analysis of several problems caused by the server actively closing a socket: the half-close problem in TCP's four-way connection teardown

Node.js http documentation

Chinese walkthrough of the nginx long-lived connection documentation

ngx_http_upstream_module

ngx_http_core_module

This article was written by Chakhsu Lau and is licensed under the Creative Commons Attribution 4.0 International License.
