Background

What is Kafka

- Messaging system: provides publish and subscribe for event streams.
- Persistent storage: fault-tolerant storage that guards against consumer node failures.
- Stream processing: operations such as stream aggregation and joins; concretely, handling out-of-order or delayed data, windows, state, and similar operations.

- Transmission efficiency of the data stream.
- The flow of producers sending messages in bulk and consumers pulling them, together with the timeliness, reliability, and throughput of those messages.
- A platform-level persistent storage scheme with high fault tolerance and multi-node backup.

Transmission efficiency

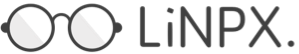

mmap

- Read ahead: a large disk block is loaded into memory in advance, so the speed at which a user program reads data from disk is then equal to the speed of copying from kernel memory into the memory allocated by the user program.
- Write behind: a number of small logical writes are absorbed by the disk cache and merged into one large physical write. The flush usually happens on the operating system's periodic sync; users can also call sync explicitly.
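The write-behind behaviour can be seen from user space with a memory-mapped file. The sketch below is only an illustration in Python, not Kafka code: the helper name `write_through_mmap`, the 4096-byte mapping size, and the file name are assumptions made for the example.

```python
import mmap
import os
import tempfile


def write_through_mmap(path, records, size=4096):
    """Write records into a memory-mapped file and return the bytes written.

    Hypothetical helper for illustration; `size` is an arbitrary mapping length.
    """
    with open(path, "wb") as f:
        f.truncate(size)  # a mapping needs a non-empty file to map

    with open(path, "r+b") as f, mmap.mmap(f.fileno(), size) as mm:
        pos = 0
        for rec in records:
            # A plain memory write: no write() system call per record.
            mm[pos:pos + len(rec)] = rec
            pos += len(rec)
        # Explicit sync; without it the OS flushes dirty pages on its own schedule.
        mm.flush()
    return pos


path = os.path.join(tempfile.mkdtemp(), "segment.log")
written = write_through_mmap(path, [b"msg-1;", b"msg-2;"])
```

Each small record becomes a memory store, and the single `flush()` plays the role of the explicit sync mentioned above.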

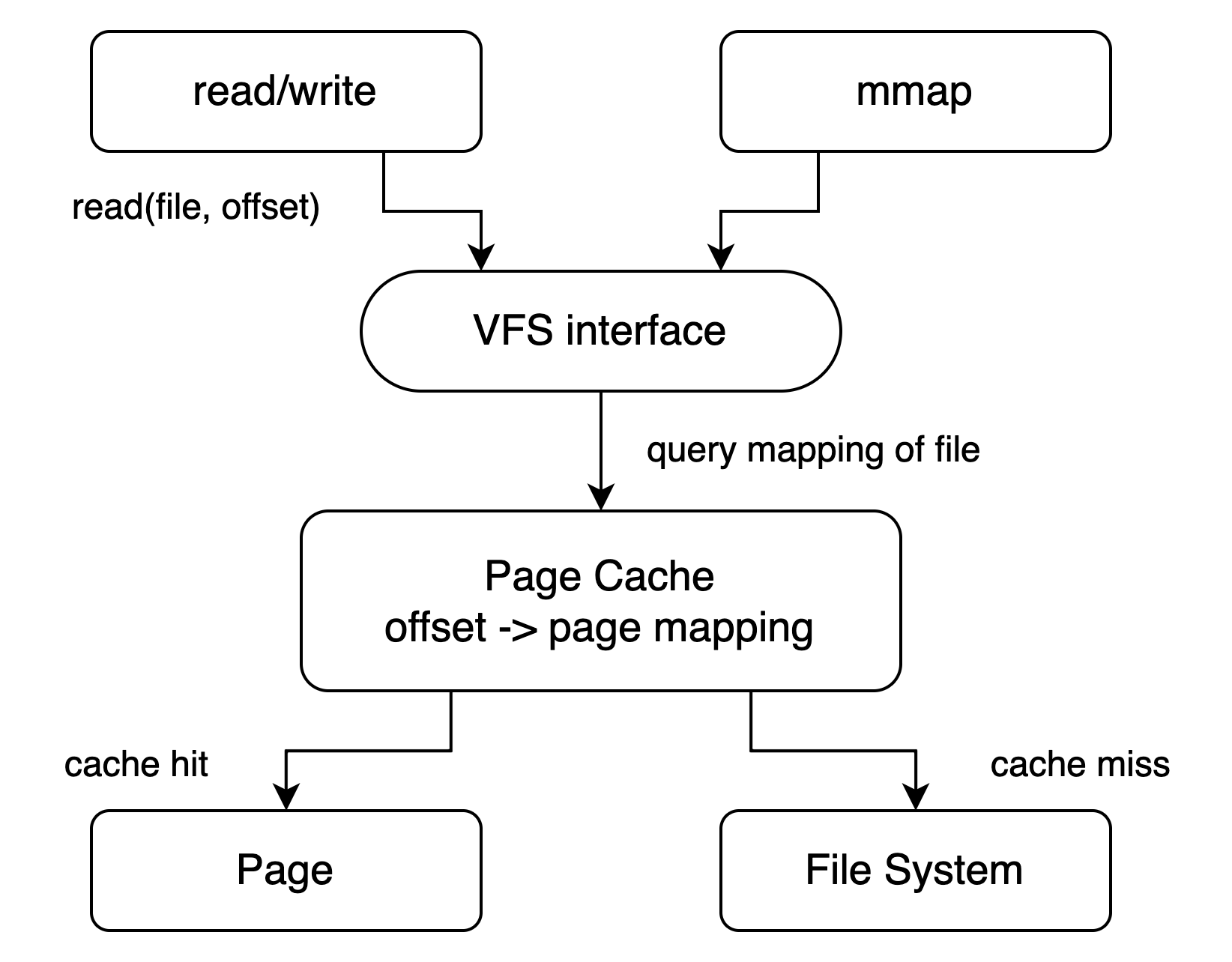

Zero-Copy

    #include <sys/sendfile.h>

    ssize_t sendfile(int out_fd, int in_fd, off_t *offset, size_t count);
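As a rough illustration of the same call from a higher-level language, Python's `socket.sendfile()` hands a file to a socket and uses `os.sendfile` where the platform provides it, falling back to ordinary `send()` otherwise. The helper name `send_file_zero_copy` and the use of a local socket pair in place of a real network connection are assumptions for this sketch.

```python
import os
import socket
import tempfile


def send_file_zero_copy(sock, path, offset=0, count=None):
    """Send a file over a connected stream socket; return the bytes sent.

    Hypothetical helper. socket.sendfile() delegates to os.sendfile when
    available, so the data need not pass through a user-space buffer.
    """
    with open(path, "rb") as f:  # the file must be opened in binary mode
        return sock.sendfile(f, offset=offset, count=count)


# A local socket pair stands in for a broker-to-consumer connection.
fd, segment_path = tempfile.mkstemp()
os.write(fd, b"kafka-log-segment")
os.close(fd)

sender, receiver = socket.socketpair()
sent = send_file_zero_copy(sender, segment_path)
received = receiver.recv(64)
sender.close()
receiver.close()
```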

Message quality

Space for time

Distributed design

In most message queue applications, there are three levels of message status:

- After the producer publishes a message, it does not matter whether a consumer receives it.
- After the producer publishes a message, more than one consumer must receive it.
- After the producer publishes a message, exactly one consumer must receive it.

- Message caching
  - Traffic peak shaving: when, per unit of time, consumers process less traffic than producers publish, the message system has to buffer the surplus messages.
  - Reprocessing: if a consumer hits an error while processing a message, or fails to process it after receiving it, the message must be processed again.

- Message ordering
  - Some business scenarios depend on the order of messages. Such messages must be processed in strict order, so consumers have to receive them in sequence.
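The peak-shaving requirement comes down to simple arithmetic: whatever the consumers cannot absorb in one time unit has to stay in the buffer. A minimal sketch, assuming a fixed consumer capacity per time unit; the function name and the traffic figures are made up for illustration.

```python
def backlog_over_time(produced, consumer_capacity):
    """Return the number of buffered messages after each time unit.

    `produced` lists the messages published per time unit;
    `consumer_capacity` is how many the consumers can handle per unit.
    """
    backlog = 0
    history = []
    for arrived in produced:
        # Surplus accumulates during a burst and drains once traffic drops.
        backlog = max(0, backlog + arrived - consumer_capacity)
        history.append(backlog)
    return history


# A burst of 20 messages per unit against a capacity of 10.
history = backlog_over_time([5, 20, 20, 5, 0], consumer_capacity=10)
# history == [0, 10, 20, 15, 5]
```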

- Kafka rule 1: messages are stored along two dimensions, virtual partitions and physical brokers, in a many-to-one relationship (many partitions per broker).
- Kafka rule 2: at any given time, only one consumer thread (within a consumer group) may pull messages from a given partition of a topic.
- Kafka rule 3: each partition maintains the consumer's pull progress through its cached messages.
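Rules 1 and 2 both describe a many-to-one mapping. The sketch below uses a plain round-robin assignment as a stand-in; Kafka's real placement and assignment strategies are more involved, and the topic, broker, and consumer names are hypothetical.

```python
def assign_round_robin(partitions, owners):
    """Map every partition to exactly one owner; one owner may hold many."""
    return {p: owners[i % len(owners)] for i, p in enumerate(partitions)}


partitions = [f"orders-{i}" for i in range(6)]

# Rule 1: many partitions live on one broker.
on_broker = assign_round_robin(partitions, ["broker-1", "broker-2", "broker-3"])

# Rule 2: each partition is read by exactly one consumer at a time.
read_by = assign_round_robin(partitions, ["consumer-a", "consumer-b"])
```

Because the result is a dictionary keyed by partition, a partition can never end up with two owners, which is the property rule 2 requires.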

For message cache capacity and traffic imbalance, Kafka can flexibly add nodes (brokers), coordinated through ZooKeeper.

For message status, Kafka maintains an offset on each partition that records consumption progress; consumers must commit it:

- If a consumer pulls messages from a partition without committing the latest offset, the partition's offset always lags behind the actual pull progress. When the partition is reassigned to another consumer, messages are delivered again starting from the last committed offset.
- If a consumer pulls a message, commits the offset after processing it successfully, and continues in the same way, progress advances normally. When a consumer fails unexpectedly while processing a message, Kafka detects that the consumer's connection has been interrupted and triggers a rebalance of the partitions associated with that consumer.
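The effect of committing or not committing can be shown with a toy in-memory model. This is not the Kafka client API: the `Partition` class, the `consume` function, and their parameters are hypothetical, and a single committed offset stands in for one consumer group's progress.

```python
class Partition:
    """Toy partition: an append-only log plus one committed offset."""

    def __init__(self, messages):
        self.log = list(messages)
        self.committed = 0  # where a newly assigned consumer starts reading

    def fetch(self, offset):
        """Return the message at `offset`, or None past the end of the log."""
        return self.log[offset] if offset < len(self.log) else None

    def commit(self, offset):
        self.committed = offset


def consume(partition, handler, commit=True, limit=None):
    """Pull from the committed offset; optionally commit after each message."""
    position = partition.committed
    processed = []
    while limit is None or len(processed) < limit:
        message = partition.fetch(position)
        if message is None:
            break
        handler(message)
        processed.append(message)
        position += 1
        if commit:
            partition.commit(position)  # progress becomes visible to others
    return processed


partition = Partition(["m0", "m1", "m2"])
seen = []

# First consumer processes two messages but never commits, then "fails".
first = consume(partition, seen.append, commit=False, limit=2)

# After the rebalance, the next consumer starts from the committed offset (0).
second = consume(partition, seen.append, commit=True)
```

In this run `m0` and `m1` are handled twice, which is the redelivery described in the first case above.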

For message ordering, each partition is assigned to at most one consumer. Maintaining the consumption progress then guarantees the order of messages within the same partition. Thus:

- When a producer publishes messages whose order matters for a given object, it can tag each message with the object's ID. Kafka hashes the object ID and assigns the message to the partition that matches the hash value. All ordered messages for that object are therefore stored in one partition and pulled by one consumer, so the consumer receives them in chronological order.
- This guarantee holds only while partitions are not reassigned. If a broker node is added or fails, partitions are reassigned. Likewise, if a consumer node is added or fails, the partitions associated with that consumer are reassigned.
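Routing by object ID can be sketched as a hash taken modulo the partition count. The example uses `zlib.crc32` purely as a stand-in hash (Kafka's default Java partitioner uses murmur2), and the function names and sample events are assumptions for illustration.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    """Pick a partition from the key's hash (crc32 is a stand-in hash here)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def route(events, num_partitions):
    """Append each (object_id, payload) event to the log of its partition."""
    logs = defaultdict(list)
    for object_id, payload in events:
        logs[partition_for(object_id, num_partitions)].append((object_id, payload))
    return logs


events = [
    ("order-1", "created"),
    ("order-2", "created"),
    ("order-1", "paid"),
    ("order-1", "shipped"),
]
logs = route(events, num_partitions=4)

# Every event for order-1 sits in one partition, in publish order.
order_1_partition = partition_for("order-1", 4)
order_1_events = [p for k, p in logs[order_1_partition] if k == "order-1"]
```

The same key always hashes to the same partition, so ordering per object is preserved even though different objects are spread across partitions.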

Backup Policy

Summary

- I/O-intensive workloads and large-file operations can be optimized at the operating system level by making good use of mmap and sendfile.
- When many repeated operations occur, they can be processed in batches, trading space for time to obtain greater throughput.
- Distributed systems share a widely used design: scale out horizontally along two dimensions, virtual partitions and physical nodes.
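The batching point can be illustrated with a small accumulator that holds messages in memory and hands them to the transport in groups. This is a sketch under assumptions, not the Kafka producer: the class name `BatchingSender`, the `send` callback, and the batch size of 3 are invented for the example.

```python
class BatchingSender:
    """Buffer messages and pass them on in batches (space traded for time)."""

    def __init__(self, send, batch_size=3):
        self._send = send  # callable that receives a list of messages
        self._batch_size = batch_size
        self._buffer = []

    def publish(self, message):
        self._buffer.append(message)
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self):
        """Send whatever is buffered, even if the batch is not full."""
        if self._buffer:
            self._send(list(self._buffer))
            self._buffer.clear()


calls = []
sender = BatchingSender(calls.append, batch_size=3)
for i in range(7):
    sender.publish(i)
sender.flush()  # push the final partial batch
# calls == [[0, 1, 2], [3, 4, 5], [6]]
```

Seven messages cost three send operations instead of seven, at the price of holding up to one batch in memory.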