Kafka Flink Event time

Event time

In most real-world use cases, messages arrive out of order, so late messages have to be taken into account when processing events. Flink provides a powerful event-time processing API that helps developers handle this and meet their business requirements.

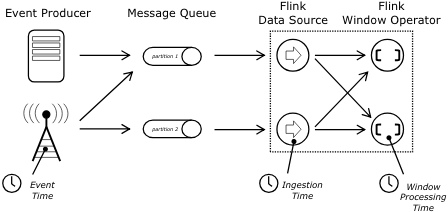

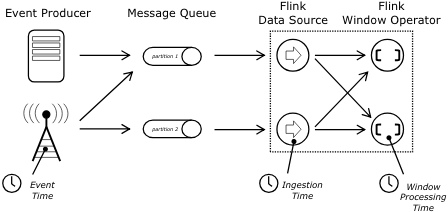

As the figures show, there are three notions of time: 1. Event Time; 2. Ingestion Time; 3. Processing Time.

Event Time is the time when the event occurs in the real world, Ingestion Time is the time when the event is ingested into the system, and Processing Time is the time when the Flink system processes the event.
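To make the difference concrete, here is a minimal sketch in plain Scala (no Flink dependency). The `Event` class, the one-second tumbling window, and the sample data are all assumptions made up for illustration. It counts the same three events per window twice: once by event time and once by arrival time, which stands in for processing time.

```scala
// Hypothetical event: `eventTime` is when it happened, `arrivalTime` is when
// the processor saw it (both in milliseconds).
final case class Event(id: String, eventTime: Long, arrivalTime: Long)

object TimeNotions {
  val windowSizeMs = 1000L

  // Start of the tumbling window that contains the (non-negative) timestamp `ts`.
  def windowStart(ts: Long): Long = ts - (ts % windowSizeMs)

  // Count events per window, using whichever timestamp `timeOf` selects.
  def countPerWindow(events: Seq[Event], timeOf: Event => Long): Map[Long, Int] =
    events.groupBy(e => windowStart(timeOf(e))).map { case (w, es) => w -> es.size }

  // "b" happened in the first second but was delayed and arrived in the third.
  val events = Seq(
    Event("a", eventTime = 100L, arrivalTime = 150L),
    Event("c", eventTime = 1200L, arrivalTime = 1250L),
    Event("b", eventTime = 900L, arrivalTime = 2100L)
  )

  val byEventTime: Map[Long, Int] = countPerWindow(events, _.eventTime)
  val byProcessingTime: Map[Long, Int] = countPerWindow(events, _.arrivalTime)
}
```

Grouped by event time, the delayed event "b" is counted in the window where it actually happened. Grouped by arrival time, it lands in a later window, so the result depends on delivery delays rather than on the data itself.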

Watermark

In general, we choose Event Time as the time basis for processing, so that results reflect when events actually happened and the program behaves as expected.

However, stream processing takes time: an event has to travel from where it is generated, through the source, to the operator. In most cases data reaches the operator in event-time order, but network delays, back pressure, and other factors can cause elements to arrive out of order or late. We cannot wait indefinitely for late elements, so there must be a mechanism that guarantees a window is triggered for computation once a specific point in time has passed. That mechanism is the watermark.
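The idea can be sketched without Flink. The following is a simplified model, not Flink's implementation: the names `WatermarkSketch`, `maxOutOfOrdernessMs`, and `windowSizeMs` are hypothetical, and the watermark is assumed to trail the largest timestamp seen so far by a fixed bound. A window fires once the watermark reaches its last timestamp, and anything that arrives for an already completed window is dropped as too late.

```scala
import scala.collection.mutable

object WatermarkSketch {
  // Assumption: events are tolerated up to this many milliseconds out of order.
  val maxOutOfOrdernessMs = 2000L
  val windowSizeMs = 5000L

  final case class Result(fired: Vector[(Long, Vector[Long])], dropped: Vector[Long])

  // A window [start, start + size) is complete once the watermark has
  // reached its last timestamp.
  private def isComplete(start: Long, watermark: Long): Boolean =
    start + windowSizeMs - 1 <= watermark

  // Feeds non-negative event timestamps in arrival order. Returns the windows
  // that fired (start -> buffered timestamps) and the timestamps dropped as late.
  def run(timestamps: Seq[Long]): Result = {
    val open = mutable.SortedMap.empty[Long, Vector[Long]]
    var fired = Vector.empty[(Long, Vector[Long])]
    var dropped = Vector.empty[Long]
    var maxSeen = Long.MinValue
    var watermark = Long.MinValue

    for (ts <- timestamps) {
      val start = ts - (ts % windowSizeMs)
      if (isComplete(start, watermark)) {
        dropped = dropped :+ ts // its window has already been triggered
      } else {
        open(start) = open.getOrElse(start, Vector.empty[Long]) :+ ts
      }
      // The watermark trails the largest timestamp seen by the allowed bound.
      maxSeen = math.max(maxSeen, ts)
      watermark = maxSeen - maxOutOfOrdernessMs
      // Fire every open window the watermark has passed, oldest first.
      val ready = open.keysIterator.filter(isComplete(_, watermark)).toList
      for (s <- ready) {
        fired = fired :+ (s -> open(s))
        open.remove(s)
      }
    }
    Result(fired, dropped)
  }
}
```

For example, `WatermarkSketch.run(Seq(1000L, 3000L, 2000L, 6000L, 4000L, 7000L, 4500L))` fires the window starting at 0 with the four timestamps 1000, 3000, 2000, and 4000 once 7000 has been seen, and drops 4500 because it arrives after that window was triggered. The out-of-order elements 2000 and 4000 are still included, because they arrived before the watermark passed the end of their window.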

For more information about watermark, see: http://vishnuviswanath.com/flink_eventtime.html

Kafka example

Here Kafka is the data source. We select a field of the message as the event time and configure a watermark so that data is processed in the intended order:

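    // Imports assume Flink 1.7 with the universal Kafka connector.
    import org.apache.flink.streaming.api.TimeCharacteristic
    import org.apache.flink.streaming.api.functions.timestamps.AscendingTimestampExtractor
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

    // MyType, schema, and props are assumed to be defined elsewhere.
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

    val kafkaSource = new FlinkKafkaConsumer[MyType]("myTopic", schema, props)
    // Assign timestamps on the consumer itself, using a field of the message as the event time.
    kafkaSource.assignTimestampsAndWatermarks(new AscendingTimestampExtractor[MyType] {
      def extractAscendingTimestamp(element: MyType): Long = element.eventTimestamp
    })

    val stream: DataStream[MyType] = env.addSource(kafkaSource)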

Illustration:

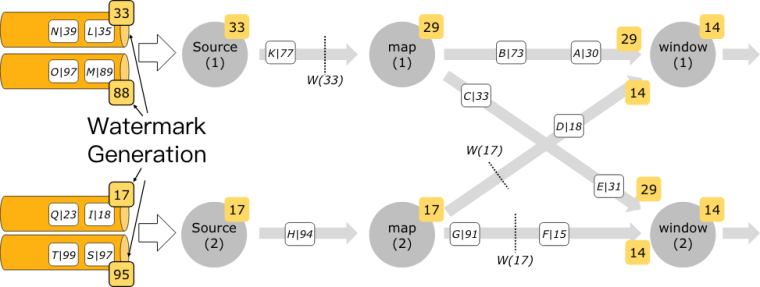

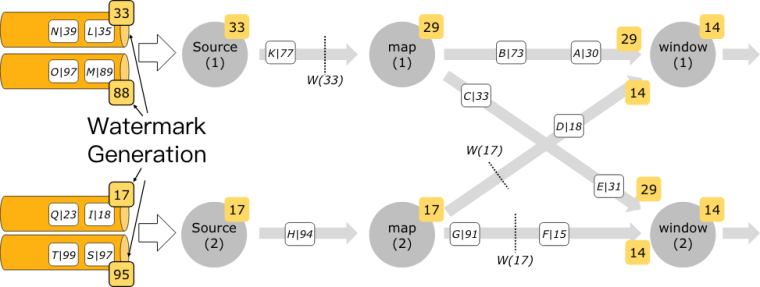

The figures show that each Kafka partition generates its own watermarks, and how those watermarks propagate through the data processing flow in this case.

Caveats:

If the watermark depends on a field of the messages read from Kafka, every topic and partition must have a continuous flow of messages. Otherwise the watermark of the whole application cannot advance, and operators that rely on time, such as window operators, will not fire.

At the time of writing, this problem is expected to be fixed in version 1.8.0: https://issues.apache.org/jira/browse/FLINK-5479

Our cluster runs 1.7.2, so when we use Kafka as the data source we need to make sure that every partition keeps receiving messages.
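Why a single quiet partition holds everything back can be seen in a small plain-Scala model. This is a sketch under stated assumptions, not Flink code: `PartitionWatermarks`, `combined`, and `advance` are hypothetical names, each partition's watermark is simplified to the latest timestamp it has produced, and the watermark the downstream operator sees is taken as the minimum across partitions.

```scala
object PartitionWatermarks {
  // The watermark an operator can act on is the minimum over all input
  // partitions. Requires at least one partition.
  def combined(perPartition: Map[Int, Long]): Long = perPartition.values.min

  // Applies (partition, eventTimestamp) pairs in arrival order and returns the
  // combined watermark after each arrival. A partition that has produced
  // nothing yet stays at Long.MinValue. Every partition id in `arrivals`
  // must be a member of `partitions`.
  def advance(partitions: Set[Int], arrivals: Seq[(Int, Long)]): Seq[Long] = {
    val initial: Map[Int, Long] = partitions.map(p => p -> Long.MinValue).toMap
    arrivals
      .scanLeft(initial) { case (wms, (p, ts)) =>
        wms.updated(p, math.max(wms(p), ts))
      }
      .tail
      .map(combined)
  }
}
```

With two active partitions, `advance(Set(0, 1), Seq((0, 1000L), (1, 900L), (0, 2000L), (1, 2100L)))` ends at a combined watermark of 2000. If partition 1 goes quiet after its first message, as in `advance(Set(0, 1), Seq((0, 1000L), (1, 900L), (0, 2000L), (0, 3000L), (0, 4000L)))`, the combined watermark stays at 900 no matter how far partition 0 advances, so a window ending after 900 would never be triggered.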


This article was written by Chakhsu Lau and is licensed under the Creative Commons Attribution 4.0 International License.
