Across the whole book, memory allocation is the key topic. Coordinating I/O with the CPU is difficult, and so is process synchronization.

Processes

Differences between processes and threads

- A process is the unit of resource allocation; a thread is the unit of scheduling.
- Threads share the resources of their process, including its address space, but each thread has its own stack.
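A small Python sketch can illustrate the sharing rule above: every thread appends to the same list, because it lives in the process's address space, while each thread's loop counter is a local variable in that thread's own call stack. The worker function and its arguments are hypothetical, chosen only for illustration.

```python
import threading

shared = []                  # module-level data: one copy, visible to every thread in the process
lock = threading.Lock()      # guards the shared list while threads append to it

def worker(tid, n):
    local_total = 0          # local variable: private to this thread's own call stack
    for i in range(n):
        local_total += i
    with lock:
        shared.append((tid, local_total))

threads = [threading.Thread(target=worker, args=(t, 5)) for t in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared))        # [(0, 10), (1, 10), (2, 10)]
```

All three results land in the one shared list, while no thread ever sees another thread's `local_total`.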

Process scheduling methods

Process state transition

- When its time slice runs out, a running process moves to the ready state (not the blocked state).
- A process becomes blocked only because it lacks a resource it needs to continue executing.
- A process can enter the running state only from the ready state.
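The three rules above can be encoded as a small transition table. This is a minimal sketch covering only the ready, running, and blocked states; the event names are hypothetical labels, and any transition not listed is rejected.

```python
from enum import Enum

class State(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"

# (current state, event) -> next state; event names are illustrative only
TRANSITIONS = {
    (State.READY, "dispatch"): State.RUNNING,             # only a ready process can start running
    (State.RUNNING, "time_slice_expired"): State.READY,   # preempted but still runnable: ready, not blocked
    (State.RUNNING, "wait_for_resource"): State.BLOCKED,  # lacks a resource needed to continue
    (State.BLOCKED, "resource_available"): State.READY,   # wakes into ready, never straight to running
}

def transition(state, event):
    """Return the next state, or raise ValueError for an illegal transition."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"illegal transition: {state.name} on {event!r}") from None
```

For example, `transition(State.BLOCKED, "dispatch")` raises `ValueError`, because a blocked process must pass through the ready state first.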

Process Synchronization

Memory allocation

- Is a job loaded as a whole (contiguously) or discretely, and is all of it loaded at the start or only the part that is needed?
- Are the memory partitions fixed, or can they change dynamically (be split and merged)?

Fixed partition allocation

Dynamic partition allocation

- First fit: scan the free partitions in address order from the beginning and take the first one that is large enough.
- Next fit: scan in address order, starting from the free partition where the previous allocation was made.
- Best fit: choose the smallest free partition that is large enough for the request.
- Worst fit: choose the largest free partition.
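The four placement policies differ only in which free partition they pick. The sketch below assumes a hypothetical free list of `(start_address, length)` tuples sorted by address and a `start_index` recording where next fit left off; it splits the chosen partition but does not show merging of neighbouring free partitions on release.

```python
def choose_partition(free, size, policy, start_index=0):
    """Return the index in `free` of the partition chosen by `policy`, or None.

    free: list of (start_address, length) tuples sorted by start address.
    start_index: where the previous search stopped (used only by next fit).
    """
    fits = [i for i, (_, length) in enumerate(free) if length >= size]
    if not fits:
        return None
    if policy == "first":
        return fits[0]                                  # lowest address that fits
    if policy == "next":
        later = [i for i in fits if i >= start_index]
        return later[0] if later else fits[0]           # wrap around to the beginning
    if policy == "best":
        return min(fits, key=lambda i: free[i][1])      # smallest partition that fits
    if policy == "worst":
        return max(fits, key=lambda i: free[i][1])      # largest partition
    raise ValueError(f"unknown policy: {policy}")


def allocate(free, size, policy, start_index=0):
    """Carve `size` units out of a free partition; return (address, next_start_index)."""
    i = choose_partition(free, size, policy, start_index)
    if i is None:
        return None, start_index
    start, length = free[i]
    if length == size:
        free.pop(i)                                     # exact fit: the partition disappears
    else:
        free[i] = (start + size, length - size)        # split: the remainder stays free
    return start, i
```

With free partitions `[(0, 60), (100, 30), (200, 100)]` and a request of 25, first fit returns address 0, best fit returns 100, and worst fit returns 200.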

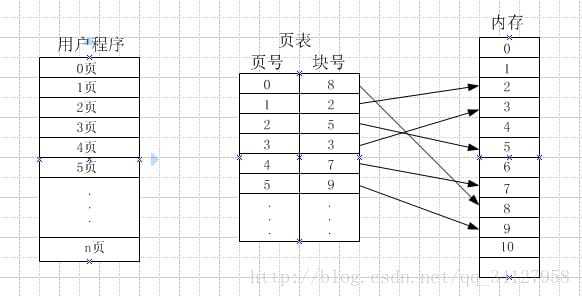

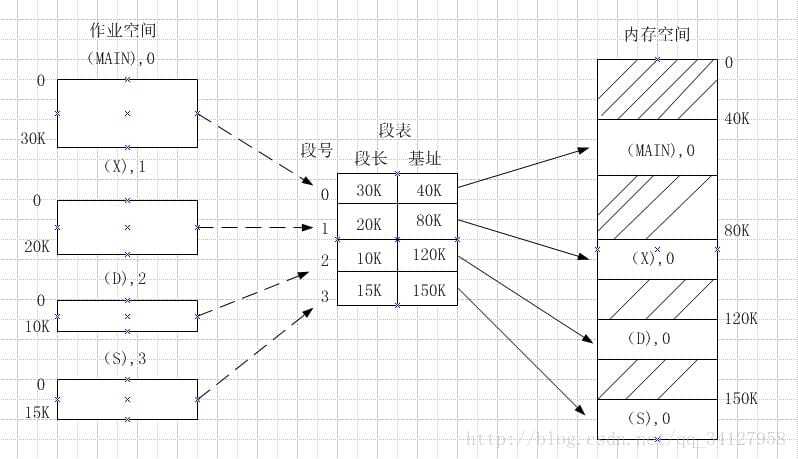

Paged and segmented allocation

Differences between pages and segments

Differences between the page table and the segment table

Demand paging (virtual memory)

- Is the number of physical blocks allocated to a job fixed or dynamic? (page allocation algorithm)
- Which pages of the job should be brought into memory? (page fetch algorithm)
- If all the physical blocks assigned to the job are full and a page must be brought in, which page should be replaced? (page replacement algorithm)
- If the job has a free physical block, where in memory does an incoming page go? (mapping between logical and physical addresses)
- What happens when the contents of a page in memory are modified? (data consistency)
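The mapping question in the list above comes down to splitting a logical address into a page number and an offset, then looking the page up in the page table. This sketch assumes a 4096-byte page size and represents a hypothetical page table as a dict from page number to physical block number, with `None` marking a page that is not yet in memory.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate(logical_addr, page_table, page_size=PAGE_SIZE):
    """Map a logical address to a physical address through a page table."""
    page, offset = divmod(logical_addr, page_size)    # high part: page number, low part: offset
    block = page_table.get(page)
    if block is None:
        # page not resident: under demand paging this triggers a page fault
        raise LookupError(f"page fault on page {page}")
    return block * page_size + offset                 # same offset inside the physical block
```

With the table `{0: 5, 1: None, 2: 9}`, logical address 100 maps to 5 * 4096 + 100 = 20580, while any address in page 1 raises a page fault.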

TLB (translation lookaside buffer, the "fast table"), page table, cache, and memory

- The TLB is held in fast cache memory, while the page table is held in main memory.
- The page table maps a job's page numbers to physical block numbers in memory, and the TLB holds recently used page-table entries. The cache, by contrast, maps blocks of main memory to cache blocks.
- Differences between cache allocation and memory allocation:
  - Cache contents are copies of memory, so consistency with memory must be maintained. Memory contents are loaded from disk (where the job resides) and may differ from the disk copy; when a page is replaced it can simply be written back to disk, so no special consistency mechanism is needed.
  - Jobs are usually given relatively few physical blocks, so the mapping of pages to memory blocks is simple, whereas the mapping between cache and memory is more complex.
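To show how the TLB sits in front of the page table, here is a minimal sketch of a small fully associative TLB. The LRU eviction policy and the dict-based page table are assumptions made for illustration; real TLBs are hardware and their replacement policy varies.

```python
from collections import OrderedDict

class TLB:
    """A tiny cache of page-table entries (page -> block) with LRU eviction (assumed policy)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()     # page -> physical block, least recently used first
        self.hits = 0
        self.misses = 0

    def lookup(self, page, page_table):
        if page in self.entries:
            self.hits += 1
            self.entries.move_to_end(page)       # mark as most recently used
            return self.entries[page]
        self.misses += 1
        block = page_table[page]                 # slower path: consult the page table in memory
        self.entries[page] = block
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)     # evict the least recently used entry
        return block
```

A larger capacity lets more of a job's page mappings stay in the TLB, so fewer lookups fall through to the page table in memory.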

Page allocation algorithm

- Initially, no physical blocks are allocated to the job. The system maintains a linked list of free physical blocks; when a page of the job must be loaded, a free block is taken directly from the list. If the whole of memory is full, a page belonging to another process is swapped out. This is dynamic allocation with global replacement.
- Initially, the job is allocated a certain number of physical blocks, and the system again maintains a linked list of free blocks. When a page of the job must be loaded and the job's own blocks are all in use, one of its own pages is replaced. If the job page-faults frequently and keeps replacing its own blocks, the system steps in and gives it an extra block from the free list; if the job rarely faults, some of its blocks can be taken back. This is dynamic allocation with local replacement.
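The second strategy (dynamic allocation, local replacement) can be sketched as a job that only ever evicts its own pages, plus a periodic check that grows or shrinks its block set. FIFO eviction, the `adjust` function, and the `high`/`low` fault thresholds are all hypothetical choices for illustration, not a specific system's policy.

```python
from collections import deque

class Job:
    """A job that owns some physical blocks and replaces only its own pages (local replacement)."""

    def __init__(self, blocks):
        self.blocks = list(blocks)   # physical block numbers currently owned by this job
        self.resident = deque()      # (page, block) pairs in load order
        self.faults = 0              # page faults since the last adjustment

    def access(self, page):
        """Return True on a page fault, False on a hit."""
        if any(p == page for p, _ in self.resident):
            return False
        self.faults += 1
        used = {b for _, b in self.resident}
        free = [b for b in self.blocks if b not in used]
        if free:
            block = free[0]                       # one of the job's own blocks is still empty
        else:
            _, block = self.resident.popleft()    # evict this job's oldest page (FIFO, assumed)
        self.resident.append((page, block))
        return True


def adjust(job, free_list, high=3, low=0):
    """Hypothetical periodic check: grow a job that faults often, shrink one that rarely faults."""
    if job.faults >= high and free_list:
        job.blocks.append(free_list.pop(0))       # give the job one more block from the free list
    elif job.faults <= low and len(job.blocks) > 1:
        block = job.blocks.pop()                  # take one block back
        # drop any page that was loaded in the reclaimed block
        job.resident = deque((p, b) for p, b in job.resident if b != block)
        free_list.append(block)
    job.faults = 0                                # start a new observation window
```

A job with two blocks that touches four distinct pages faults four times, evicting its own pages each time, and the next `adjust` call then grants it a third block.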

Page fetch algorithm

Mapping relationship

Why is the mapping relationship so complex?

How does the size of the TLB relate to the mapping?

Page replacement algorithm

Data consistency

When data in the cache is modified by a write, how should main memory be kept up to date?

- Write miss:
  - Read the block into the cache first, then modify it in the cache.
  - Modify the data in memory first, then read the block into the cache.
- Write hit:
  - Write-through: the cache and memory are updated at the same time.
  - Write-back: only the cache is updated; the data is written back to memory when the block is replaced.
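The two write-hit policies can be compared by counting memory writes. In this sketch a dict stands in for main memory, each address is treated as its own block, a write miss uses the first miss option above (read into the cache, then modify), and a `dirty` set tracks which cached data has not yet been written back; all of these are simplifying assumptions.

```python
class Cache:
    """A toy cache over dict-backed memory, supporting write-through or write-back."""

    def __init__(self, memory, policy):
        self.memory = memory          # address -> value, standing in for main memory
        self.policy = policy          # "write-through" or "write-back"
        self.lines = {}               # address -> value currently held in the cache
        self.dirty = set()            # addresses modified in cache but not yet in memory
        self.memory_writes = 0

    def write(self, addr, value):
        if addr not in self.lines:                # write miss: read the block into the cache first
            self.lines[addr] = self.memory.get(addr)
        self.lines[addr] = value                  # then modify it in the cache
        if self.policy == "write-through":
            self.memory[addr] = value             # update memory at the same time
            self.memory_writes += 1
        else:
            self.dirty.add(addr)                  # write-back: defer the memory update

    def evict(self, addr):
        if addr in self.dirty:                    # write back only data that was modified
            self.memory[addr] = self.lines[addr]
            self.memory_writes += 1
            self.dirty.discard(addr)
        self.lines.pop(addr, None)
```

Three writes to the same address cost three memory writes under write-through but only one (at eviction) under write-back, which is why write-back needs to track modified blocks.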

Summary

File management

Hard links and soft links

- Soft link: similar to a shortcut on Windows. When the original program is uninstalled, the shortcut remains, but opening it reports that the application cannot be found. The directory entry of a soft link file contains only the path name of the target file and is marked as a link.
- Hard link: the directory entries of the hard link and of the target file refer to the same inode (under index allocation) or the same FCB (under implicit linked allocation). Deleting a file decrements its reference count by 1, and the file itself is removed only when the count reaches 0.
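On a POSIX system the difference can be observed with Python's standard `os` functions. This sketch assumes a Unix-like file system that supports both link types; `demo_links` and the file names are hypothetical.

```python
import os
import tempfile

def demo_links():
    """Create a file, a hard link, and a soft link, then delete the original."""
    results = {}
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "target.txt")
        hard = os.path.join(d, "hard.txt")
        soft = os.path.join(d, "soft.txt")
        with open(target, "w") as f:
            f.write("hello")
        os.link(target, hard)       # hard link: a second directory entry for the same inode
        os.symlink(target, soft)    # soft link: a new file whose content is the target's path
        results["nlink_before"] = os.stat(target).st_nlink      # 2 directory entries
        results["same_inode"] = os.stat(target).st_ino == os.stat(hard).st_ino
        results["readlink_ok"] = os.readlink(soft) == target    # symlink stores only the path
        os.remove(target)           # reference count drops by 1; the data is still reachable
        results["nlink_after"] = os.stat(hard).st_nlink
        with open(hard) as f:
            results["hard_content"] = f.read()
        results["soft_exists"] = os.path.exists(soft)   # follows the link: target is gone
        results["soft_is_link"] = os.path.islink(soft)  # the link file itself still exists
    return results
```

After the original is deleted, the hard link still reads `hello`, while the soft link dangles, just like a shortcut to an uninstalled program.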

Physical allocation of files on disk

Directory entries, inodes, and FCBs

Contiguous allocation

Explicit linked allocation

Implicit linked allocation

Indexed allocation

I/O system

Program interrupt

DMA