- limit_conn limits the number of concurrent TCP connections per IP, or the total number of connections to a server (website)
- limit_rate limits the transfer rate of the response for each request
- limit_req limits the request rate per IP

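The difference between the number of TCP connections and the number of requests

A single TCP connection can carry many requests (keep-alive, and multiplexing over HTTP/2), so the two numbers are not the same.

server { listen 80; listen 443 ssl http2; }

The following command counts the current TCP connections per remote IP and lists the top 20:

netstat -anlp|grep tcp|awk '{print $5}'|awk -F: '{print $1}'|sort|uniq -c|sort -nr|head -n20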
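For scripting, the same count can be sketched in Python. This is only an illustration of what the pipeline does: the function name `top_remote_ips` is made up here, and it assumes the usual `netstat -an` column layout where the fifth column is the foreign address.

```python
from collections import Counter


def top_remote_ips(netstat_output: str, n: int = 20) -> list[tuple[str, int]]:
    """Count TCP connections per remote IP from `netstat -an` style text."""
    counts = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Keep TCP rows only (the shell version greps for "tcp" anywhere
        # in the line; here we check the protocol column).
        if len(fields) < 5 or not fields[0].startswith("tcp"):
            continue
        # Same as awk -F: '{print $1}': the text before the first colon.
        remote_ip = fields[4].split(":", 1)[0]
        counts[remote_ip] += 1
    # Highest counts first, like sort -nr | head -n20.
    return counts.most_common(n)
```

Feeding it the text captured from `netstat -anlp` returns `(ip, count)` pairs, busiest client first.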

limit_req module

limit_req_zone $binary_remote_addr zone=www_sym:10m rate=20r/s;

limit_req zone=www_sym burst=20 nodelay;

- zone: www_sym is the name of the zone. Any name works, as long as limit_req refers to the same one. The 10m after the colon is the size of the zone: the module allocates that much memory to store the request records for each $binary_remote_addr, which it uses to check whether a client exceeds the configured rate.
- rate: the number of requests allowed per second. This is easy to misunderstand and leads to configuration errors. nginx controls the rate at millisecond granularity and enforces it as a constant rate, so 20r/s means that only one request is allowed in each 50 ms interval, not any 20 requests within one second. Real site traffic is bursty, with many concurrent requests arriving in a short time, so burst is needed to absorb it.
- burst: can be understood as a buffer queue. With a value of 20, if 21 requests arrive within 0~50 ms, one is handled right away and the other 20 enter the queue.
- nodelay: also easy to misunderstand. It means the queued requests are not delayed. In the example above, the 20 requests that entered the queue are passed on for processing immediately and returned as soon as the data is available. Note that a slot is not released as soon as its request leaves the queue. Slots are still released one every 50 ms (rate is 20r/s). So if another 21 requests are sent during 51~100 ms, only 2 of them succeed (because only one slot has been released), and the remaining 19 immediately get a 503 error.

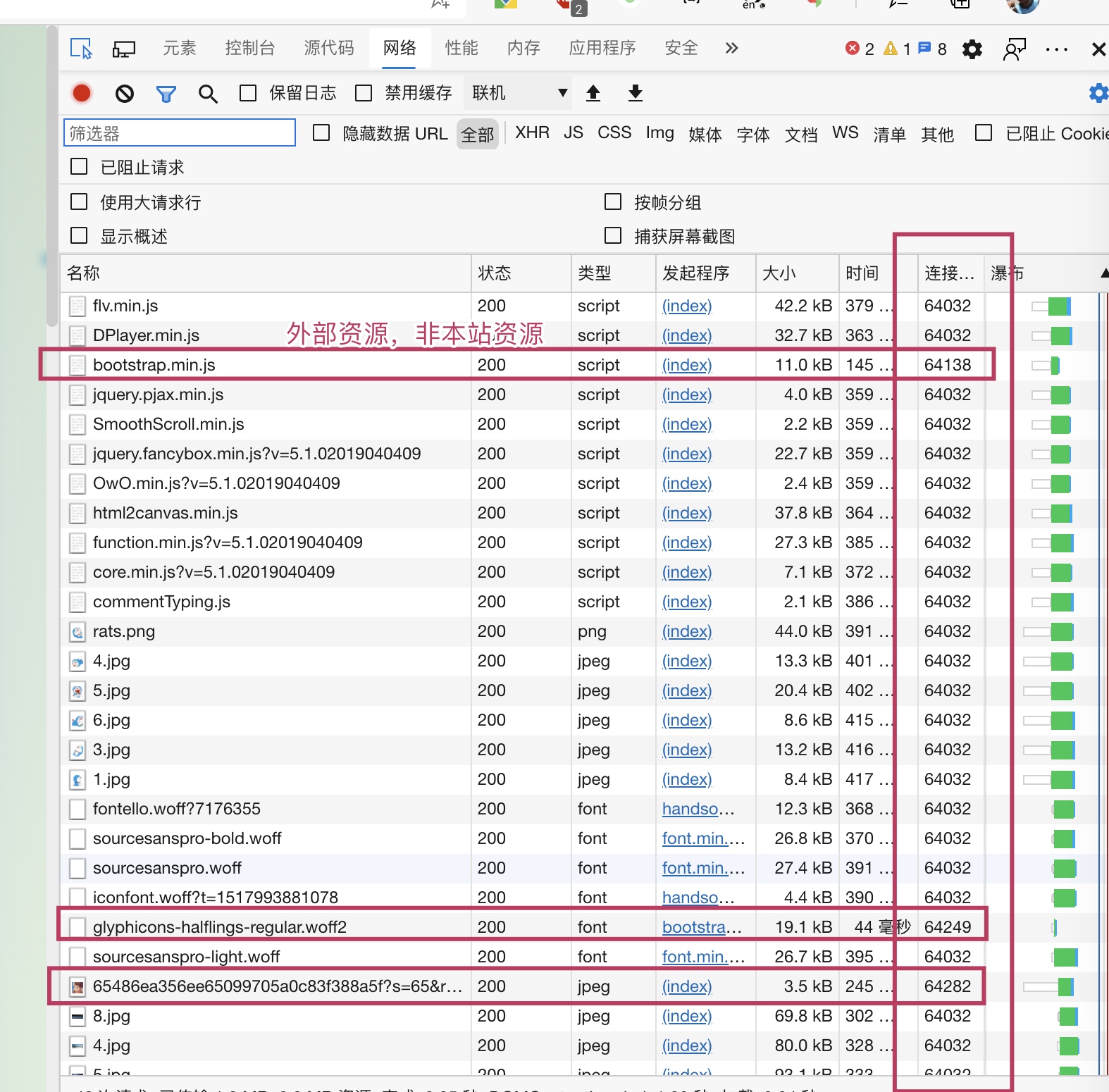
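The rate and burst accounting described above can be sketched as a small simulation. This is a simplified model written for illustration, not nginx source: the class name `LimitReqSketch` and its fields are made up, it tracks a single client key, and it counts the backlog in thousandths of a request so the millisecond arithmetic stays in integers.

```python
class LimitReqSketch:
    """Simplified model of rate/burst accounting for one client key."""

    def __init__(self, rate_per_sec: int, burst: int, nodelay: bool = True):
        self.rate = rate_per_sec * 1000   # milli-requests drained per second
        self.burst = burst * 1000         # queue size in milli-requests
        self.nodelay = nodelay
        self.excess = 0                   # current backlog in milli-requests
        self.last_ms = None               # arrival time of last accepted request

    def request(self, now_ms: int):
        """Return (accepted, delay_ms) for a request arriving at now_ms."""
        if self.last_ms is None:
            excess = 0                    # first request seen for this key
        else:
            elapsed = now_ms - self.last_ms
            # The backlog drains at the configured rate; this request adds 1.
            excess = max(self.excess - self.rate * elapsed // 1000 + 1000, 0)
        if excess > self.burst:
            return False, 0               # queue is full: rejected (503)
        self.excess, self.last_ms = excess, now_ms
        # Without nodelay the request waits for its slot; with it, no wait.
        delay_ms = 0 if self.nodelay else excess * 1000 // self.rate
        return True, delay_ms
```

With `LimitReqSketch(20, 20)`, 22 calls to `request(0)` admit 21 requests (one served directly plus 20 queued) and reject the last one. At 50 ms exactly one more is admitted, because only one slot has drained. How many requests of a later wave get through in this model depends on exactly when each one arrives.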

- Limit the use of nginx service resources
- Ultra detailed analysis of the burst parameter of limit_req module in Nginx

limit_req_log_level error;

2020/08/25 18:09:49 [error] 10215#0: *2445032 limiting requests, excess: 10.700 by zone "www_sym", client: 120.*.3*.29, server: ***.com, request: "GET /RelatedObjectLookups.js HTTP/2.0", host: "***.com", referrer: "https://***/admin"

2022/04/24 20:44:50 [error] 12985#0: *9662214 upstream timed out (110: Connection timed out) while connecting to upstream, client: 111.27.24.183, server: auth.ihewro.com, request: "GET /notice/version HTTP/2.0", upstream: "http://*****:8000/notice/version", host: "auth.ihewro.com", referrer: ""

2022/04/24 21:49:21 [error] 12985#0: *9953796 connect() to unix:/tmp/php-cgi-74.sock failed (11: Resource temporarily unavailable) while connecting to upstream, client: 23.12.64.219, server: www.ihewro.com, request: "GET / HTTP/1.1", upstream: "fastcgi://unix:/tmp/php-cgi-74.sock:", host: "www.ihewro.com", referrer: "https://www.ihewro.com"

limit_conn module

limit_conn_zone $binary_remote_addr zone=perip:10m; limit_conn_zone $server_name zone=perserver:10m;

limit_conn perserver 200; limit_conn perip 20;

limit_conn_log_level error;
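The two limits above apply together: a new connection has to fit under both the per-IP and the per-server count. A minimal sketch of that bookkeeping follows. The class `LimitConnSketch` is hypothetical and only models the counting, not how nginx decides when a connection starts to count.

```python
from collections import defaultdict


class LimitConnSketch:
    """Toy model of the perip and perserver connection counters."""

    def __init__(self, per_ip: int = 20, per_server: int = 200):
        self.per_ip, self.per_server = per_ip, per_server
        self.by_ip = defaultdict(int)       # open connections per client IP
        self.by_server = defaultdict(int)   # open connections per server name

    def open(self, ip: str, server: str) -> bool:
        """Try to admit a connection; False models a rejection."""
        if self.by_ip[ip] >= self.per_ip:
            return False
        if self.by_server[server] >= self.per_server:
            return False
        self.by_ip[ip] += 1
        self.by_server[server] += 1
        return True

    def close(self, ip: str, server: str) -> None:
        """Release the slot held by a finished connection."""
        self.by_ip[ip] -= 1
        self.by_server[server] -= 1
```

A rejected attempt does not consume a slot, and closing a connection frees capacity in both counters at once.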

2020/08/25 18:09:49 [error] 10216#0: *2445033 limiting connections by zone "www_sym", client: 120.2.33.29, server: ***.com, request: "GET /RelatedObjectLookups.js HTTP/2.0", host: "***.com", referrer: "https://***/admin"

limit_rate module

limit_rate 512k;

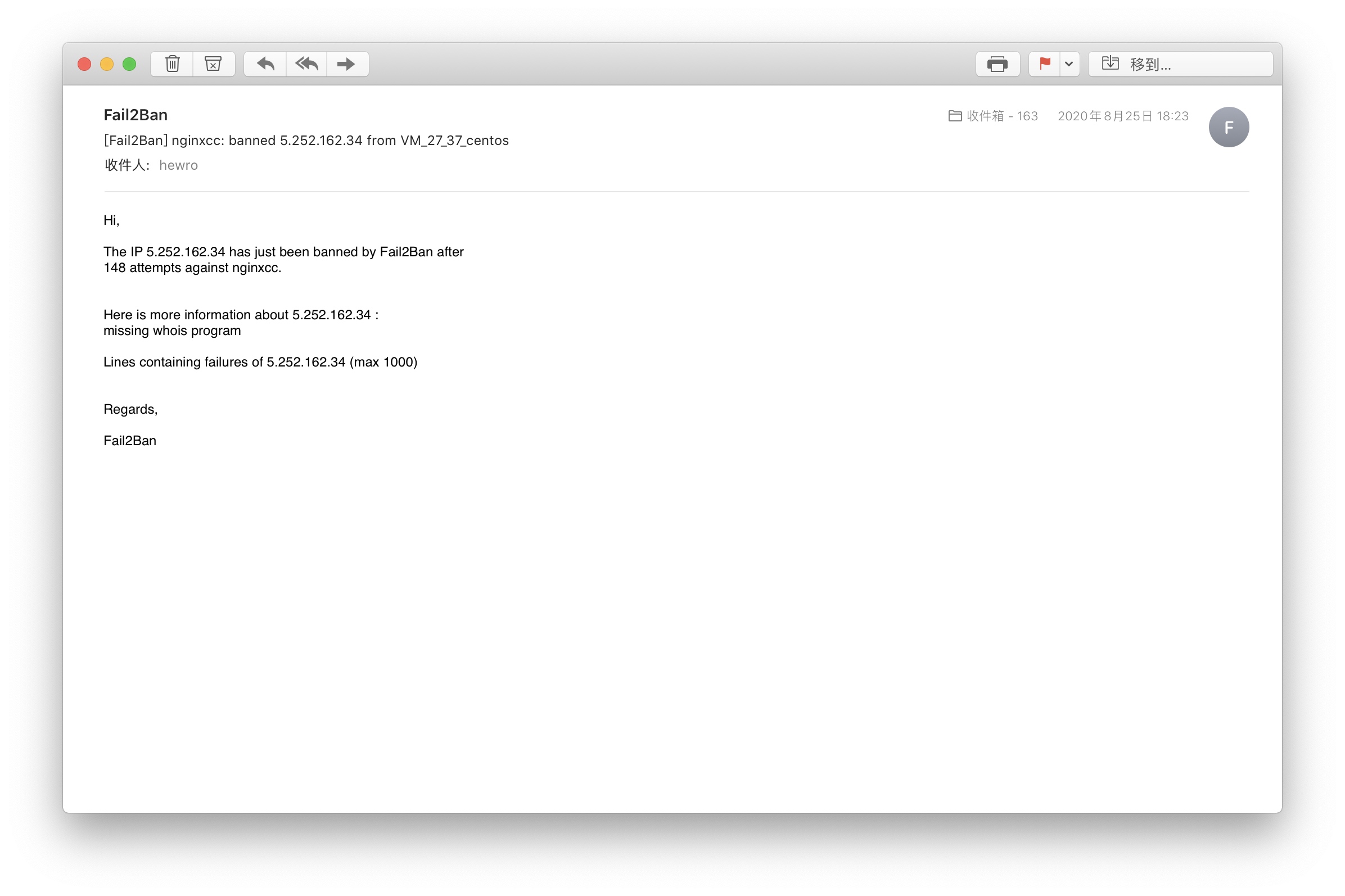
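To get a feel for what `limit_rate 512k` means in practice, the arithmetic can be sketched as follows. The helper names are made up for this example. It assumes the `k` and `m` suffixes mean multiples of 1024 bytes, and that the limit applies per request, so parallel connections from one client multiply the total.

```python
def parse_size(value: str) -> int:
    """Convert an nginx-style size such as '512k' or '1m' to bytes."""
    units = {"k": 1024, "m": 1024 * 1024}
    suffix = value[-1].lower()
    if suffix in units:
        return int(value[:-1]) * units[suffix]
    return int(value)  # no suffix: plain bytes


def min_download_seconds(file_bytes: int, limit_rate: str, connections: int = 1) -> float:
    """Shortest possible transfer time when every connection is capped."""
    return file_bytes / (parse_size(limit_rate) * connections)
```

For a 10 MiB file, `min_download_seconds(10 * 1024 * 1024, "512k")` gives 20 seconds over one connection and 10 seconds when the client splits the download across two.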

Use of fail2ban

install

    # CentOS
    yum install -y fail2ban
    # Ubuntu (apt-based systems)
    sudo apt-get install -y fail2ban

configure

- action.d: the actions to run once the ban condition is met
- filter.d: the filters, which tell fail2ban how to match a line in the log
- jail.local: the jail definitions. Configure one or more jails in this file, giving each a name, the list of log files to monitor, the filter, and the action to take when the condition is met
- jail.conf: the official file with many example jails. You can copy the parts you need straight into jail.local.

use

    [nginxcc]
    enabled = true
    filter = nginx-limit-req
    logpath = /www/wwwlogs/***1.com.error.log
              /www/wwwlogs/***2.com.error.log
    maxretry = 120
    findtime = 60
    bantime = 120000
    action = iptables-allports[name=nginxcc]
             sendmail-whois-lines[name=nginxcc, dest=ihewro@163.com]

- The first nginxcc is the name of the jail
- filter: a filter that ships with fail2ban, located at /etc/fail2ban/filter.d/nginx-limit-req.conf
- logpath: the list of monitored logs. Here I monitor the error log rather than access.log, because under heavy traffic access.log grows so quickly that fail2ban cannot keep up. (I previously saw the log still showing matches for an IP that had already been banned. In theory the matching should be very fast, and I do not know why this happened.) For this to work, limit_req_log_level and limit_conn_log_level must both be set to error.
- findtime = 60 and maxretry = 120 mean that an IP with 120 limit-exceeded log entries within a 60 s window is banned
- bantime is in seconds (120000 s is roughly 33 hours)
- action: the ban is carried out with iptables. fail2ban already ships this action, at /etc/fail2ban/action.d/iptables-allports.conf
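The findtime and maxretry rule above amounts to a sliding window per IP. The sketch below shows that idea only. `JailWindowSketch` is a made-up name and this is not fail2ban's implementation. Each call stands for one matched log line.

```python
from collections import deque


class JailWindowSketch:
    """Sliding-window failure counter, one window per IP."""

    def __init__(self, maxretry: int = 120, findtime: int = 60):
        self.maxretry, self.findtime = maxretry, findtime
        self.failures = {}  # ip -> deque of failure timestamps in seconds

    def record_failure(self, ip: str, ts: float) -> bool:
        """Record one matched log line; True means the IP should be banned."""
        window = self.failures.setdefault(ip, deque())
        window.append(ts)
        # Forget failures that are older than findtime seconds.
        while window and ts - window[0] > self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            window.clear()  # start counting afresh after a ban
            return True
        return False
```

With the values from the jail above, an IP has to produce 120 matching error-log lines inside any 60-second window before `record_failure` returns `True`. Slower offenders never accumulate enough entries, because old ones fall out of the window.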

failregex = ^\s*\[[a-z]+\] \d+#\d+: \*\d+ .*, client: <HOST>,

fail2ban-regex /etc/fail2ban/filter.d/test.log /etc/fail2ban/filter.d/nginx-limit-req.conf --print-all-matched
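To see what the failregex captures without running fail2ban, the pattern can be tried in Python. Two assumptions are made here and are not part of fail2ban: `<HOST>` is replaced by a simplified IPv4-only group, and the leading timestamp is stripped by hand, since fail2ban removes the date before applying the pattern.

```python
import re

# Assumed stand-in for fail2ban's date detection: nginx error-log timestamp.
TIMESTAMP = re.compile(r"^\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2} ")

# The failregex from the text, with <HOST> swapped for a simple IPv4 group.
FAILREGEX = re.compile(
    r"^\s*\[[a-z]+\] \d+#\d+: \*\d+ .*, client: (?P<host>\d{1,3}(?:\.\d{1,3}){3}),"
)


def extract_client(log_line: str):
    """Return the client IP if the line matches the pattern, else None."""
    rest = TIMESTAMP.sub("", log_line, count=1)
    match = FAILREGEX.search(rest)
    return match.group("host") if match else None
```

Because the pattern uses `.*` between the connection number and `client:`, it matches any error-log line that carries a client field, including the upstream timeout lines shown earlier, not only the limiting messages.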

    [INCLUDES]
    before = iptables-common.conf

    [Definition]
    actionstart = <iptables> -N f2b-<name>
                  <iptables> -A f2b-<name> -j <returntype>
                  <iptables> -I <chain> -p <protocol> -j f2b-<name>
    actionstop = <iptables> -D <chain> -p <protocol> -j f2b-<name>
                 <actionflush>
                 <iptables> -X f2b-<name>
    actioncheck = <iptables> -n -L <chain> | grep -q 'f2b-<name>[ \t]'
    actionban = <iptables> -I f2b-<name> 1 -s <ip> -j <blocktype> && service iptables save
    actionunban = <iptables> -D f2b-<name> -s <ip> -j <blocktype> && service iptables save

    [Init]

view

    # Follow the filter and ban activity of the jails
    tail -f /var/log/fail2ban.log
    # Start
    systemctl start fail2ban
    # Stop
    systemctl stop fail2ban
    # Restart
    systemctl restart fail2ban
    # Enable at boot
    systemctl enable fail2ban
    # Check the fail2ban service journal (mainly for troubleshooting errors)
    journalctl -r -u fail2ban.service
    # List the jails
    fail2ban-client status
    # Show the ban status of one jail
    fail2ban-client status nginxcc
    # Unban an IP in a jail
    fail2ban-client set nginxcc unbanip 192.168.1.115
    # Manually ban an IP
    fail2ban-client set nginxcc banip 192.168.1.115