What was the hottest technology field in 2020? Without doubt, audio and video. The rapid growth of remote work and online education in 2020 was inseparable from audio and video technology. Video conferencing, online teaching, and live-streamed entertainment are typical application scenarios.

A richer variety of use cases requires us to offer more configurable parameters, such as resolution, frame rate, and bit rate, to deliver a better user experience. This article focuses on resolution.

How to implement a custom encoding resolution

Let's first look at the definition of resolution. Resolution is a parameter that measures the amount of pixel data in an image, and it is a key indicator of image or video quality. The higher the resolution, the larger the image (in bytes) and the better the image quality. For a 1080p video stream in YUV I420 format, which stores 1.5 bytes per pixel, one frame occupies 1920x1080x1.5x8/1024/1024 ≈ 23.73 Mbit; at 30 frames per second, one second of video is 30x23.73 ≈ 711.9 Mbit. Such a volume of raw data would require an enormous bit rate, so the video must be compressed (encoded) before transmission. We therefore distinguish two resolutions: the resolution of the raw data produced by the capture device is called the **acquisition resolution**, and the resolution of the data actually fed to the encoder is called the **encoding resolution**.
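To make the arithmetic above concrete, here is a small C++ sketch that computes the raw size of an I420 frame and the resulting uncompressed bit rate. The function names are hypothetical helpers for illustration, and, like the text, the sketch counts 1 Mbit as 1024 × 1024 bits.

```cpp
#include <cassert>
#include <cstdint>

// Bits in one raw I420 frame: a full-resolution Y plane plus two
// quarter-resolution chroma planes (U and V), i.e. 1.5 bytes per pixel.
int64_t I420FrameBits(int width, int height) {
  assert(width > 0 && height > 0);
  const int64_t luma_bytes = static_cast<int64_t>(width) * height;
  const int64_t chroma_bytes =
      2 * static_cast<int64_t>((width + 1) / 2) * ((height + 1) / 2);
  return (luma_bytes + chroma_bytes) * 8;
}

// Converts bits to "Mbit" the way the text does (divide by 1024 * 1024).
double ToMbit(int64_t bits) {
  return static_cast<double>(bits) / (1024.0 * 1024.0);
}

// Uncompressed data produced per second at the given frame rate, in Mbit.
double RawMbitPerSecond(int width, int height, int fps) {
  return ToMbit(I420FrameBits(width, height)) * fps;
}
```

For 1080p at 30 fps this gives about 711.9 Mbit per second of raw video, matching the figure above.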

Whether the picture is sharp and correctly proportioned directly affects the user experience. Camera acquisition resolutions are limited, and sometimes the resolution we want cannot be captured directly. In that case, the ability to configure an appropriate encoding resolution for the scenario is crucial. **How to convert the captured video into the desired encoding resolution for transmission** is the topic of this article.

WebRTC is Google's powerful open-source real-time audio and video project, and many developers build real-time audio and video communication solutions on top of it. WebRTC's modules are well abstracted and decoupled, which makes secondary development straightforward. To build a real-time audio and video solution, we need to understand WebRTC's design and code modules well enough to extend them. In this article, we describe how to implement a custom encoding resolution based on WebRTC Release 72.

First, let's consider the following questions:

- What is the pipeline of video data from acquisition to encoding?

- How to select the appropriate acquisition resolution according to the set encoding resolution?

- How can I get the desired encoding resolution?

The rest of this article addresses these three questions in turn.

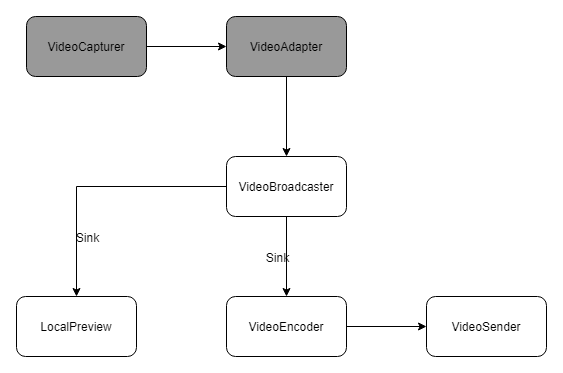

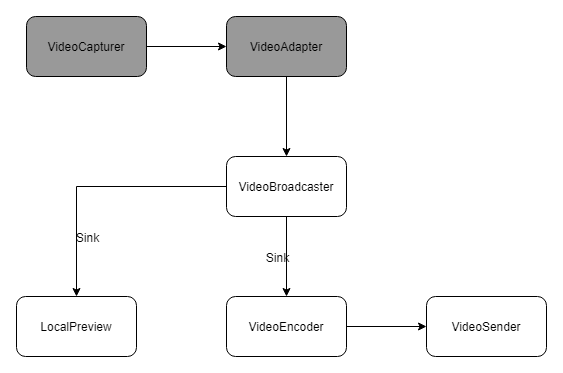

Pipeline of video data

First, let's look at the video data pipeline. Video data is produced by VideoCapture, which captures the frames; VideoAdapter then processes them, and VideoSource's VideoBroadcaster distributes them to the registered VideoSinks. The sinks are the encoder (Encoder Sink) and the local preview (Preview Sink).

For resolution, the flow is as follows: the desired resolution is set on VideoCapture, which selects an appropriate resolution to capture at. VideoAdapter then compares the raw acquisition resolution with the desired one; if they differ, the frame is scaled and cropped to the encoding resolution. The resulting data is sent to the encoder, encoded, and transmitted.
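The pipeline above can be modeled with a few toy classes. This is a minimal sketch, not WebRTC code: `Frame`, `FrameSink`, `Broadcaster`, `Adapter`, and `RecordingSink` are hypothetical stand-ins for the captured frame, the sinks, VideoBroadcaster, and VideoAdapter, and the adapter only reports the output size instead of actually cropping and scaling pixels.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-ins only; these are not WebRTC's real classes.
struct Frame {
  int width;
  int height;
};

// Stand-in for a sink such as the encoder sink or the local preview sink.
class FrameSink {
 public:
  virtual ~FrameSink() = default;
  virtual void OnFrame(const Frame& frame) = 0;
};

// Stand-in for VideoBroadcaster: forwards each frame to every registered sink.
class Broadcaster {
 public:
  void AddSink(FrameSink* sink) {
    assert(sink != nullptr);
    sinks_.push_back(sink);
  }
  void OnFrame(const Frame& frame) {
    for (FrameSink* sink : sinks_) sink->OnFrame(frame);
  }

 private:
  std::vector<FrameSink*> sinks_;
};

// Stand-in for VideoAdapter: decides the output (encoding) size. A real
// adapter would also crop and scale the pixels; this one only reports sizes.
class Adapter {
 public:
  Adapter(int out_width, int out_height)
      : out_width_(out_width), out_height_(out_height) {}
  Frame Adapt(const Frame& captured) const {
    if (captured.width == out_width_ && captured.height == out_height_)
      return captured;  // Already at the encoding resolution.
    return Frame{out_width_, out_height_};
  }

 private:
  int out_width_;
  int out_height_;
};

// Capture -> adapt -> broadcast to sinks.
void DeliverCapturedFrame(const Frame& captured, const Adapter& adapter,
                          Broadcaster& broadcaster) {
  broadcaster.OnFrame(adapter.Adapt(captured));
}

// Demo sink that records the last frame it received.
class RecordingSink : public FrameSink {
 public:
  void OnFrame(const Frame& frame) override {
    last = frame;
    ++count;
  }
  Frame last{0, 0};
  int count = 0;
};
```

Delivering a 1440x1080 capture through an `Adapter` configured for 800x800 hands both the encoder sink and the preview sink an 800x800 frame.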

Here are two key issues:

- How does VideoCapture choose the right acquisition resolution?

- How does the VideoAdapter convert the acquisition resolution to the encoding resolution?

How to select the appropriate acquisition resolution

Selection of acquisition resolution

WebRTC abstracts video capture into a base class, VideoCapturer (videocapturer.cc), which this article refers to as VideoCapture. You set parameters on it, such as video resolution, frame rate, and supported pixel formats; VideoCapture then computes the best capture format for those parameters and uses it to call each platform's VDM (Video Device Module). The relevant declarations are as follows:

The code is extracted from src/media/base/videocapturer.h in WebRTC:

```cpp
// VideoCapturer.h

// Iterates over all capture formats supported by the device, calls
// GetFormatDistance() on each, and selects the format with the smallest
// distance.
bool GetBestCaptureFormat(const VideoFormat& desired, VideoFormat* best_format);

// Computes the distance between the desired format and a supported format.
int64_t GetFormatDistance(const VideoFormat& desired,
                          const VideoFormat& supported);

// Sets the formats supported by the capture device: fps, resolution, and
// pixel format (NV12, I420, MJPEG, etc.).
void SetSupportedFormats(const std::vector<VideoFormat>& formats);
```

Depending on the parameters, GetBestCaptureFormat() sometimes cannot find a capture format that closely matches our settings. Capture capabilities vary by device: native capture on iOS, Android, PC, and Mac and external USB cameras each support different resolutions, and external USB cameras vary especially widely. We therefore need to adjust GetFormatDistance() slightly to meet our needs. The following sections explain how.
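The selection step that GetBestCaptureFormat() performs, scoring every supported format and keeping the one with the smallest distance, can be sketched independently of WebRTC. `Format`, `DistanceFn`, and `PickBestFormat` are hypothetical names; the distance function is a parameter so that either the original or the modified scoring rule can be plugged in.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <functional>
#include <vector>

// Hypothetical minimal format type (WebRTC's VideoFormat also carries an
// interval and a fourcc).
struct Format {
  int width;
  int height;
  int fps;
};

using DistanceFn =
    std::function<int64_t(const Format& desired, const Format& supported)>;

// Scores every supported format and keeps the one with the smallest distance.
// Returns false if the device reports no formats at all.
bool PickBestFormat(const Format& desired, const std::vector<Format>& supported,
                    const DistanceFn& distance, Format* best) {
  assert(best != nullptr);
  bool found = false;
  int64_t best_distance = 0;
  for (const Format& candidate : supported) {
    const int64_t d = distance(desired, candidate);
    if (!found || d < best_distance) {
      found = true;
      best_distance = d;
      *best = candidate;
    }
  }
  return found;
}
```

Ties keep the earlier format, so the order of the supported list acts as a final tie-breaker in this sketch.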

Source code analysis of selection strategy

Let's first analyze the source code of GetFormatDistance() and extract some code:

The code is extracted from src/media/base/videocapturer.cc in WebRTC:

```cpp
// Get the distance between the supported and desired formats.
int64_t VideoCapturer::GetFormatDistance(const VideoFormat& desired,
                                         const VideoFormat& supported) {
  // ... (some code omitted; delta_fourcc is computed here: it is the
  // preference rank of the supported pixel format, so if NV12 is preferred,
  // NV12 wins among formats with the same resolution and frame rate)

  // Check resolution and fps.
  int desired_width = desired.width;    // Encoding resolution width
  int desired_height = desired.height;  // Encoding resolution height
  int64_t delta_w = supported.width - desired_width;  // Width difference
  // Frame rate supported by the capture device.
  float supported_fps = VideoFormat::IntervalToFpsFloat(supported.interval);
  // Frame rate difference.
  float delta_fps =
      supported_fps - VideoFormat::IntervalToFpsFloat(desired.interval);
  // Height the supported format would have at the desired aspect ratio.
  // Capture devices generally report width > height.
  int64_t aspect_h = desired_width
                         ? supported.width * desired_height / desired_width
                         : desired_height;
  int64_t delta_h = supported.height - aspect_h;  // Height difference

  // ... (degradation policy omitted: it handles devices whose supported
  // resolution or frame rate falls short of the requested one)

  int64_t distance = 0;
  // Bit shifts need an integer, so the float fps difference is converted.
  int64_t idelta_fps = static_cast<int64_t>(delta_fps);
  distance |=
      (delta_w << 28) | (delta_h << 16) | (idelta_fps << 8) | delta_fourcc;
  return distance;
}
```

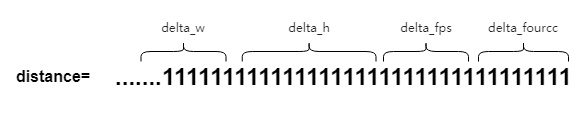

The key quantity is the distance. In WebRTC, the distance is a score, computed by a fixed algorithm, that measures how far a format supported by the device is from the desired capture format. The smaller the distance, the closer the supported format is to the desired one; a distance of 0 means an exact match.

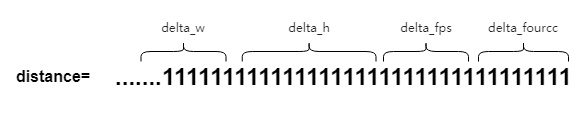

The distance is built from four parts: delta_w, delta_h, delta_fps, and delta_fourcc. They are packed into different bit ranges (delta_w << 28, delta_h << 16, delta_fps << 8, and delta_fourcc in the lowest bits), so delta_w (width difference) carries the most weight, followed by delta_h (height difference), delta_fps (frame rate difference), and finally delta_fourcc (pixel format). As a result, width dominates and height counts for too little: a format whose width is close to the target wins even when its height is far off, so the format that best matches the requested resolution overall may not be selected.

Example:

Take an iPhone XS Max with a requested format of 800x800 at 10 fps. Below are the distances of some of its capture formats. The original GetFormatDistance() algorithm does not meet our needs: we want 800x800, but as the log below shows, the best match is 960x540.

```
Supported NV12 192x144x10 distance 489635708928
Supported NV12 352x288x10 distance 360789835776
Supported NV12 480x360x10 distance 257721630720
Supported NV12 640x480x10 distance 128880476160
Supported NV12 960x540x10 distance 43032248320
Supported NV12 1024x768x10 distance 60179873792
Supported NV12 1280x720x10 distance 128959119360
Supported NV12 1440x1080x10 distance 171869470720
Supported NV12 1920x1080x10 distance 300812861440
Supported NV12 1920x1440x10 distance 300742082560
Supported NV12 3088x2316x10 distance 614332104704
Best NV12 960x540x10 distance 43032248320
```
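The logged distances can be reproduced with a simplified version of the original packing. This sketch assumes equal frame rates and pixel formats (so delta_fps and delta_fourcc are 0, as in the log) and assumes the omitted degradation policy multiplies a negative width or height difference by 3; with that factor the function matches the logged values, for example 43032248320 for 960x540. `OriginalResolutionDistance` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>

// Simplified reconstruction of the ORIGINAL distance packing (resolution part
// only). Assumption: differences where the supported format falls short are
// multiplied by 3, standing in for the omitted degradation policy.
int64_t OriginalResolutionDistance(int desired_w, int desired_h,
                                   int supported_w, int supported_h) {
  assert(desired_w >= 0 && desired_h >= 0);
  int64_t delta_w = supported_w - desired_w;
  // Height the supported width "should" have at the desired aspect ratio.
  const int64_t aspect_h =
      desired_w ? static_cast<int64_t>(supported_w) * desired_h / desired_w
                : desired_h;
  int64_t delta_h = supported_h - aspect_h;
  const int64_t kDownPenalty = -3;  // assumed factor
  if (delta_w < 0) delta_w *= kDownPenalty;
  if (delta_h < 0) delta_h *= kDownPenalty;
  // Width sits in the highest bits, so it dominates every comparison.
  return (delta_w << 28) | (delta_h << 16);
}
```

Because delta_w occupies the top bits, 960x540 (width off by 160) beats 1440x1080 (width off by 640) even though 540 is far from the requested height.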

Adjusting the selection policy

Based on this analysis, to obtain the resolution we want, we need to adjust GetFormatDistance() so that, when no pixel format is specified, resolution carries the highest weight, frame rate the second, and pixel format the lowest. The modified code is as follows:

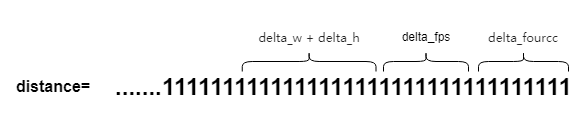

```cpp
int64_t VideoCapturer::GetFormatDistance(const VideoFormat& desired,
                                         const VideoFormat& supported) {
  // ... (some code omitted, including the declarations of distance and
  // delta_fourcc)

  // Check resolution and fps.
  int desired_width = desired.width;    // Encoding resolution width
  int desired_height = desired.height;  // Encoding resolution height
  int64_t delta_w = supported.width - desired_width;
  int64_t delta_h = supported.height - desired_height;
  int64_t delta_fps = supported.framerate() - desired.framerate();
  // Width and height now count equally, as absolute differences.
  distance = std::abs(delta_w) + std::abs(delta_h);

  // ... (degradation policy omitted: for example, if 1080p is requested but
  // the camera supports at most 720p, the request must be downgraded)

  distance = (distance << 16 | std::abs(delta_fps) << 8 | delta_fourcc);
  return distance;
}
```

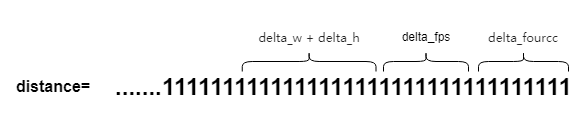

After the modification, the distance has three parts: resolution (|delta_w| + |delta_h|), frame rate (delta_fps), and pixel format (delta_fourcc). The resolution term carries the most weight, followed by delta_fps and then delta_fourcc.

Example:

Again taking an iPhone XS Max with a requested format of 800x800 at 10 fps, here are the distances of some capture formats after GetFormatDistance() is modified. We want 800x800, and the best match is now 1440x1080, from which 800x800 can be obtained by scaling and cropping, as expected. (If the resolution does not need to be that precise, you can adjust the degradation policy to select 1024x768 instead.)

```
Supported NV12 192x144x10 distance 828375040
Supported NV12 352x288x10 distance 629145600
Supported NV12 480x360x10 distance 498073600
Supported NV12 640x480x10 distance 314572800
Supported NV12 960x540x10 distance 275251200
Supported NV12 1024x768x10 distance 167772160
Supported NV12 1280x720x10 distance 367001600
Supported NV12 1440x1080x10 distance 60293120
Supported NV12 1920x1080x10 distance 91750400
Supported NV12 1920x1440x10 distance 115343360
Supported NV12 3088x2316x10 distance 249298944
Best NV12 1440x1080x10 distance 60293120
```
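A similar simplified sketch of the modified rule reproduces the second log. The resolution term is the sum of the absolute width and height differences, shifted above the frame rate and pixel format terms. The source omits the degradation policy; the logged values are consistent with the resolution term being multiplied by 10 whenever the supported format is smaller than the target in either dimension, so the sketch assumes that factor. `ModifiedDistance` and `kSmallerPenalty` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Simplified version of the MODIFIED distance: resolution first, then frame
// rate, then pixel format. Assumption (inferred from the log, not shown in the
// source): formats smaller than the target get their resolution term x10.
int64_t ModifiedDistance(int desired_w, int desired_h, int desired_fps,
                         int supported_w, int supported_h, int supported_fps,
                         int64_t delta_fourcc) {
  assert(delta_fourcc >= 0 && delta_fourcc < 256);
  int64_t resolution =
      std::abs(static_cast<int64_t>(supported_w) - desired_w) +
      std::abs(static_cast<int64_t>(supported_h) - desired_h);
  const int64_t kSmallerPenalty = 10;  // assumed factor
  if (supported_w < desired_w || supported_h < desired_h) {
    resolution *= kSmallerPenalty;
  }
  const int64_t delta_fps =
      std::abs(static_cast<int64_t>(supported_fps) - desired_fps);
  return (resolution << 16) | (delta_fps << 8) | delta_fourcc;
}
```

With the penalty, 1024x768 (only 256 pixels off in total) still loses to 1440x1080, which is why relaxing the degradation policy would make 1024x768 the pick.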

How to convert the acquisition resolution to the encoding resolution

Once captured, the video data is processed by VideoAdapter (a WebRTC abstraction) and then distributed to the corresponding sinks (also a WebRTC abstraction). We slightly modify VideoAdapter to compute the parameters needed for scaling and cropping, and then use libyuv to scale the video data and crop it to the encoding resolution. To preserve as much of the picture as possible, we scale first and then, if the aspect ratios differ, crop away the excess pixels. We focus on two questions here:

- In the example above, we want 800x800, but the best acquisition resolution is 1440x1080. How do we get from 1440x1080 to the configured encoding resolution of 800x800?

- On its way from VideoCapture to the VideoSinks, the video data passes through VideoAdapter. What exactly does VideoAdapter do?

Now let's make a concrete analysis of these two problems. Let's first understand what VideoAdapter is.

Introduction to VideoAdapter

WebRTC describes the VideoAdapter as follows:

VideoAdapter adapts an input video frame to an output frame based on the specified input and output formats. The adaptation includes dropping frames to reduce frame rate and scaling frames. VideoAdapter is thread safe.

In other words, VideoAdapter is an input/output control module for video data that can control and reduce the frame rate and resolution. In VQC (Video Quality Control), configuring VideoAdapter lets you dynamically lower the frame rate and scale down the resolution when bandwidth is low or CPU load is high, keeping the video smooth and improving the user experience.
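The frame-dropping half of this description can be illustrated with a simple rate limiter. This is a hypothetical, simplified sketch, not WebRTC's VideoAdapter logic: `FrameRateLimiter` keeps a frame only when its timestamp reaches the next allowed output time, and resynchronizes if frames arrive far behind schedule.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical frame dropper illustrating frame-rate reduction.
class FrameRateLimiter {
 public:
  explicit FrameRateLimiter(int max_fps)
      : interval_ns_(max_fps > 0 ? 1000000000LL / max_fps : 0) {}

  // Returns true if the frame with this capture timestamp should be kept.
  bool KeepFrame(int64_t timestamp_ns) {
    if (interval_ns_ <= 0) return true;  // No limit configured.
    if (has_next_ && timestamp_ns < next_ns_) return false;  // Too early.
    // First frame, or more than one interval behind schedule: resync to now.
    if (!has_next_ || timestamp_ns - next_ns_ >= interval_ns_) {
      next_ns_ = timestamp_ns;
    }
    next_ns_ += interval_ns_;
    has_next_ = true;
    return true;
  }

 private:
  int64_t interval_ns_;
  int64_t next_ns_ = 0;
  bool has_next_ = false;
};
```

Feeding it 30 frames captured at 30 fps with a 15 fps limit keeps every other frame, 15 in total.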

From src/media/base/videoadapter.h:

```cpp
// VideoAdapter.h
bool AdaptFrameResolution(int in_width,
                          int in_height,
                          int64_t in_timestamp_ns,
                          int* cropped_width,
                          int* cropped_height,
                          int* out_width,
                          int* out_height);

void OnOutputFormatRequest(
    const absl::optional<std::pair<int, int>>& target_aspect_ratio,
    const absl::optional<int>& max_pixel_count,
    const absl::optional<int>& max_fps);

void OnOutputFormatRequest(const absl::optional<VideoFormat>& format);
```

Source code analysis of VideoAdapter

In VideoAdapter, AdaptFrameResolution() is called with the desired format to compute cropped_width, cropped_height, out_width, and out_height, the parameters for cropping and scaling from the acquisition resolution to the encoding resolution. WebRTC's stock AdaptFrameResolution() derives the scale factor from the pixel count (area), so it cannot guarantee an exact output width and height:

From src/media/base/videoadapter.cc:

```cpp
bool VideoAdapter::AdaptFrameResolution(int in_width,
                                        int in_height,
                                        int64_t in_timestamp_ns,
                                        int* cropped_width,
                                        int* cropped_height,
                                        int* out_width,
                                        int* out_height) {
  // ... (some code omitted)

  // Calculate how the input should be cropped.
  if (!target_aspect_ratio || target_aspect_ratio->first <= 0 ||
      target_aspect_ratio->second <= 0) {
    *cropped_width = in_width;
    *cropped_height = in_height;
  } else {
    const float requested_aspect =
        target_aspect_ratio->first /
        static_cast<float>(target_aspect_ratio->second);
    *cropped_width =
        std::min(in_width, static_cast<int>(in_height * requested_aspect));
    *cropped_height =
        std::min(in_height, static_cast<int>(in_width / requested_aspect));
  }

  // ... (omitted: compute the VQC scaling factor, const Fraction scale)

  // Calculate final output size.
  *out_width = *cropped_width / scale.denominator * scale.numerator;
  *out_height = *cropped_height / scale.denominator * scale.numerator;

  // ... (some code omitted)
  return true;
}
```

Example:

Again take an iPhone XS Max at 800x800 and 10 fps: the encoding resolution is set to 800x800 and the acquisition resolution is 1440x1080. The original algorithm yields 720x720, which is not what we want.

VideoAdapter Adjustment

VideoAdapter is an important part of VQC (the video quality control module). VQC relies mainly on VideoAdapter to control the frame rate and scale the resolution, so any modification must take its impact on VQC into account.

In order to accurately obtain the desired resolution without affecting the control of the VQC module on resolution, we make the following adjustments to AdaptFrameResolution():

```cpp
bool VideoAdapter::AdaptFrameResolution(int in_width,
                                        int in_height,
                                        int64_t in_timestamp_ns,
                                        int* cropped_width,
                                        int* cropped_height,
                                        int* out_width,
                                        int* out_height) {
  // ... (some code omitted)

  // Is the input at least as wide, relative to its height, as the target?
  bool in_more =
      (static_cast<float>(in_width) / static_cast<float>(in_height)) >=
      (static_cast<float>(desired_width_) /
       static_cast<float>(desired_height_));
  if (in_more) {
    // Keep the full height and crop the width to the target aspect ratio.
    *cropped_height = in_height;
    *cropped_width = *cropped_height * desired_width_ / desired_height_;
  } else {
    // Keep the full width and crop the height to the target aspect ratio.
    *cropped_width = in_width;
    *cropped_height = *cropped_width * desired_height_ / desired_width_;
  }
  // Scale the cropped region to exactly the desired encoding resolution.
  *out_width = desired_width_;
  *out_height = desired_height_;

  // ... (some code omitted)
  return true;
}
```

Example:

Again take an iPhone XS Max at 800x800 and 10 fps: the encoding resolution is set to 800x800 and the acquisition resolution is 1440x1080. Since 1440x1080 is wider than 1:1, the adjusted algorithm keeps the full height and crops the width to 1080, then scales the 1080x1080 region to 800x800. The resulting encoding resolution is 800x800, as expected.
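The adjusted cropping logic can be checked in isolation. This standalone sketch passes the desired size in as parameters (instead of the desired_width_ and desired_height_ members) and leaves out frame dropping and VQC scaling; `AdaptToDesired` and `AdaptResult` are hypothetical names.

```cpp
#include <cassert>

struct AdaptResult {
  int cropped_width;
  int cropped_height;
  int out_width;
  int out_height;
};

// Mirrors the crop/scale decision of the modified AdaptFrameResolution().
AdaptResult AdaptToDesired(int in_width, int in_height, int desired_width,
                           int desired_height) {
  assert(in_width > 0 && in_height > 0);
  assert(desired_width > 0 && desired_height > 0);
  // Is the input at least as wide, relative to its height, as the target?
  const bool in_more = static_cast<float>(in_width) / in_height >=
                       static_cast<float>(desired_width) / desired_height;
  AdaptResult r{};
  if (in_more) {
    // Keep full height, trim the width to the target aspect ratio.
    r.cropped_height = in_height;
    r.cropped_width = r.cropped_height * desired_width / desired_height;
  } else {
    // Keep full width, trim the height to the target aspect ratio.
    r.cropped_width = in_width;
    r.cropped_height = r.cropped_width * desired_height / desired_width;
  }
  // The cropped region is then scaled to exactly the desired size.
  r.out_width = desired_width;
  r.out_height = desired_height;
  return r;
}
```

Like the modified code, the sketch always outputs the desired size, so a capture smaller than the target (for example 640x480 for a 1280x720 target) would be cropped to 640x360 and then scaled up.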

Summary

This article described how to configure the encoding resolution in WebRTC. To change the video encoding resolution, we need to understand the whole path of the video data: capture, transport, processing, and encoding. To summarize, the key steps for sending video at a custom encoding resolution are:

- First, set the desired encoding resolution;

- Modify GetFormatDistance() in videocapturer.cc so that an appropriate acquisition resolution is selected for the encoding resolution;

- Modify AdaptFrameResolution() in videoadapter.cc to compute the parameters needed to scale and crop from the acquisition resolution to the encoding resolution;

- Use libyuv to scale and crop the raw data to the encoding resolution according to those parameters;

- Send the new data to the encoder for encoding and transmission;

- Done.
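The libyuv scale-and-crop step in the list above can be illustrated on a single image plane. This is a hypothetical stand-in, not libyuv: `CropAndScalePlane` centers the crop window computed by the adapter and resamples it with point (nearest-neighbor) sampling, whereas libyuv's scalers offer higher-quality filtering. Cropping the centered region and then scaling it gives the same framing as scaling first and trimming the excess. For I420, the same operation would be applied to the Y plane and, with all sizes halved, to the U and V planes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical crop-and-scale on one 8-bit plane (e.g. the Y plane).
std::vector<uint8_t> CropAndScalePlane(const std::vector<uint8_t>& src,
                                       int src_w, int src_h,
                                       int crop_w, int crop_h,
                                       int out_w, int out_h) {
  assert(static_cast<std::size_t>(src_w) * src_h == src.size());
  assert(crop_w > 0 && crop_h > 0 && crop_w <= src_w && crop_h <= src_h);
  assert(out_w > 0 && out_h > 0);
  // Trim the same amount from both sides so the picture stays centered.
  const int crop_x = (src_w - crop_w) / 2;
  const int crop_y = (src_h - crop_h) / 2;
  std::vector<uint8_t> dst(static_cast<std::size_t>(out_w) * out_h);
  for (int y = 0; y < out_h; ++y) {
    // Map each output row/column back into the crop window.
    const int sy = crop_y + y * crop_h / out_h;
    for (int x = 0; x < out_w; ++x) {
      const int sx = crop_x + x * crop_w / out_w;
      dst[static_cast<std::size_t>(y) * out_w + x] =
          src[static_cast<std::size_t>(sy) * src_w + sx];
    }
  }
  return dst;
}
```

For the running example, the call would be `CropAndScalePlane(y_plane, 1440, 1080, 1080, 1080, 800, 800)`, which trims 180 columns from each side and produces an 800x800 plane.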

The same approach can be applied to other adjustments. That concludes this article. We will keep sharing more audio and video implementation techniques, and you are welcome to leave a comment to discuss them with us.

With the arrival of the 5G era, audio and video applications will become ever more widespread, and the possibilities are broad.

About the Author

He Jingjing is an audio and video engineer on the NetEase Yunxin client team, responsible for the research and development of Yunxin's cross-platform audio and video SDK. She previously worked on audio and video in online education, has experience building real-time audio and video solutions for a range of scenarios, and enjoys studying and solving complex technical problems.

For more in-depth technical articles, follow the [Netease Smart Enterprise Technology+] WeChat official account.