Live broadcast is undoubtedly one of the hottest areas of today's mobile Internet, and its popularity has attracted a great deal of commercial capital. With live broadcast apps springing up everywhere, the industry has reached a real moment of opportunity. To ride this wave, an app has to read which way the wind is blowing and introduce differentiated features that keep users engaged. Only then can it gain a firm foothold in an era of constant innovation.

Drawing on NetEase Yunxin's hands-on exploration and practice, this series of articles gives a comprehensive introduction to Lianmai (co-hosting) interactive live broadcast, an in-depth optimization of the live experience. It covers everything from scenarios and workflow to the solution, its architecture, and further optimizations.

Recommended reading:

Full practice of Lianmai interactive live broadcast scheme 1: What is Lianmai interactive live broadcast?

Full practice of Lianmai interactive live broadcast scheme 2: The evolution process of Netease Yunxin Lianmai interactive live broadcast scheme

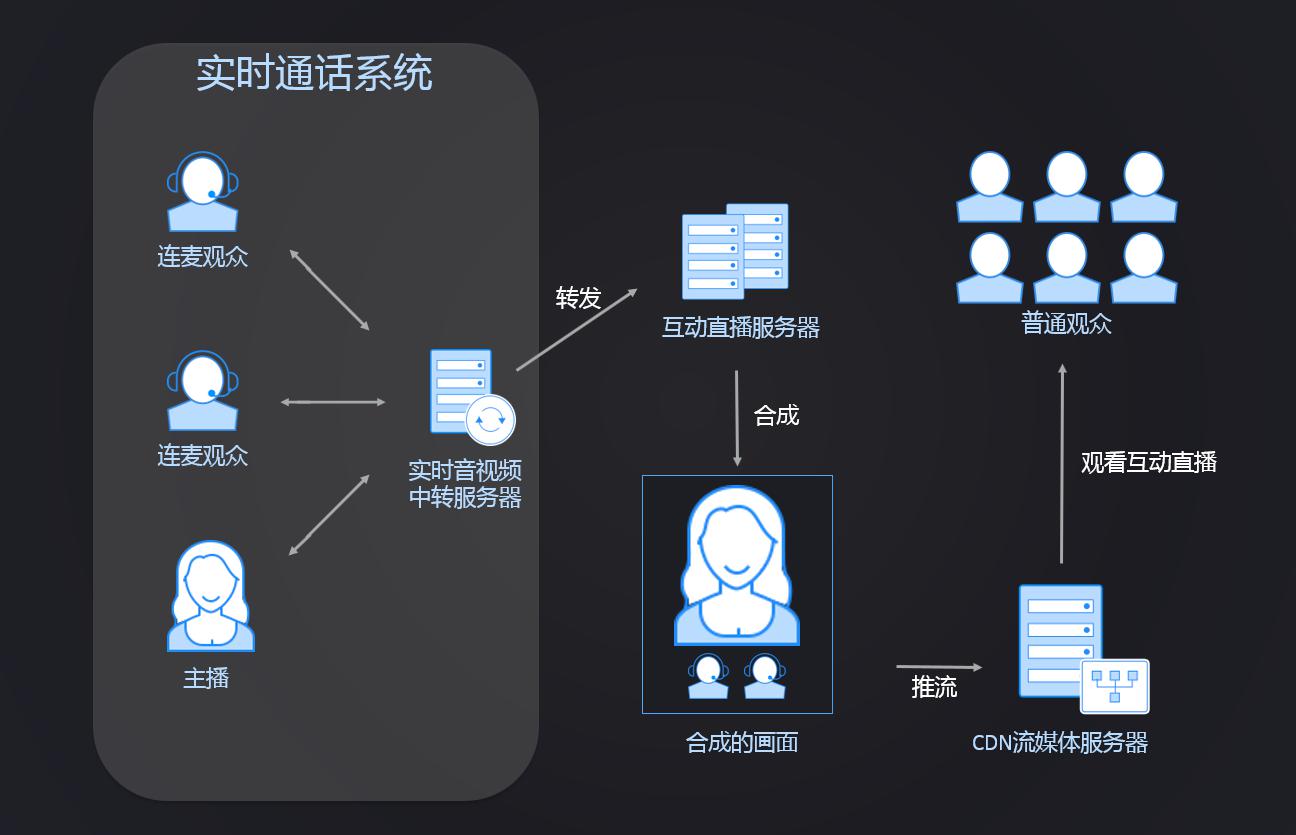

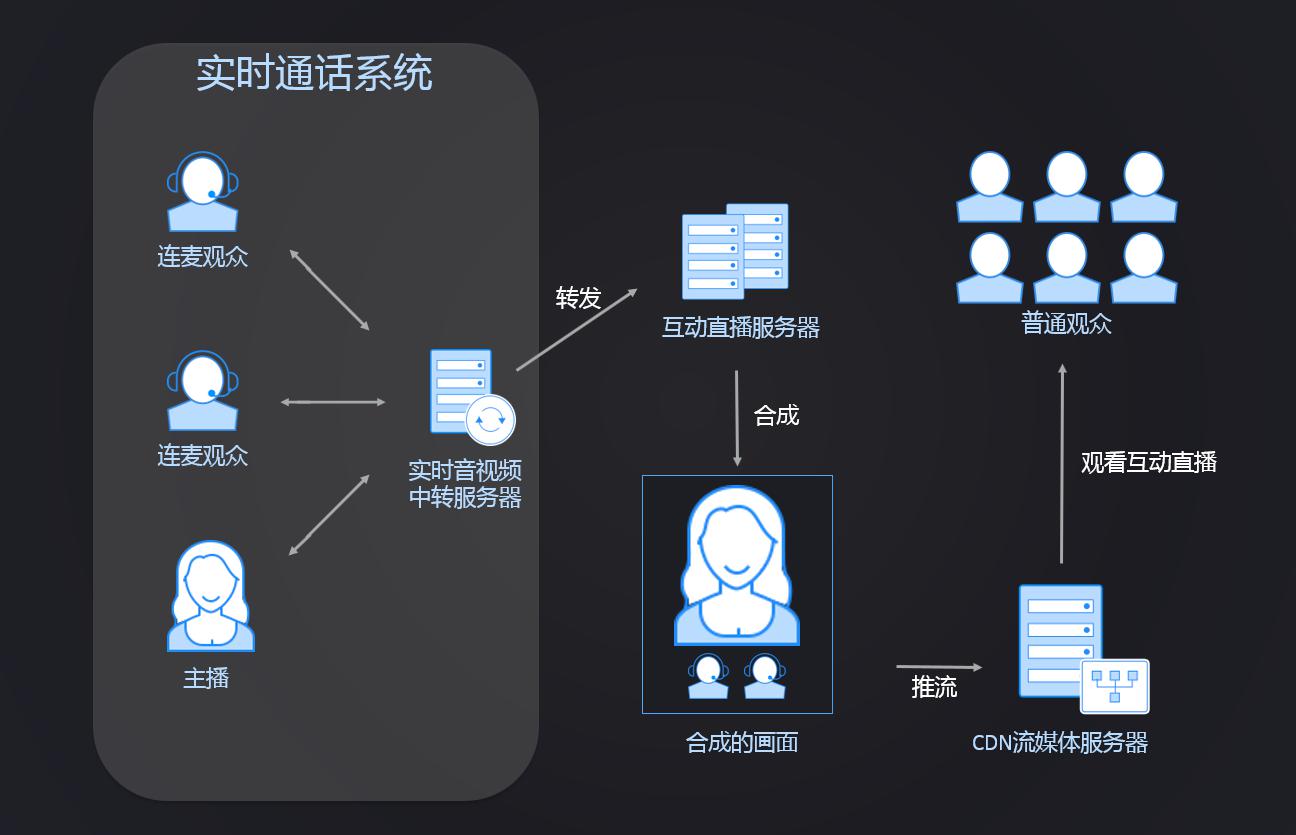

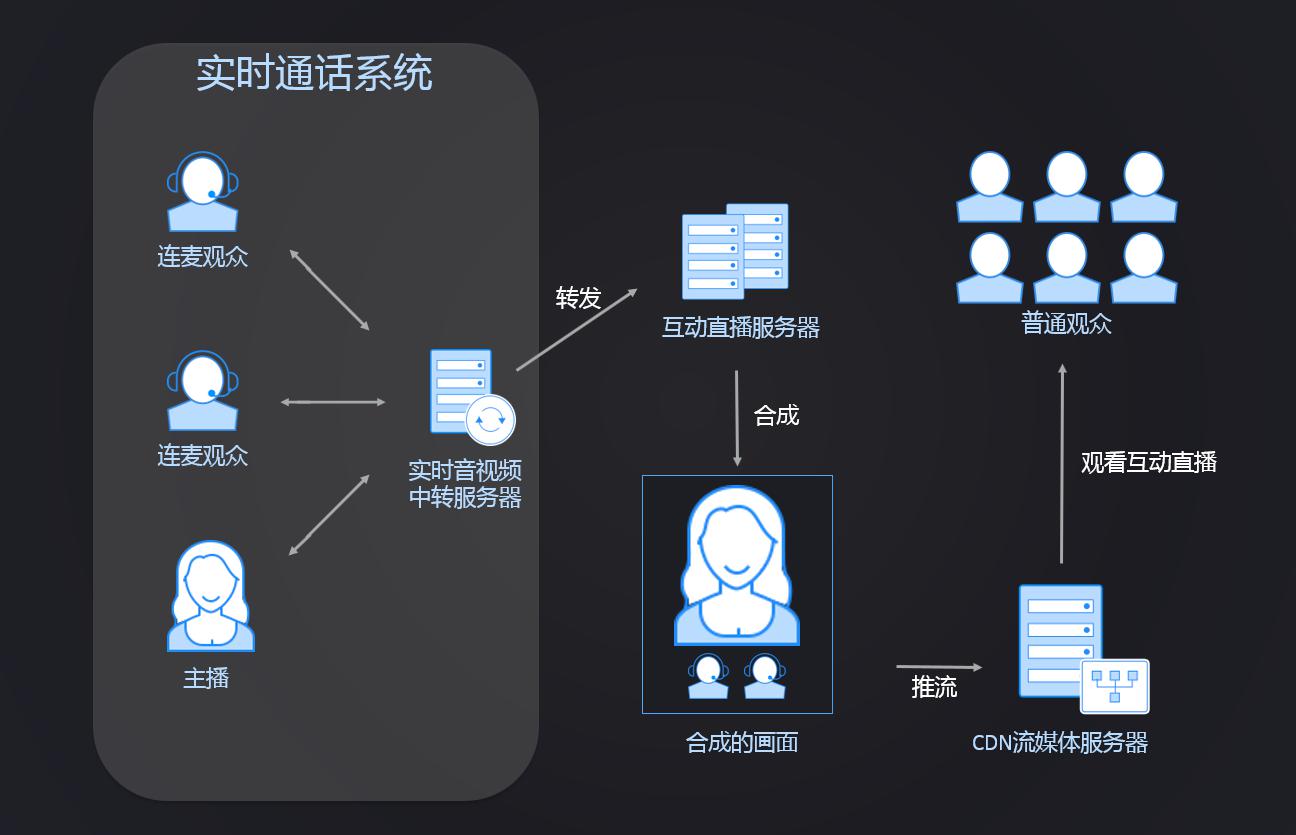

Now let's look at how NetEase Yunxin implements its new Lianmai interactive live broadcast solution. As the architecture diagram shows, the system consists of two main modules: the real-time audio and video system and the interactive live broadcast server system.


Real-time audio and video system

First, the real-time audio and video system. Real-time audio and video is a network-based technology that lets two or more people hold low-latency audio or video calls. Skype, FaceTime, WeChat, and Easel all offer real-time audio and video calling. In interactive live broadcast, the real-time audio and video system mainly provides the low-latency co-hosting connection.

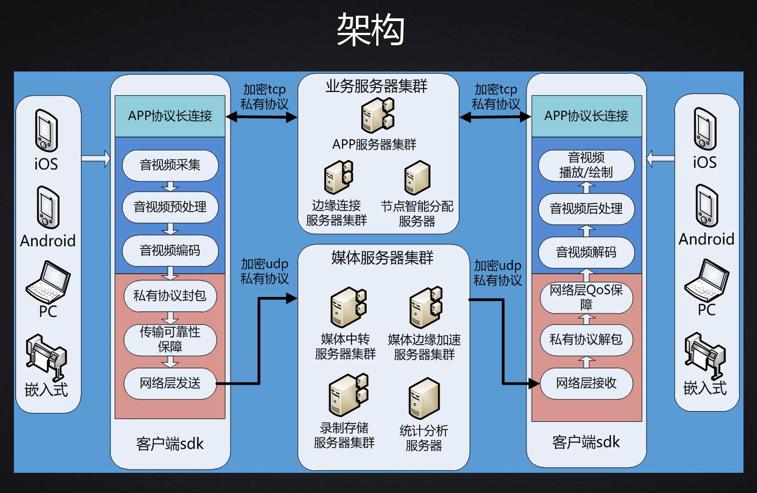

Let's take a look at the architecture of real-time audio and video:


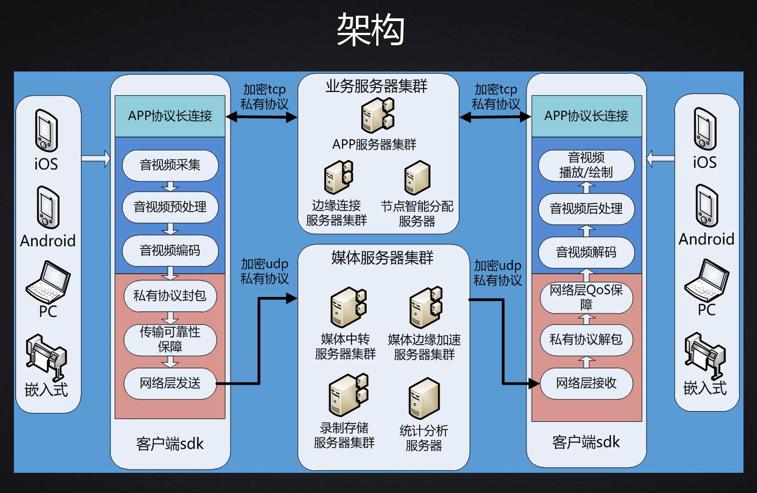

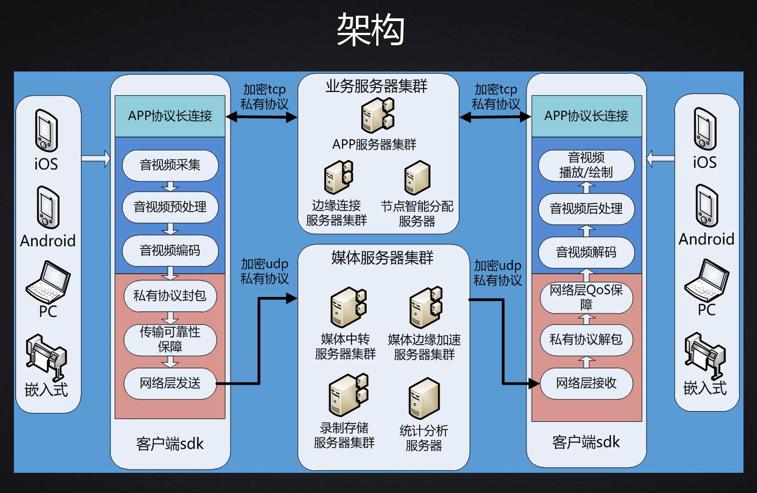

Clients on all platforms, including iOS, Android, PC, and embedded devices, access the real-time audio and video system through the client SDK.

The client maintains a long-lived connection to the service server cluster over the APP protocol, an encrypted private protocol on top of TCP that is mainly used to initiate services and push notifications. The service server cluster includes edge access servers, which accelerate user access, and distribution servers, which intelligently allocate media server nodes around the world to users. Once the client has obtained a media server address from the distribution server via the APP protocol, it enters the media and network stages of the real-time audio and video flow.

For clarity, the architecture diagram shows a one-way flow, with the sender on the left and the receiver on the right. On the sender side, the codec layer captures audio and video, preprocesses them, and encodes them; the result then enters the network layer. The network layer packs the data into the private protocol and adds reliability guarantees, then sends the packets over an encrypted private protocol on UDP to the media server cluster that the distribution server assigned with its intelligent allocation algorithm. The packets reach the core media relay server through edge acceleration nodes, and the media relay server forwards them to the other users in the same session.
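The article does not publish the layout of Yunxin's private UDP protocol. As an illustration only, the sketch below shows the kind of packetization a sender's network layer typically performs: splitting one encoded frame into UDP-sized fragments, each carrying a sequence number so the receiver can detect loss and reorder. The header fields, `MAX_PAYLOAD`, and the `Packetizer` class are hypothetical, and encryption is omitted.

```python
import struct
from dataclasses import dataclass

# Hypothetical header layout (NOT Yunxin's real private protocol):
# seq (uint32) | timestamp in ms (uint32) | frame id (uint16) | frag index (uint8) | frag count (uint8)
HEADER = struct.Struct("!IIHBB")
MAX_PAYLOAD = 1200  # assumed budget that keeps header + payload well under a 1500-byte MTU


@dataclass
class Packetizer:
    next_seq: int = 0  # per-stream sequence number; lets the receiver detect loss and reorder

    def packetize(self, frame: bytes, frame_id: int, ts_ms: int) -> list[bytes]:
        """Split one encoded frame into UDP-sized packets, each with a small header."""
        chunks = [frame[i:i + MAX_PAYLOAD] for i in range(0, len(frame), MAX_PAYLOAD)] or [b""]
        if len(chunks) > 255:
            raise ValueError("frame too large for an 8-bit fragment count")
        packets = []
        for idx, chunk in enumerate(chunks):
            header = HEADER.pack(self.next_seq, ts_ms & 0xFFFFFFFF, frame_id & 0xFFFF, idx, len(chunks))
            packets.append(header + chunk)  # encryption would be applied here; omitted in this sketch
            self.next_seq = (self.next_seq + 1) & 0xFFFFFFFF  # wrap like a uint32
        return packets
```

For example, a 2,500-byte frame becomes three packets carrying 1,200, 1,200, and 100 bytes of payload, with consecutive sequence numbers that retransmission or FEC logic could key on.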

Now the receiver. It also uses the encrypted private protocol on UDP. Its network layer receives the packets forwarded by the media relay server, unpacks the private protocol from the bottom up, and then applies network-layer QoS measures. Once network-layer processing is complete, the complete encoded audio and video data is passed up to the codec layer through a callback. The codec layer first decodes the audio and video, then post-processes them, and finally plays the audio and renders the video in the interface.
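The article does not detail the receiver's QoS layer. One common building block there is a jitter buffer; the sketch below is a deliberately simplified, count-based relative of one. It releases packets in sequence order, drops late duplicates, and declares a gap lost once more than `depth` later packets are waiting. The class and its `depth` parameter are hypothetical, and the sketch assumes sequence numbers do not wrap; a production jitter buffer would also adapt its playout delay to the measured network jitter.

```python
import heapq


class JitterBuffer:
    """Minimal reordering buffer keyed by sequence number (assumes no wraparound)."""

    def __init__(self, depth: int = 3):
        self.depth = depth                       # packets to hold before giving up on a gap
        self.heap: list[tuple[int, bytes]] = []  # min-heap ordered by sequence number
        self.next_seq = None                     # next sequence number to release
        self.lost = 0                            # packets declared lost so far

    def push(self, seq: int, payload: bytes) -> list[bytes]:
        """Insert one packet and return any payloads that are now ready, in order."""
        if self.next_seq is not None and seq < self.next_seq:
            return []  # arrived after its slot was released or skipped: drop it
        if self.next_seq is None:
            self.next_seq = seq  # the first packet defines where playback starts
        heapq.heappush(self.heap, (seq, payload))
        return self._drain()

    def _drain(self) -> list[bytes]:
        out = []
        while self.heap:
            seq, payload = self.heap[0]
            if seq == self.next_seq:
                heapq.heappop(self.heap)
                out.append(payload)
                self.next_seq += 1
            elif seq < self.next_seq:
                heapq.heappop(self.heap)  # duplicate of an already released packet
            elif len(self.heap) > self.depth:
                # The gap has persisted too long: count the missing packets as lost and skip ahead.
                self.lost += seq - self.next_seq
                self.next_seq = seq
            else:
                break  # wait for the missing packet a little longer
        return out
```

The lost count is the signal a real receiver would feed into concealment (for audio) or retransmission requests.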

This completes one direction of the audio and video data flow; the reverse direction works the same way. We can also see that the most critical part of the media server cluster is the relay server. There is also a recording storage server, which records users' audio and video during a call and stores it in the cloud. The statistical analysis server analyzes users' call quality and the system's running status. This is very helpful for optimizing real-time call quality and also informs the strategy of the node allocation server.

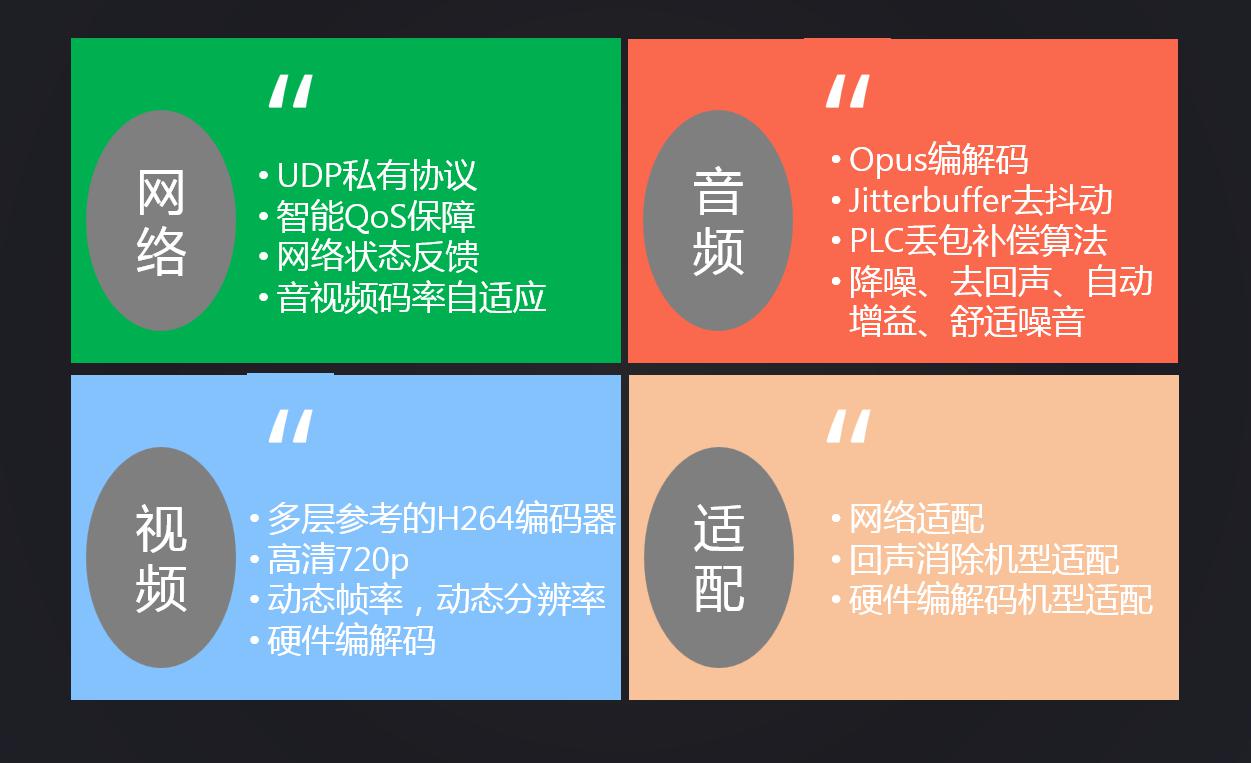

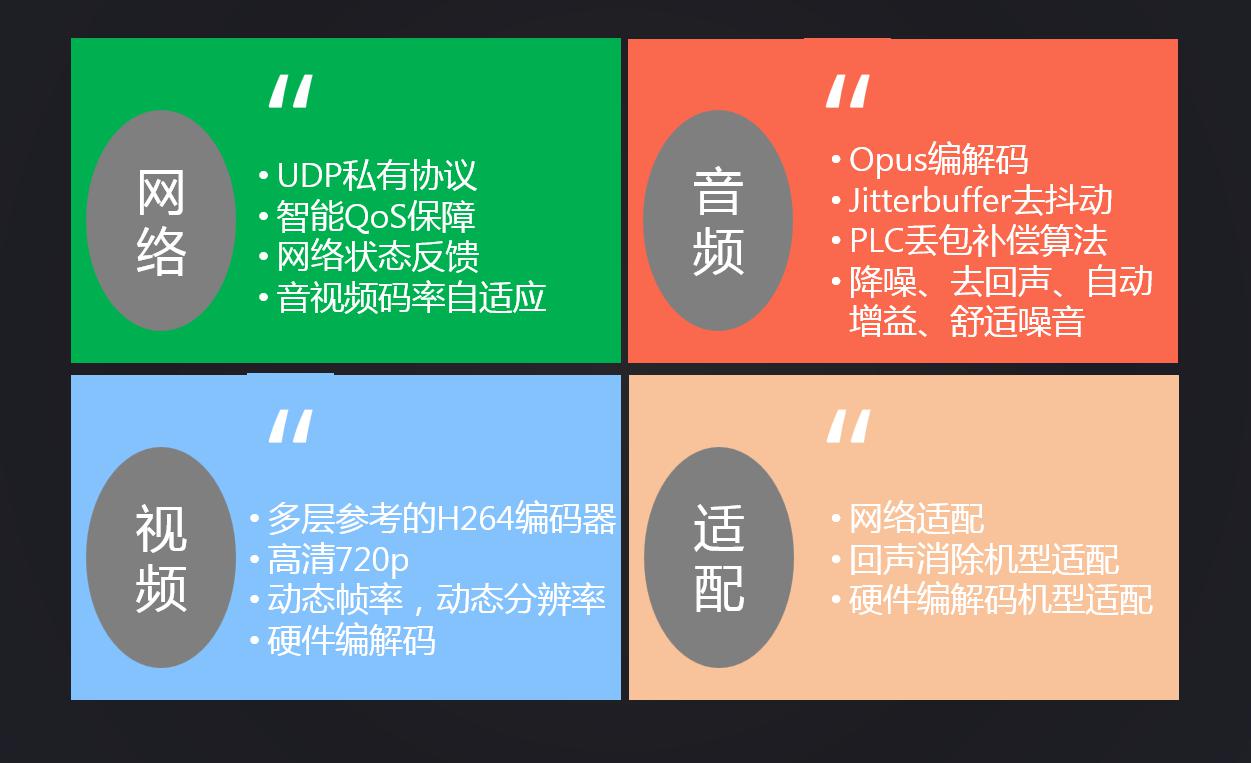

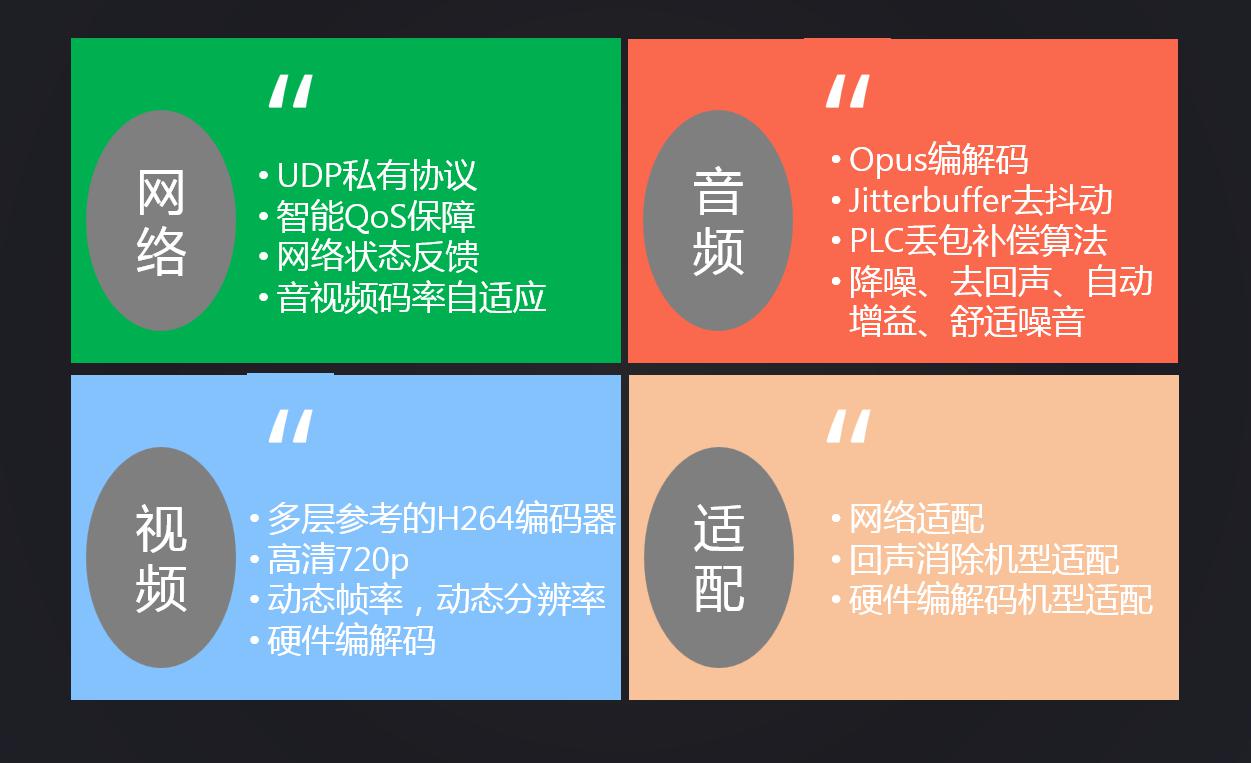

The main technical points in implementing the real-time audio and video system fall into four areas: network, audio, video, and adaptation.


The real-time audio and video system described above lays a solid foundation for low-latency Lianmai interactive live broadcast.

Interactive live broadcast server

The main functions of the interactive live broadcast server are:

- Compositing the video of the host and the co-host (the connected viewer) into a single picture;

- Connecting to the CDN streaming media server.

Its key requirements are: bridging the real-time system and the live broadcast system; compositing video in real time; keeping the interaction synchronized; and running on high-performance hardware.

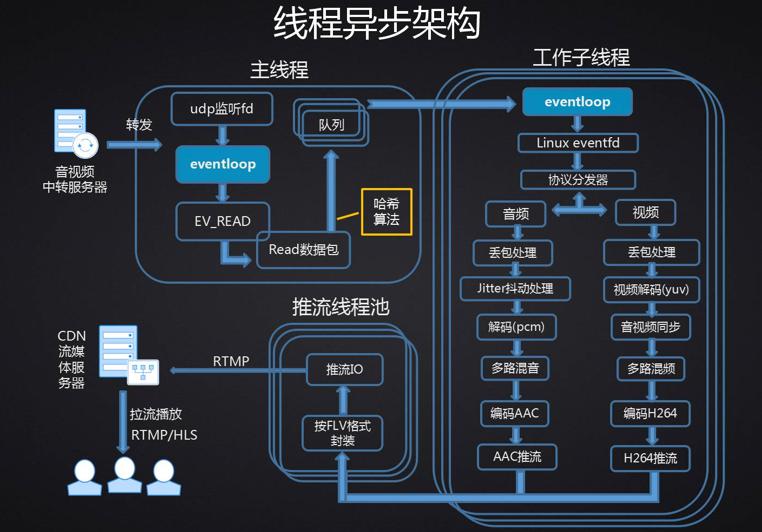

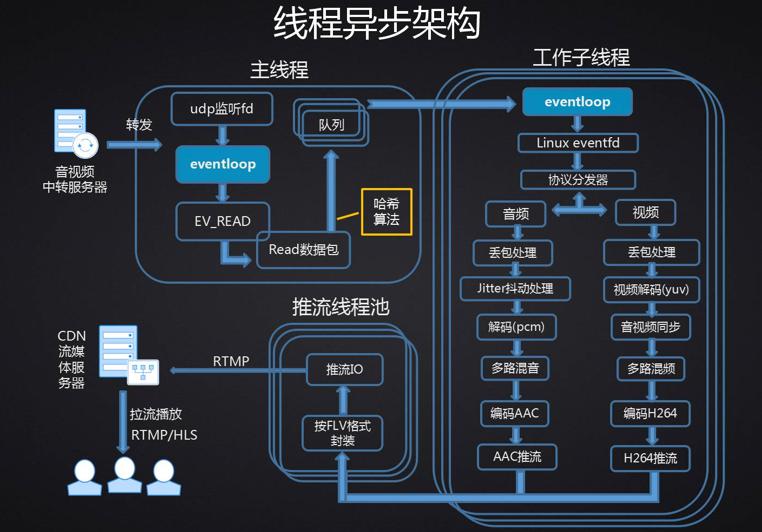

Let's take a brief look at the multi-threaded asynchronous architecture of the interactive live broadcast server.


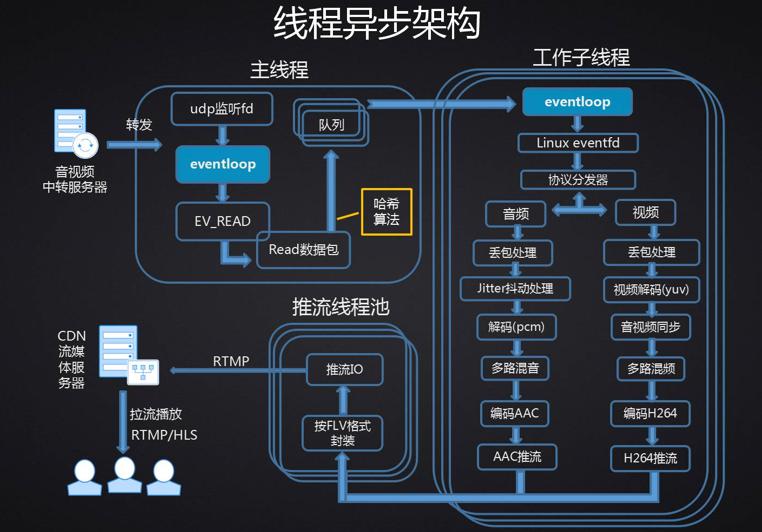

The interactive live broadcast server consists mainly of one IO main thread, multiple worker sub-threads, and multiple streaming thread pools.

The IO main thread creates the UDP listening fd and registers its readable events with the event loop. The relay server forwards audio and video data to the port the main thread listens on, and the event loop fires the read callback. The IO main thread then reads the packets from the kernel's UDP receive buffer and, using a hash algorithm, places each one into the temporary queue of the corresponding worker sub-thread. Once the kernel buffer has been drained, the IO main thread triggers the eventfd readable event that each worker sub-thread's event loop listens on. The data in each of the main thread's temporary queues is then handed to the corresponding worker sub-thread for processing in the form of closures.
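The dispatch pattern above can be sketched as follows. The article's server uses an event loop with eventfd wake-ups; in this Python sketch one `queue.Queue` per worker stands in for "eventfd plus task queue", and the IO side posts one closure per worker after draining a batch. The names (`worker_for`, `io_dispatch`, `Worker`), the per-stream key, and the choice of CRC32 are assumptions; the article only says "a hash algorithm". Hashing on a stream key means every packet of one stream lands on the same worker, so per-stream state such as a jitter buffer or decoder never needs locking.

```python
import queue
import threading
import zlib
from collections import defaultdict

NUM_WORKERS = 4  # hypothetical worker count


def worker_for(stream_key: bytes, num_workers: int = NUM_WORKERS) -> int:
    """Stable hash: all packets of one stream map to the same worker."""
    return zlib.crc32(stream_key) % num_workers


class Worker(threading.Thread):
    def __init__(self, wid: int):
        super().__init__(daemon=True)
        self.wid = wid
        self.tasks: queue.Queue = queue.Queue()  # stands in for eventfd + task queue
        self.seen = defaultdict(list)            # stream_key -> payloads, in arrival order

    def run(self):
        while True:
            task = self.tasks.get()
            if task is None:  # shutdown sentinel
                break
            task()            # run the closure posted by the IO thread

    def process(self, batch):
        # Real code would unpack, de-jitter, and decode here; we just record the order.
        for key, payload in batch:
            self.seen[key].append(payload)


def io_dispatch(packets, workers):
    """Group one drained batch into per-worker temporary queues, then post one closure each."""
    pending = defaultdict(list)
    for key, payload in packets:
        pending[worker_for(key, len(workers))].append((key, payload))
    for wid, batch in pending.items():
        w = workers[wid]
        # Default arguments bind the current w and batch into the closure.
        w.tasks.put(lambda w=w, batch=batch: w.process(batch))
```

Batching per drain, rather than waking a worker for every packet, keeps the number of cross-thread wake-ups proportional to reads from the kernel rather than to packets.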

Each worker sub-thread takes packets from its queue in turn, unpacks the data protocol, and passes them to a protocol dispatcher that splits them by business logic. For audio, the worker handles packet loss, smooths jitter with a jitter buffer algorithm, and decodes the data into PCM. For video, it also handles packet loss and then decodes the video into YUV; the video is also synchronized with the audio. Next, the multiple PCM audio streams and multiple YUV video streams in each room are mixed. After mixing, the PCM data is encoded as AAC and handed to AAC streaming, and the YUV data is encoded as H.264 and handed to H.264 streaming.
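The article does not say how the PCM streams are mixed. A common, simple approach is to sum 16-bit samples and clip to the valid range, sketched below. `mix_pcm` is a hypothetical name, and the sketch assumes all frames already share sample rate, channel layout, and length (for example, after resampling). Production mixers usually add gain control so that several loud speakers do not clip constantly.

```python
from array import array


def mix_pcm(streams: list[array]) -> array:
    """Mix equal-length signed 16-bit PCM frames by summing samples and clipping."""
    if not streams:
        return array("h")
    n = len(streams[0])
    if any(len(s) != n for s in streams):
        raise ValueError("all frames must have the same number of samples")
    out = array("h", [0] * n)
    for i in range(n):
        total = sum(s[i] for s in streams)
        out[i] = max(-32768, min(32767, total))  # clip to the int16 range
    return out
```

For example, mixing `[1000, 30000]` with `[2000, 10000]` yields `[3000, 32767]`: the second sample would overflow int16, so it is clipped.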

The streaming thread pool encapsulates the raw AAC and H.264 data in FLV format and then uses network IO to push the audio and video packets to the CDN streaming media server over the RTMP protocol.
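To make the FLV encapsulation step concrete, here is a sketch of building FLV tags for AAC and H.264 data. The field layout follows the public FLV specification; the helper names are illustrative, not Yunxin's code. When pushing over RTMP, the tag body (the bytes after the 11-byte tag header) is what travels in RTMP audio (type 8) and video (type 9) messages. Not shown: before any raw frames, a stream must send the sequence headers (AACPacketType 0 carrying the AudioSpecificConfig, and AVCPacketType 0 carrying the AVCDecoderConfigurationRecord).

```python
import struct

TAG_AUDIO, TAG_VIDEO = 8, 9

# File header: "FLV", version 1, flags 0x05 (audio 0x04 | video 0x01), header size 9,
# followed by PreviousTagSize0 = 0.
FLV_FILE_HEADER = b"FLV" + bytes([1, 0x05]) + struct.pack(">I", 9) + struct.pack(">I", 0)


def _u24(n: int) -> bytes:
    return n.to_bytes(3, "big")


def flv_tag(tag_type: int, timestamp_ms: int, body: bytes) -> bytes:
    """One FLV tag followed by its PreviousTagSize field."""
    header = (
        bytes([tag_type])
        + _u24(len(body))                       # DataSize
        + _u24(timestamp_ms & 0xFFFFFF)         # lower 24 bits of the timestamp
        + bytes([(timestamp_ms >> 24) & 0xFF])  # TimestampExtended: upper 8 bits
        + _u24(0)                               # StreamID, always 0
    )
    return header + body + struct.pack(">I", len(header) + len(body))


def aac_raw_body(aac_frame: bytes) -> bytes:
    # 0xAF = SoundFormat 10 (AAC) with the rate/size/type bits the spec requires for AAC;
    # then AACPacketType 1 (raw AAC frame).
    return bytes([0xAF, 0x01]) + aac_frame


def avc_nalu_body(nalus: list[bytes], keyframe: bool, cts: int = 0) -> bytes:
    # FrameType (1 = key, 2 = inter) in the high nibble, CodecID 7 (AVC) in the low nibble;
    # then AVCPacketType 1 (NALU) and a signed 24-bit composition time offset.
    first = 0x17 if keyframe else 0x27
    payload = b"".join(struct.pack(">I", len(n)) + n for n in nalus)  # 4-byte length prefixes
    return bytes([first, 0x01]) + cts.to_bytes(3, "big", signed=True) + payload
```

Usage: `flv_tag(TAG_VIDEO, pts_ms, avc_nalu_body(nalus, keyframe=True))` produces one complete video tag ready to append after `FLV_FILE_HEADER`.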

Ordinary viewers pull the audio and video streams from the CDN streaming media server using the RTMP playback address to watch the Lianmai interactive live broadcast.

Note that only one IO thread handles read operations. This mainly ensures that IO is not affected by the audio and video codecs. We also bind the IO thread to a dedicated CPU core.

The worker sub-threads are likewise bound to their own CPU cores to improve performance; binding improves performance by about 20%. The main reason is that the worker sub-threads are extremely compute-intensive and put heavy pressure on the CPU. Without binding, a thread may migrate between cores and lose performance as a result, for example by losing the contents of the previous core's caches.
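A sketch of the pinning layout described above: reserve one core for the IO thread and spread the workers over the remaining cores. The round-robin assignment and the helper names are assumptions; the article only says each thread is bound to a specific core. `os.sched_setaffinity` exists only on Linux (and some Unix systems), and on Linux a pid of 0 applies to the calling thread. Note that CPython's GIL prevents compute-bound Python threads from running in parallel, so this only illustrates the layout a native server would use.

```python
import os


def affinity_plan(num_workers: int, cpus: list[int]) -> dict[str, int]:
    """Reserve the first CPU for the IO thread and spread workers over the rest."""
    if len(cpus) < 2:
        raise ValueError("need at least two CPUs to separate IO from workers")
    io_cpu, worker_cpus = cpus[0], cpus[1:]
    plan = {"io": io_cpu}
    for i in range(num_workers):
        plan[f"worker-{i}"] = worker_cpus[i % len(worker_cpus)]  # round-robin if oversubscribed
    return plan


def pin_current_thread(cpu: int) -> bool:
    """Bind the calling thread to one CPU; returns False where the call is unavailable."""
    if not hasattr(os, "sched_setaffinity"):
        return False
    os.sched_setaffinity(0, {cpu})  # on Linux, pid 0 means the calling thread
    return True
```

Each thread would call `pin_current_thread(plan[its_name])` at startup; keeping the IO core out of the worker pool is what prevents decoding and encoding from delaying packet reads.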

Server deployment and intelligent allocation

Having covered the functions and implementation details of these two systems, what are we still missing?

Our services have to run on servers, and server deployment and allocation are crucial to Lianmai interactive live broadcast. Next, let's look at the key points of server deployment and intelligent allocation.

First, global node deployment is essential for providing high-quality service to users around the world. Building on NetEase Yunxin's experience and NetEase's data centers worldwide, we have deployed multiple BGP data centers and triple-line data centers (connected to China's three major carriers) in China, as well as nodes overseas. To optimize cross-border calls in particular, we also use dedicated cross-border fiber lines from international carriers.

Second, with so many nodes, an intelligent node allocation strategy is required. Each user should be assigned the best access node based on the user's geographic location and ISP. Allocation should also take each node's real-time load and current network conditions into account.
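The allocation criteria above (location, ISP, real-time load, network conditions) can be combined into a single score per node. The sketch below is illustrative only: the `Node` fields, the weights, and the 0.9 load cutoff are hypothetical values, not Yunxin's actual strategy.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    region: str
    isps: frozenset[str]  # carriers this node has good connectivity to
    load: float           # 0.0 (idle) to 1.0 (full)
    rtt_ms: float         # recent measured RTT from the user's area
    loss: float           # recent packet-loss ratio, 0.0 to 1.0


def score(node: Node, user_region: str, user_isp: str) -> float:
    """Higher is better. All weights are illustrative assumptions."""
    s = 0.0
    s += 30 if node.region == user_region else 0
    s += 30 if user_isp in node.isps else 0
    s -= 40 * node.load
    s -= 0.1 * node.rtt_ms
    s -= 200 * node.loss
    return s


def allocate(nodes: list[Node], user_region: str, user_isp: str) -> Node:
    candidates = [n for n in nodes if n.load < 0.9]  # skip nearly full nodes
    if not candidates:
        raise RuntimeError("no node has spare capacity")
    return max(candidates, key=lambda n: score(n, user_region, user_isp))
```

A multi-carrier BGP node scores well for users on any ISP, while a single-carrier node only wins for users on that carrier, which matches the intent of deploying both kinds of data center.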

Third, allocating nodes on the server side alone is not enough; the client also needs an intelligent routing strategy that selects the best link based on each link's packet loss and latency. When a link is interrupted, the client switches to another one. The switch is transparent to the upper layers, so ongoing calls are not affected.
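The client-side routing described above can be sketched as a small selector: pick the link with the lowest cost, switch immediately when the current link goes down, and otherwise switch only when another link is clearly better. The cost formula (each 1% of loss counted as 10 ms of delay), the 0.8 hysteresis factor, and the class names are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    rtt_ms: float
    loss: float
    alive: bool = True


def link_cost(link: Link) -> float:
    # Hypothetical cost: each 1% of packet loss counts as 10 ms of extra delay.
    return link.rtt_ms + 1000 * link.loss


class Router:
    def __init__(self, links: list[Link]):
        self.links = {link.name: link for link in links}
        self.current = self._best()

    def _best(self) -> str:
        alive = [link for link in self.links.values() if link.alive]
        if not alive:
            raise RuntimeError("all links are down")
        return min(alive, key=link_cost).name

    def on_probe(self, name: str, rtt_ms: float, loss: float) -> str:
        """Record a fresh measurement for one link and return the link to use."""
        link = self.links[name]
        link.rtt_ms, link.loss, link.alive = rtt_ms, loss, True
        return self._reselect()

    def on_down(self, name: str) -> str:
        """Mark a link as interrupted and return the link to use."""
        self.links[name].alive = False
        return self._reselect()

    def _reselect(self, hysteresis: float = 0.8) -> str:
        best = self._best()
        cur = self.links[self.current]
        # Switch at once if the current link died; otherwise only if the best link is
        # clearly cheaper, which avoids flapping between links with similar quality.
        if not cur.alive or link_cost(self.links[best]) < hysteresis * link_cost(cur):
            self.current = best
        return self.current
```

Because the sending code only ever reads `router.current`, a failover changes where packets go without the session above it noticing.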

Fourth, to ensure the high availability of the entire real-time audio and video service, all our servers are deployed in a high-availability architecture. Because audio and video servers place heavy demands on network cards, 80% of our servers are high-performance physical machines with 10-Gigabit network cards. We also have recovery and failover policies for server crashes and network outages, all in service of keeping our real-time audio and video service highly available.

That concludes the implementation of NetEase Yunxin's new Lianmai interactive live broadcast solution. If you have questions, please leave a message and interact with us.