select, poll, and epoll are all I/O multiplexing mechanisms. I/O multiplexing lets a single process monitor multiple descriptors at once; when a descriptor becomes ready (generally ready for reading or ready for writing), the program is notified so that it can perform the corresponding read or write. However, select, poll, and epoll are all synchronous I/O in essence, because the program itself must do the reading and writing after the readiness event arrives, and the process is blocked while that read or write copies the data. With asynchronous I/O the program does not do the reading and writing itself: the asynchronous I/O implementation is responsible for copying data from kernel space to user space.

The usage of these three mechanisms is covered in my three previous posts, each tested with an echo server program. The links are as follows:

select: http://www.cnblogs.com/Anker/archive/2013/08/14/3258674.html

poll: http://www.cnblogs.com/Anker/archive/2013/08/15/3261006.html

epoll: http://www.cnblogs.com/Anker/archive/2013/08/17/3263780.html

Today I compare these three I/O multiplexing mechanisms. Drawing on material from the Internet and from books, the comparison is organized as follows:

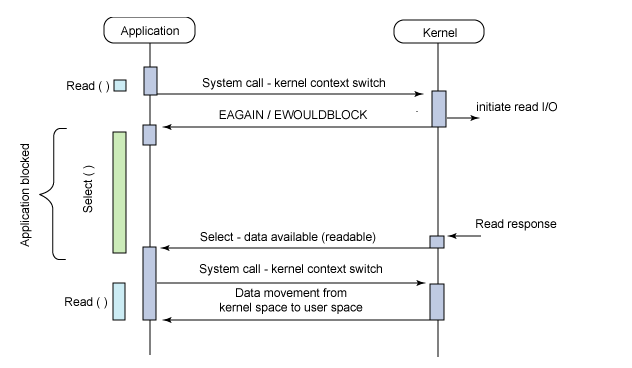

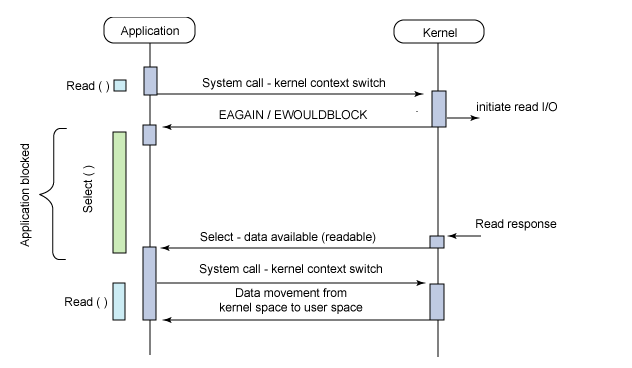

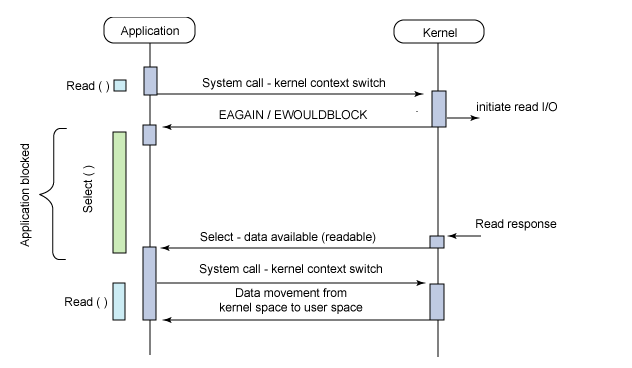

1. select implementation

The call process of select is as follows:

(1) Use copy_from_user to copy fd_set from user space to kernel space

(2) Register callback function __pollwait

(3) Traverse all fds and call their corresponding poll method (for sockets, this poll method is sock_poll, and sock_poll will call tcp_poll, udp_poll, or datagram_poll as appropriate)

(4) Taking tcp_poll as an example, its core step is a call to __pollwait, the callback function registered above.

(5) The main job of __pollwait is to hang current (the current process) on the device's wait queue. Different devices have different wait queues; for tcp_poll, the wait queue is sk->sk_sleep (note that hanging the process on the wait queue does not mean the process has gone to sleep). After the device receives a message (network device) or finishes filling in file data (disk device), it wakes up the processes sleeping on its wait queue, and current is awakened.

(6) The poll method returns a mask describing whether read and write operations are ready, and fd_set is assigned according to this mask.

(7) If all fds have been traversed and none returned a readable or writable mask, schedule_timeout is called to put the process that called select (that is, current) to sleep. When a device driver finds its resource readable or writable, it wakes up the processes sleeping on its wait queue. If nothing wakes the process within the timeout (the one passed to schedule_timeout), the process that called select is awakened anyway, gets the CPU again, and traverses the fds once more to check whether any fd is ready.

(8) Copy fd_set from kernel space to user space.
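Seen from user space, the steps above look like a single blocking call. The following is a minimal sketch, not the kernel code: wait_readable is a hypothetical helper name, and a pipe is assumed as a stand-in for a socket when trying it out.

```c
#include <assert.h>
#include <sys/select.h>
#include <unistd.h>

/* Hypothetical helper: wait up to timeout_ms for fd to become readable.
 * Returns select()'s result: 1 if readable, 0 on timeout, -1 on error. */
int wait_readable(int fd, int timeout_ms)
{
    /* An fd_set can only describe descriptors below FD_SETSIZE. */
    assert(fd >= 0 && fd < FD_SETSIZE);

    fd_set readfds;
    FD_ZERO(&readfds);
    FD_SET(fd, &readfds);

    struct timeval tv;
    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;

    /* The kernel copies readfds in, polls the fd, sleeps until the fd is
     * ready or the timeout expires, then copies the result set back out. */
    return select(fd + 1, &readfds, NULL, NULL, &tv);
}
```

On the read end of an empty pipe, wait_readable should return 0 once the timeout expires (step 7); after one byte is written to the other end, it should return 1.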

Summary:

Several disadvantages of select:

(1) Every call to select copies the fd set from user space to kernel space, which is expensive when there are many fds.

(2) Every call to select also requires the kernel to traverse all the fds passed in, which is likewise expensive when there are many fds.

(3) The number of file descriptors select supports is small: the default limit (FD_SETSIZE) is 1024.
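These three costs are visible in ordinary calling code. The sketch below is an illustration under assumptions, not a reference implementation: count_readable is a hypothetical helper, and it uses a zero timeout so that it only checks the current state.

```c
#include <assert.h>
#include <sys/select.h>
#include <unistd.h>

/* Hypothetical helper: check nfds descriptors once (zero timeout) and
 * return how many are readable, 0 if none, or -1 on error. */
int count_readable(const int *fds, int nfds)
{
    fd_set readfds;
    int maxfd = -1;

    /* Disadvantage (1): select() overwrites the set with its result, so the
     * set is rebuilt and copied into the kernel again on every call. */
    FD_ZERO(&readfds);
    for (int i = 0; i < nfds; i++) {
        /* Disadvantage (3): descriptors >= FD_SETSIZE do not fit at all. */
        assert(fds[i] >= 0 && fds[i] < FD_SETSIZE);
        FD_SET(fds[i], &readfds);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }

    struct timeval tv = {0, 0};
    int n = select(maxfd + 1, &readfds, NULL, NULL, &tv);
    if (n <= 0)
        return n;

    /* Disadvantage (2) has a user-space twin: the caller must also scan
     * every fd to learn which ones are ready. */
    int ready = 0;
    for (int i = 0; i < nfds; i++)
        if (FD_ISSET(fds[i], &readfds))
            ready++;
    return ready;
}
```

With three pipes whose read ends are passed in, the count should go from 0 to 1 to 2 as one byte is written to two of them in turn; the whole set is rebuilt for each of those calls.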

2. poll implementation

The implementation of poll is very similar to that of select; the only difference is how the fd set is described. poll uses an array of pollfd structures instead of select's fd_set structure, and the rest is much the same.
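To make the pollfd difference concrete, here is a minimal sketch. poll_readable and the 64-entry array are assumptions made for this example; the array size is a limit of the sketch, not of poll itself.

```c
#include <assert.h>
#include <poll.h>
#include <unistd.h>

/* Hypothetical helper: wait up to timeout_ms and return how many of the
 * given fds report POLLIN, 0 on timeout, or -1 on error. */
int poll_readable(const int *fds, int nfds, int timeout_ms)
{
    struct pollfd pfds[64];
    assert(nfds >= 0 && nfds <= 64);   /* limit of this sketch only */

    for (int i = 0; i < nfds; i++) {
        pfds[i].fd = fds[i];
        pfds[i].events = POLLIN;   /* input: what the caller asks for */
        pfds[i].revents = 0;       /* output: what the kernel reports */
    }

    /* As with select, the whole array is handed to the kernel on each call. */
    int n = poll(pfds, (nfds_t)nfds, timeout_ms);
    if (n <= 0)
        return n;

    int ready = 0;
    for (int i = 0; i < nfds; i++)
        if (pfds[i].revents & POLLIN)
            ready++;
    return ready;
}
```

Because events and revents are separate fields, the request is not overwritten by the result, and the array length replaces the fixed-size bitmap. The per-call copy and the full traversal remain.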

For the analysis of the implementation of select and poll, please refer to the following blog posts:

http://blog.csdn.net/lizhiguo0532/article/details/6568964#comments

http://blog.csdn.net/lizhiguo0532/article/details/6568968

http://blog.csdn.net/lizhiguo0532/article/details/6568969

http://www.ibm.com/developerworks/cn/linux/l-cn-edntwk/index.html?ca=drs -

http://linux.chinaunix.net/techdoc/net/2009/05/03/1109887.shtml

3. epoll

Since epoll is an improvement on select and poll, it should avoid the three disadvantages above. How does epoll do that? First, look at how its calling interface differs from that of select and poll. select and poll each provide a single function, select or poll. epoll provides three: epoll_create, epoll_ctl, and epoll_wait. epoll_create creates an epoll handle, epoll_ctl registers the event types to monitor, and epoll_wait waits for events to occur.
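The three calls can be sketched in sequence as follows. epoll_wait_one is a hypothetical helper written for this illustration; creating and closing an epoll handle for a single wait is done only to keep the example short, and real programs normally keep the handle open.

```c
#include <assert.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: watch one fd for readability and return the fd that
 * epoll reports as ready, or -1 on timeout or error. */
int epoll_wait_one(int fd, int timeout_ms)
{
    assert(fd >= 0);

    /* 1. epoll_create: create the epoll handle. The size argument is only
     *    a hint on current kernels but must be greater than zero. */
    int epfd = epoll_create(1);
    if (epfd < 0)
        return -1;

    /* 2. epoll_ctl: register the fd and the event type to monitor. */
    struct epoll_event ev = {0};
    ev.events = EPOLLIN;
    ev.data.fd = fd;
    if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {
        close(epfd);
        return -1;
    }

    /* 3. epoll_wait: wait for the event to occur. */
    struct epoll_event out;
    int n = epoll_wait(epfd, &out, 1, timeout_ms);
    int ready_fd = (n == 1) ? out.data.fd : -1;

    close(epfd);
    return ready_fd;
}
```

On the read end of an empty pipe this should return -1 after the timeout, and it should return that same fd once a byte has been written to the pipe.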

For the first disadvantage, epoll's solution lies in the epoll_ctl function. Each time a new event is registered on the epoll handle (by specifying EPOLL_CTL_ADD in epoll_ctl), the fd and its event are copied into the kernel at that point, rather than being copied again on every epoll_wait. epoll ensures that each fd is copied only once over its whole lifetime.

For the second disadvantage, epoll does not add current to the wait queue of each fd's device on every call, as select and poll do. Instead it hangs current only once, at epoll_ctl time (this one time is unavoidable), and assigns a callback function to each fd. When the device becomes ready and wakes up the waiters on its wait queue, this callback is invoked, and it adds the ready fd to a ready list. The job of epoll_wait is then simply to check whether the ready list contains any fd (it uses schedule_timeout() to sleep for a while and then check again, similar to step 7 of the select implementation).
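The effect of the ready list can be observed from user space: fds are registered once, and each later epoll_wait returns only the ready ones. The sketch below assumes two hypothetical helpers, watch_all and ready_fds, and a 16-entry event buffer chosen arbitrarily for the example.

```c
#include <assert.h>
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: register every fd once. Returns the epoll handle,
 * or -1 on error. */
int watch_all(const int *fds, int nfds)
{
    int epfd = epoll_create(1);
    if (epfd < 0)
        return -1;
    for (int i = 0; i < nfds; i++) {
        struct epoll_event ev = {0};
        ev.events = EPOLLIN;       /* level-triggered by default */
        ev.data.fd = fds[i];
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fds[i], &ev) < 0) {
            close(epfd);
            return -1;
        }
    }
    return epfd;
}

/* Hypothetical helper: without blocking, store up to cap ready fds in out.
 * Returns the number stored, or -1 on error (cap must be positive). */
int ready_fds(int epfd, int *out, int cap)
{
    struct epoll_event evs[16];
    assert(out != NULL);
    if (cap > 16)
        cap = 16;                  /* buffer size of this sketch only */

    /* Only fds already on the ready list come back; fds that are not
     * ready cost nothing here, however many were registered. */
    int n = epoll_wait(epfd, evs, cap, 0);
    for (int i = 0; i < n; i++)
        out[i] = evs[i].data.fd;
    return n;
}
```

With three pipes registered and one byte written to the second, ready_fds should return 1 with out[0] equal to the second pipe's read end. Calling it again without re-registering anything gives the same result, because the data is still unread and the registration is level-triggered.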

For the third disadvantage, epoll has no such limit. The upper bound on the number of fds it supports is the maximum number of files that can be opened, which is generally much larger than 2048; for example, it is about 100,000 on a machine with 1 GB of memory. The exact number can be checked with cat /proc/sys/fs/file-max. In general it depends heavily on the amount of system memory.
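The same value can be read from a program. This is a small sketch assuming a Linux system with /proc mounted; system_file_max is a hypothetical helper name.

```c
#include <stdio.h>
#include <sys/select.h>

/* Hypothetical helper: read the system-wide open-file limit from
 * /proc/sys/fs/file-max. Returns -1 if the file cannot be read or parsed. */
long long system_file_max(void)
{
    FILE *f = fopen("/proc/sys/fs/file-max", "r");
    if (f == NULL)
        return -1;

    long long value = -1;
    if (fscanf(f, "%lld", &value) != 1)
        value = -1;
    fclose(f);
    return value;
}

/* For comparison: the compile-time capacity of select's fd_set. */
int select_fd_capacity(void)
{
    return FD_SETSIZE;
}
```

Printing both values side by side shows the gap that the third disadvantage refers to. The numbers depend on the machine, so none are given here.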

Summary:

(1) The select and poll implementations must repeatedly poll the entire fd set until a device is ready, possibly alternating between sleeping and waking several times along the way. epoll also calls epoll_wait to repeatedly poll the ready list, and it too may alternate between sleeping and waking many times. The difference is that when a device becomes ready, it invokes the callback function, which puts the ready fd on the ready list and wakes up the process sleeping in epoll_wait. Although both approaches alternate between sleeping and waking, select and poll traverse the entire fd set each time they are awake, whereas epoll only has to check whether the ready list is empty, which saves a lot of CPU time. This is the performance gain that the callback mechanism brings.

(2) Each call to select or poll copies the fd set from user space to kernel space and hangs current on the device wait queues once. epoll copies each fd only once and hangs current on a wait queue only once (at the start of epoll_wait; note that this wait queue is not a device wait queue but one defined inside epoll). This also saves considerable overhead.

References:

http://www.cnblogs.com/apprentice89/archive/2013/05/09/3070051.html

http://www.linuxidc.com/Linux/2012-05/59873p3.htm

http://xingyunbaijunwei.blog.163.com/blog/static/76538067201241685556302/

http://blog.csdn.net/kkxgx/article/details/7717125

https://banu.com/blog/2/how-to-use-epoll-a-complete-example-in-c/epoll-example.c