Article | Cao Jiajun

Senior server development engineer of NetEase Smart Enterprise

Background

Introduction to Redis Cluster

Redis cluster is the official clustering solution provided by Redis. It adopts a decentralized architecture in which nodes exchange state with each other through the gossip protocol. Redis cluster shards data across nodes: the cluster has 16384 built-in hash slots, and each key belongs to exactly one of them. A key's hash value is calculated with the CRC16 algorithm, and its slot is obtained by taking that value modulo 16384. Redis cluster also supports dynamically adding nodes, dynamically migrating slots, automatic failover, and so on.
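The slot calculation can be sketched in a few lines of Python. This is only an illustration: the function names `hash_tag` and `key_slot` are made up here, and the hash-tag rule (hashing only the text inside the first non-empty `{...}`) comes from the Redis Cluster specification rather than from this article. Python's `binascii.crc_hqx` with an initial value of 0 computes the CRC-16/XMODEM variant that Redis cluster uses.

```python
import binascii

SLOT_COUNT = 16384


def hash_tag(key: bytes) -> bytes:
    """Return the part of the key that is hashed, honouring {hash tags}."""
    start = key.find(b"{")
    if start != -1:
        end = key.find(b"}", start + 1)
        # Only a non-empty tag counts; otherwise the whole key is hashed.
        if end != -1 and end > start + 1:
            return key[start + 1:end]
    return key


def key_slot(key: str) -> int:
    """Map a key to one of the 16384 hash slots."""
    data = hash_tag(key.encode("utf-8"))
    # crc_hqx with initial value 0 is CRC-16/XMODEM (polynomial 0x1021).
    return binascii.crc_hqx(data, 0) % SLOT_COUNT


print(key_slot("foo"))  # 12182
```

Keys that share a hash tag, such as `{user1000}.following` and `{user1000}.followers`, land in the same slot, which is what makes multi-key commands possible on a cluster.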

This architecture requires the client to establish a connection directly with each node in the Redis cluster. When nodes are added, a node goes down, a failover happens, or slots are migrated, the client must be able to update its local slot mapping table through the Redis cluster protocol and handle ASK/MOVED redirections. For this reason, a client that implements the Redis cluster protocol is generally called a smart Redis client.
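To make the redirection handling concrete, here is a minimal sketch of what a smart client does with a redirection error. The error text format (`MOVED <slot> <host>:<port>`) is the one defined by the Redis cluster protocol; the names `Redirect`, `parse_redirect` and `apply_redirect` are hypothetical and not taken from any particular client.

```python
from typing import Dict, NamedTuple, Optional, Tuple


class Redirect(NamedTuple):
    kind: str   # "MOVED" or "ASK"
    slot: int
    host: str
    port: int


def parse_redirect(error: str) -> Optional[Redirect]:
    """Parse 'MOVED 3999 127.0.0.1:6381' style errors; return None for anything else."""
    parts = error.split(" ")
    if len(parts) != 3 or parts[0] not in ("MOVED", "ASK"):
        return None
    host, _, port = parts[2].rpartition(":")
    return Redirect(parts[0], int(parts[1]), host, int(port))


def apply_redirect(slot_table: Dict[int, Tuple[str, int]], redirect: Redirect) -> None:
    """MOVED is permanent, so the local slot table is updated.
    ASK is a one-off during slot migration, so the table is left untouched
    (the client retries on the target node after sending ASKING)."""
    if redirect.kind == "MOVED":
        slot_table[redirect.slot] = (redirect.host, redirect.port)
```

Every client language has to carry this bookkeeping, which is exactly the work that a proxy removes from the client.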

A Redis cluster can be built with a little over 100 primary nodes (beyond that, the overhead of the gossip protocol becomes too high and the cluster may become unstable). Assuming about 10 GB per node (too much memory in a single instance may degrade performance), a single cluster can hold roughly 100 × 10 GB ≈ 1 TB at most.

Problem

Redis cluster has many advantages (such as the ability to build large-capacity clusters, good performance, and flexible scaling). However, when a project wants to migrate from Redis to Redis cluster, the client side faces a lot of rework, along with a lot of testing and new risks. For stable online projects, this is undoubtedly a huge cost.

Requirements

To make it easier to migrate services to Redis cluster, the ideal is a client SDK whose API is fully compatible with both Redis and Redis cluster. The RedisTemplate provided by Spring is a good implementation of this. For projects that do not use Spring's RedisTemplate, however, many clients expose inconsistent APIs for Redis and Redis cluster (the popular Java client Jedis is one example), which increases the workload and complexity of migration. In that case, a Redis cluster proxy is a good choice: with a proxy, you can operate a Redis cluster as if it were a single Redis instance, and the client program does not need to be modified.

Of course, adding a proxy layer inevitably costs some performance. However, as a stateless service, the proxy can in theory be scaled horizontally, and because the proxy layer reduces the number of connections to the backend Redis servers, it can even improve the overall throughput of the Redis cluster in some extreme scenarios. In addition, a proxy lets us do many additional things:

- For example, you can implement sharding logic in the proxy layer. When a single Redis cluster no longer meets the requirements (memory/QPS), the proxy layer lets you access multiple Redis clusters transparently at the same time.

- As another example, you can implement dual-write logic in the proxy layer. When migrating or splitting a cache-type Redis, you then do not need a tool such as redis-migrate-tool for a full migration; dual writes applied as needed are enough to complete the migration.

- In addition, because the proxy implements the Redis protocol, Redis commands can be implemented in the proxy layer on top of other storage media, so that the proxy presents itself externally as a Redis service. A typical scenario is hot and cold storage separation.
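The sharding and dual-write ideas can be combined in one small routing function. The sketch below is an assumption-laden illustration, not camellia's actual rule format: the `Router` class, the `WRITE_COMMANDS` set and the CRC32-based shard choice are all made up for the example.

```python
import binascii
from typing import List

# Hypothetical classification; a real proxy would cover the full command set.
WRITE_COMMANDS = {"SET", "DEL", "EXPIRE", "ZADD", "HSET"}


class Router:
    def __init__(self, shards: List[List[str]]):
        # shards[i] lists the backends of shard i.
        # Reads go to the first backend, writes go to all of them (dual write).
        self.shards = shards

    def targets(self, command: str, key: str) -> List[str]:
        # Sharding: pick a shard from a hash of the key.
        index = binascii.crc32(key.encode("utf-8")) % len(self.shards)
        backends = self.shards[index]
        if command.upper() in WRITE_COMMANDS:
            return list(backends)   # dual write: every backend of the shard
        return backends[:1]         # read: primary backend only


router = Router([["cluster-a-old", "cluster-a-new"], ["cluster-b"]])
print(router.targets("SET", "user:1"))
print(router.targets("GET", "user:1"))
```

Because the decision is made per command inside the proxy, the client keeps talking to what looks like a single Redis instance while data is being split or migrated behind it.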

Features

For the reasons and requirements above, we developed a middleware called camellia redis proxy, built on netty. It supports the following features:

- Support setting a password

- Support proxying to both ordinary Redis and Redis cluster

- Support configurable custom sharding logic (proxying to multiple Redis/Redis cluster deployments)

- Support configurable custom dual-write logic (the proxy recognizes whether a command is a read or a write; once dual write is configured, write commands are sent to multiple backends at the same time)

- Support external plug-ins, so that the protocol parsing module can be reused (currently this includes the camellia redis proxy hbase plug-in, which implements hot and cold separation for zset commands)

- Support online configuration changes (requires introducing the Camellia dashboard)

- Support multiple businesses sharing one set of proxy clusters, for example business A configured with forwarding rule 1 and business B with forwarding rule 2 (the business type must be set through a client command when the Redis connection is established)

- Provide a spring boot starter, so a proxy cluster can be set up quickly with three lines of code


How to improve performance?

From the moment a client sends a request to camellia redis proxy until the reply is returned, the request goes through the following steps:

- Upstream protocol parsing (IO read/write)

- Forwarding rule matching (in-memory computation)

- Request forwarding (IO read/write)

- Receiving and decoding the reply from the backend Redis (IO read/write)

- Sending the backend Redis reply back to the client (IO read/write)

As you can see, a proxy spends much of its work on network IO. To improve the proxy's performance, the following work has been done:

Multithreading

We know that Redis itself is single-threaded, but a proxy can use multiple threads to make full use of multi-core CPUs. Too many threads, however, cause unnecessary context switches and degrade performance. Camellia redis proxy uses netty's multithreaded reactor model to ensure server-side processing performance; by default it starts as many worker threads as there are CPU cores. In addition, if the server's network card supports multiple queues, enabling them avoids load imbalance between CPU cores. If it does not, bind the business process to cores other than CPU0, so that CPU0 can concentrate on handling network card interrupts without being affected too much by the business process.

Asynchronous and non-blocking

The asynchronous non-blocking IO model is generally superior to the synchronous blocking IO model. Of the five steps above, everything except in-memory computation such as forwarding rule matching is asynchronous and non-blocking, which ensures that a block in one step does not affect the whole service.

Pipeline

We know that the Redis protocol supports pipelining, which effectively reduces network overhead. Camellia redis proxy makes full use of this feature in two ways:

- When parsing the upstream protocol, it tries to parse multiple commands at once, so that forwarding rules can be matched in batches

- When forwarding to backend Redis nodes, it tries to submit commands in batches. Besides aggregating commands from the same client connection, it can also aggregate commands from different client connections when they are forwarded to the same target Redis

Of course, all these batch and aggregate operations need to ensure one-to-one correspondence between requests and responses.
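One simple way to keep requests and responses paired up while aggregating is a FIFO queue per backend connection, relying on the fact that Redis answers the commands on one connection in the order it received them. The sketch below illustrates that idea only; `BackendConnection` and its methods are hypothetical names, and the real proxy's bookkeeping may differ.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class BackendConnection:
    """Aggregates commands from many clients onto one backend connection."""

    def __init__(self) -> None:
        # (client_id, request_seq) in the exact order commands were queued.
        self.pending: Deque[Tuple[str, int]] = deque()
        self.outbox: List[bytes] = []

    def submit(self, client_id: str, seq: int, command: bytes) -> None:
        self.pending.append((client_id, seq))
        self.outbox.append(command)

    def flush(self) -> bytes:
        """Return everything queued so far as a single batch to write."""
        batch = b"".join(self.outbox)
        self.outbox.clear()
        return batch

    def on_replies(self, replies: List[bytes]) -> Dict[str, List[Tuple[int, bytes]]]:
        """Hand each reply to the oldest request still waiting for one."""
        routed: Dict[str, List[Tuple[int, bytes]]] = {}
        for reply in replies:
            client_id, seq = self.pending.popleft()
            routed.setdefault(client_id, []).append((seq, reply))
        return routed
```

Commands from clients `c1` and `c2` can then share one write to the backend, and each reply still finds its way back to the right client and the right position in that client's pipeline.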

TCP packet splitting and large packet processing

Whether parsing the upstream protocol or the replies from the backend Redis, and especially with large packets, a message may be split across several TCP packets. Using an appropriate checkpoint mechanism in that case effectively reduces repeated decoding and improves performance.
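To illustrate what a checkpoint buys, here is a minimal incremental decoder for one Redis command (a RESP array of bulk strings). It is a sketch under stated assumptions, not the proxy's decoder: `CommandDecoder` is a made-up name, error handling is omitted, and only this one message shape is supported. The point is that the array length and the arguments already decoded are remembered between calls, so a late TCP fragment never forces the earlier bytes to be parsed again.

```python
from typing import List, Optional


class CommandDecoder:
    """Incremental decoder for one RESP command: *N followed by N bulk strings."""

    def __init__(self) -> None:
        self.buffer = bytearray()
        self.expected: Optional[int] = None  # checkpoint: array length, once known
        self.args: List[bytes] = []          # checkpoint: arguments decoded so far

    def feed(self, chunk: bytes) -> Optional[List[bytes]]:
        """Add bytes from the socket; return the command once it is complete."""
        self.buffer += chunk
        if self.expected is None:
            end = self.buffer.find(b"\r\n")
            if end == -1:
                return None
            self.expected = int(self.buffer[1:end])  # skip the leading '*'
            del self.buffer[:end + 2]
        while len(self.args) < self.expected:
            end = self.buffer.find(b"\r\n")
            if end == -1:
                return None
            length = int(self.buffer[1:end])         # skip the leading '$'
            total = end + 2 + length + 2             # header + payload + CRLF
            if len(self.buffer) < total:
                return None                          # wait; earlier args stay decoded
            self.args.append(bytes(self.buffer[end + 2:end + 2 + length]))
            del self.buffer[:total]
        command, self.args, self.expected = self.args, [], None
        return command
```

Feeding `*3\r\n$3\r\nSET\r\n$3\r\nkey\r\n$5\r\nhello\r\n` in three arbitrary fragments returns `None` twice and then `[b"SET", b"key", b"hello"]`. Netty's `ReplayingDecoder` offers a `checkpoint()` method built around the same idea.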

Exception handling and exception log merging

If exceptions are not handled effectively, server performance drops rapidly when they occur. Imagine a scenario where 90% of the traffic is configured to be forwarded to cluster A and 10% to cluster B. If cluster B goes down, we expect 90% of client requests to execute normally and 10% to fail. In practice, far more than 10% of requests may fail, for several reasons:

- A sudden crash at the operating system level of a backend may not be noticed immediately by the proxy layer (no TCP FIN packet is received), so a large number of requests keep waiting for replies. The proxy layer is not blocked, but the client sees request timeouts

- When the proxy tries to forward requests to cluster B, the reconnection attempts to cluster B may slow down the whole process

- The flood of exception logs caused by the outage may degrade server performance (this is easy to overlook)

- A pipeline submits 99 requests to cluster A and 1 request to cluster B, but because cluster B is unavailable, the reply for that one request is delayed or very slow, so from the client's point of view all 100 requests fail

When dealing with the above problems, camellia redis proxy adopts the following strategies:

- Set a fail-fast degradation policy for abnormal backend nodes, to avoid slowing down the whole service

- Manage exception logs centrally and merge their output, reducing the impact of exception logs on server performance without losing exception information

- Add periodic liveness probes for backend Redis, to avoid long-lasting business exceptions caused by failing to notice an outage immediately
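The first two strategies can be sketched as follows. This is an illustration under assumptions, not camellia's implementation: the class names `FailFastGuard` and `MergedErrorLog`, the threshold and the cooldown are all invented for the example. The guard rejects requests to a backend immediately after repeated failures, and the log counts identical exceptions so that one summary line replaces thousands of stack traces.

```python
import time
from collections import Counter
from typing import Callable, Dict, List


class FailFastGuard:
    def __init__(self, threshold: int = 5, cooldown_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.threshold = threshold
        self.cooldown = cooldown_seconds
        self.clock = clock
        self.failures: Dict[str, int] = {}      # backend -> consecutive failures
        self.open_until: Dict[str, float] = {}  # backend -> fail fast until this time

    def allow(self, backend: str) -> bool:
        """False means: fail the request right away instead of waiting on the backend."""
        until = self.open_until.get(backend)
        return until is None or self.clock() >= until

    def record_failure(self, backend: str) -> None:
        self.failures[backend] = self.failures.get(backend, 0) + 1
        if self.failures[backend] >= self.threshold:
            self.open_until[backend] = self.clock() + self.cooldown

    def record_success(self, backend: str) -> None:
        # Called on a normal reply or a successful liveness probe.
        self.failures[backend] = 0
        self.open_until.pop(backend, None)


class MergedErrorLog:
    def __init__(self) -> None:
        self.counts: Counter = Counter()

    def record(self, backend: str, error: Exception) -> None:
        self.counts[(backend, type(error).__name__)] += 1

    def drain(self) -> List[str]:
        """Called periodically: one line per (backend, error type) with its count."""
        lines = [f"{backend} {name} x{count}"
                 for (backend, name), count in sorted(self.counts.items())]
        self.counts.clear()
        return lines
```

With a guard like this in front of cluster B, the single request in the 99 + 1 pipeline example fails at once with an error reply, so the 99 requests for cluster A are not held back by it.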

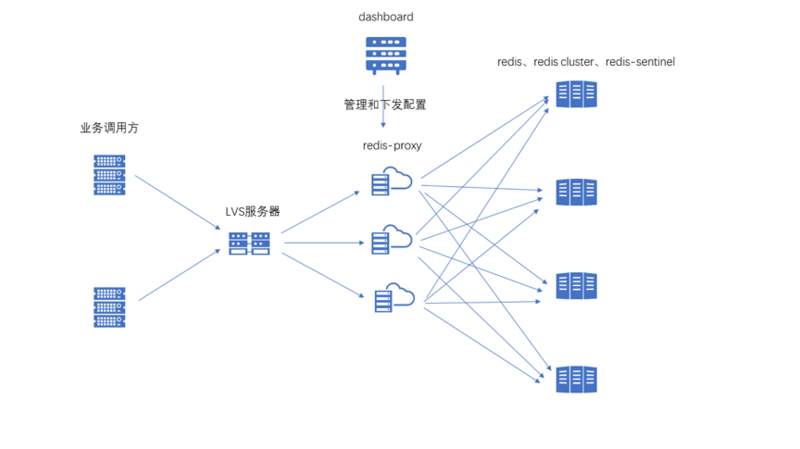

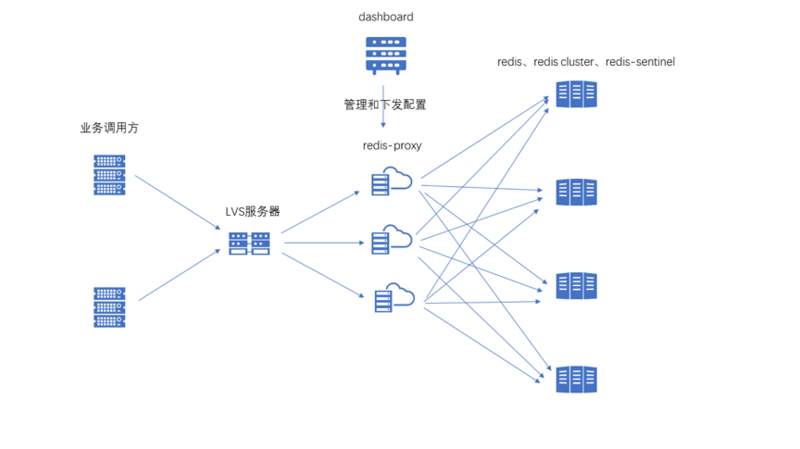

Deployment architecture

As a stateless service, the proxy can be scaled horizontally. To make the service highly available, at least two proxy nodes should be deployed. Clients want to access the proxy as if it were a single Redis node, so you can put an LVS layer in front of the proxy layer. The deployment architecture then looks as follows:

![]()

Of course, there is another option: proxy nodes can be registered with a registry such as zk/Eureka/Consul. The client pulls and listens to the proxy list, and then accesses each proxy just like a single Redis node. Taking Jedis as an example, simply replace JedisPool with RedisProxyJedisPool, which encapsulates the registration and discovery logic, and you can use the proxy just as you would access ordinary Redis. The deployment architecture then looks as follows:

![]()
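The client-side half of the registry approach boils down to keeping a live list of proxy addresses and spreading connections across it. The sketch below shows that idea in Python with round-robin selection; it is not the logic of RedisProxyJedisPool, and `ProxySelector`, `on_registry_update` and the addresses are hypothetical.

```python
import itertools
import threading
from typing import List, Tuple

Address = Tuple[str, int]


class ProxySelector:
    def __init__(self, proxies: List[Address]) -> None:
        self._lock = threading.Lock()
        self._proxies = list(proxies)
        self._counter = itertools.count()

    def on_registry_update(self, proxies: List[Address]) -> None:
        """Callback for the registry listener: replace the known proxy list."""
        with self._lock:
            self._proxies = list(proxies)

    def next_proxy(self) -> Address:
        """Pick the next proxy in round-robin order."""
        with self._lock:
            if not self._proxies:
                raise RuntimeError("no proxy available")
            return self._proxies[next(self._counter) % len(self._proxies)]


selector = ProxySelector([("10.0.0.1", 6380), ("10.0.0.2", 6380)])
print(selector.next_proxy())  # ('10.0.0.1', 6380)
print(selector.next_proxy())  # ('10.0.0.2', 6380)
```

Each address returned is then used exactly like the address of an ordinary single-node Redis.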

Application scenarios

- You need to migrate from Redis to Redis cluster, but the client code is not easy to modify

- Clients connect directly to the Redis cluster, resulting in too many connections on the cluster servers and degraded server performance

- The capacity/QPS of a single Redis/Redis cluster does not meet business requirements, so the sharding function of camellia redis proxy is used

- Splitting and migrating cache-type Redis/Redis cluster deployments, using the dual-write function of camellia redis proxy

- Disaster recovery for Redis/Redis cluster, using the dual-write function

- Business scenarios that mix sharding and dual write

- Developing custom plug-ins based on the plug-in function of camellia redis proxy


Conclusion

Redis cluster is the officially recommended cluster solution, and more and more projects have migrated or are migrating to it. Camellia redis proxy was born against this background. In particular, if you are a Java developer, camellia also provides solutions such as CamelliaRedisTemplate. CamelliaRedisTemplate has the same API as ordinary Jedis, provides features that native JedisCluster does not support, such as mget/mset/pipeline, and provides sharding and dual-write features consistent with those of camellia redis proxy.

To give back to the community, camellia has been officially open-sourced. To learn more about the camellia project, visit it on GitHub:

https://github.com/netease-im...

If you have good ideas or proposals, or any questions, please open an issue!

About the author

Cao Jiajun, senior server development engineer at NetEase Smart Enterprise. Joined NetEase after completing graduate studies at the Chinese Academy of Sciences and has since been responsible for IM server development at NetEase Yunxin.

Author: Netease Yunxin

Link: https://segmentfault.com/a/1190000023210717

Source: SegmentFault

The copyright belongs to the author. For commercial reproduction, please contact the author for authorization, and for non-commercial reproduction, please indicate the source.