In the Internet+ era, message volumes have risen sharply, and the diversity of message formats poses major challenges for instant messaging cloud service platforms. What architecture and characteristics lie behind a highly concurrent IM system?

This content is compiled from internal presentation materials shared by the chief architect of Netease Yunxin.

Key points of this article:

- Netease Yunxin overall architecture analysis

- Yunxin client connection and access point management

- Service-oriented architecture and high availability

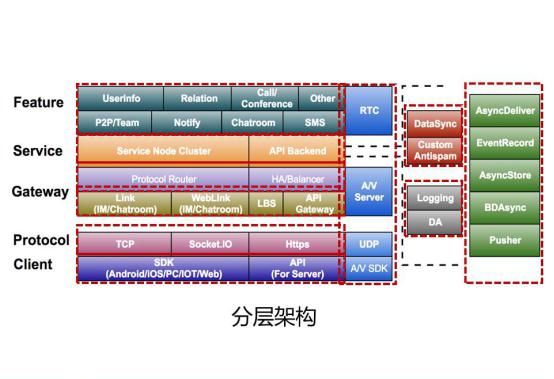

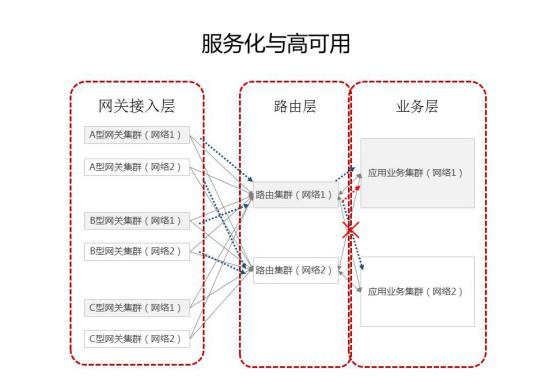

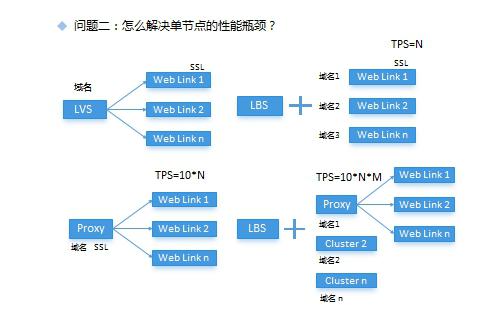

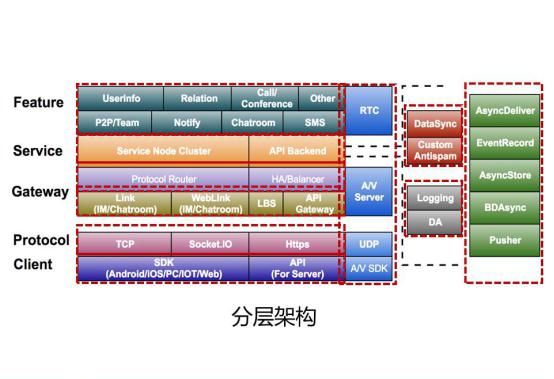

Netease IM Cloud Layered Architecture Diagram Analysis

1. Client SDK (bottom layer): covers multiple platforms, including Android, iOS, Windows PC desktop, the web, and embedded devices. The SDK layer uses two network protocols: TCP at Layer 4, and Socket.IO at Layer 7, which is used specifically to provide long-connection capability in the Web SDK. In addition to the SDKs integrated into applications, the platform provides an HTTP-based API for third-party servers to call. Finally, the A/V SDK is a UDP-based real-time audio and video SDK used to implement voice and video calls over the network.


(Netease IM Cloud Hierarchical Architecture Diagram)

2. Gateway layer: provides direct client access and maintains long connections with the server. The Web SDK connects directly to the WebLink service, a long-connection service built on the Socket.IO protocol, while the TCP-based Link service handles direct connections from the Android, iOS, PC, and other client SDKs. A key function of both Link and WebLink is managing all client long connections. Below these sit the HTTP-based gateways, including the API service and the LBS service: the LBS service helps the client SDK select the most suitable gateway access point to optimize network efficiency, and the API service directly handles business requests from third-party servers.

3. HA layer: above the gateway access layer is the HA layer. The gateway access layer serves client connections directly, and the HA layer sits between it and the service layer to decouple the two and provide high availability and easy scaling. For Link and WebLink, the two services that maintain client long connections, Yunxin implements HA with a protocol routing service that distributes business requests. The routing layer forwards client requests to the appropriate business nodes according to predefined rules. When the business cluster is scaled out, the routing service immediately discovers the new available nodes and forwards requests to them. When a service node is found to be faulty, the routing layer marks it and isolates it so that it can be taken offline and replaced.

4. Business node cluster: above the HA layer is the business node cluster, called the App service. It handles specific client requests, and its back end connects directly to DB, cache, and other basic services. The nodes in this cluster are lightweight and stateless. In production, Yunxin deploys this cluster across network environments: for example, a set of business nodes is spread across two machine rooms in the same city, and the routing layer in front distributes business requests among them. Normally the two sides act as hot standbys for each other and share online traffic evenly. When a single network environment or its infrastructure fails, the routing service detects it immediately, marks the compute nodes in that environment offline, and forwards all online traffic to the cluster that is still working, which improves overall service availability. Operations tools such as the monitoring platform dynamically track the real-time processing load and capacity usage of business nodes. When processing load reaches a preset watermark, an alarm is raised immediately, and operations staff can quickly scale out the business node cluster through the automated deployment platform.

5. Business layer: includes key functions such as core one-to-one chat messages, group chat messages, chat rooms, and notifications; user information hosting and special relationship management; API-oriented services such as SMS, callback calls, and special-line conferencing; and real-time audio, video, and live streaming.

The more important functions, broken out from the service layer, are listed on the right. They include third-party data synchronization with developer applications, personalized content moderation support, super group services, login and login event logs, roaming messages and cloud message history, push services, and so on.

Netease IM Cloud Deployment Topology

The simplified deployment topology below gives a preliminary overview of Yunxin's overall technical system. The client, on the far right, obtains the list of gateway access points from the LBS service, establishes a long connection with long-connection servers such as Link and WebLink, and performs RPC operations over it. All client requests are forwarded through the routing layer to the back-end APP layer. The APP layer processes synchronous requests and returns the results in real time, and hands asynchronous tasks, such as delivering messages to large groups, push, storing cloud message history, and third-party data synchronization, to asynchronous workers through the queue service. The API interface at the bottom works similarly: it serves call requests directly from third-party servers, and its back end consists of various independent services such as callback calls and SMS. All API back-end requests also generate logs. Like the APP logs, these are collected onto the big data platform through the log collection platform, where the data is stored on HDFS as the source for statistics and analysis, and is also imported into stores such as HBase for log retrieval and secondary analysis.
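A minimal sketch of that synchronous/asynchronous split at the APP layer, assuming Python's in-process `queue.Queue` as a stand-in for the queue service and a plain list as a stand-in for log collection. The handler name and task names are hypothetical, and the article does not say which queue product Yunxin uses. The handler returns its synchronous result immediately and leaves fan-out, push, history storage, and third-party sync to a worker.

```python
import queue

task_queue = queue.Queue()  # stand-in for the queue service (assumption)
request_log = []            # stand-in for the log collection pipeline (assumption)

# Hypothetical task names for the asynchronous work listed in the text.
ASYNC_TASKS = ("group_fanout", "push", "history_store", "third_party_sync")

def handle_group_message(sender: str, group: str, text: str) -> dict:
    """Hypothetical APP handler: reply synchronously, defer the heavy work."""
    request_log.append(("group_message", sender, group))  # every request is logged
    for task in ASYNC_TASKS:
        task_queue.put((task, group, text))
    return {"status": "accepted"}  # synchronous result goes straight back to the client

def drain_tasks() -> list:
    """Hypothetical async worker: take every queued task and process it."""
    processed = []
    while True:
        try:
            task, group, text = task_queue.get_nowait()
        except queue.Empty:
            break
        processed.append(task)  # real fan-out, push, storage, or sync would run here
        task_queue.task_done()
    return processed
```

Keeping the slow, member-count-dependent work off the request path is what lets the synchronous reply stay fast even for large groups.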


(Netease IM Cloud Deployment Topology Diagram)

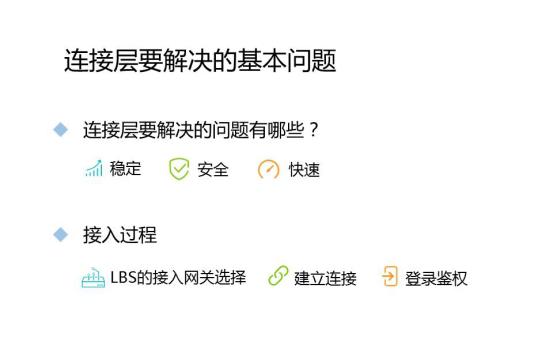

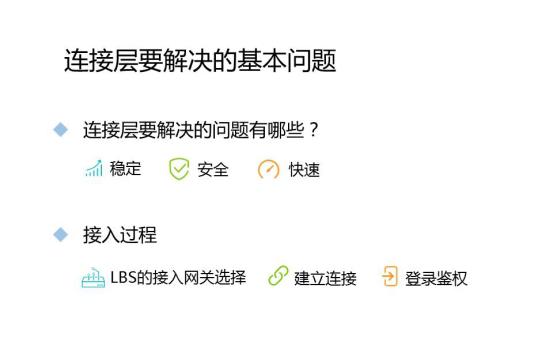

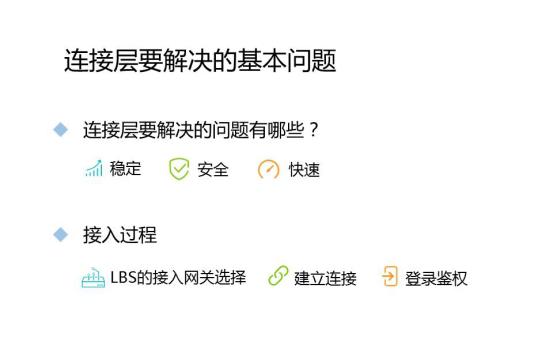

Optimization Practice of High Concurrency IM System Connection Layer

Connection management is the most important service in instant messaging. Fast message delivery presupposes a stable connection between client and server, so connection management can be regarded as the cornerstone of Yunxin's service stability. What is the most important problem the gateway access layer must solve? At its core, it is still stability, security, and speed.


How is stability ensured? The NetEase Yunxin SDK uses a long-connection mechanism, detects disconnection through heartbeats, and reconnects automatically. The SDK also includes extensive optimizations for weak network environments such as mobile networks. It uses TCP between client and server on mobile and PC, and the Socket.IO protocol on the Web, which provides a long connection while also solving browser compatibility issues.
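Heartbeat-based disconnection detection plus automatic reconnection can be sketched as below. The 30-second interval, the two-missed-heartbeats rule, and the capped exponential backoff are assumptions for illustration; the article does not state the values or the retry schedule used by the Yunxin SDK.

```python
HEARTBEAT_INTERVAL = 30.0  # seconds; hypothetical value
MAX_MISSED = 2             # hypothetical: declare the link dead after 2 missed acks

class HeartbeatMonitor:
    """Tracks the last heartbeat acknowledgement from the server."""

    def __init__(self, now: float):
        self.last_ack = now

    def on_ack(self, now: float) -> None:
        # The server answered a heartbeat, so the connection is alive.
        self.last_ack = now

    def is_dead(self, now: float) -> bool:
        # Silence longer than the allowed number of intervals means disconnected.
        return now - self.last_ack > HEARTBEAT_INTERVAL * MAX_MISSED

def reconnect_delays(attempts: int, base: float = 1.0, cap: float = 30.0) -> list:
    """Capped exponential backoff between automatic reconnection attempts."""
    return [min(cap, base * 2 ** i) for i in range(attempts)]
```

Capping the backoff keeps a client on a flaky mobile network retrying at a steady rate instead of waiting ever longer.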

How is security achieved? Yunxin requires all data transmitted over the public network to be encrypted. Establishing a connection between the SDK and the server involves a complex key negotiation process. First, the client generates a one-time encryption key, encrypts it with asymmetric encryption, and sends it to the server. The server decrypts it and retains the key in the session information of the long connection, where it is used to encrypt subsequent data. This is stream encryption, which can effectively prevent man-in-the-middle attacks, packet replay, and similar attacks.
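The negotiation steps above can be walked through in a toy form. This sketch swaps in textbook RSA over two Mersenne primes for the asymmetric step and a SHA-256 counter keystream for the stream cipher; both are assumptions chosen because they fit in the standard library, neither is secure, and the article does not name the algorithms Yunxin actually uses. Because the keystream position advances with every packet, a replayed ciphertext is decrypted against a different part of the keystream and no longer yields the original bytes.

```python
import hashlib
import secrets

# Toy RSA key pair from two Mersenne primes; illustration only, NOT secure.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # server's private exponent (Python 3.8+)

def rsa_encrypt(message: bytes) -> int:
    """Client side: encrypt a short message with the server's public key (N, E)."""
    return pow(int.from_bytes(message, "big"), E, N)

def rsa_decrypt(ciphertext: int, length: int) -> bytes:
    """Server side: recover the message with the private exponent D."""
    return pow(ciphertext, D, N).to_bytes(length, "big")

class StreamCipher:
    """Toy stream cipher: keystream blocks are SHA-256(key || block index).
    The position persists across calls, so each packet uses fresh keystream."""

    def __init__(self, key: bytes):
        self.key = key
        self.pos = 0

    def process(self, data: bytes) -> bytes:
        block, offset = divmod(self.pos, 32)
        stream = bytearray()
        while len(stream) < offset + len(data):
            stream += hashlib.sha256(self.key + block.to_bytes(8, "big")).digest()
            block += 1
        self.pos += len(data)
        keystream = stream[offset:offset + len(data)]
        return bytes(d ^ k for d, k in zip(data, keystream))

# Client: create a one-time 128-bit session key and send it encrypted.
session_key = secrets.token_bytes(16)
handshake = rsa_encrypt(session_key)

# Server: decrypt it and keep it in the long connection's session state.
server_session = {"key": rsa_decrypt(handshake, 16)}

client_tx = StreamCipher(session_key)
server_rx = StreamCipher(server_session["key"])
print(server_rx.process(client_tx.process(b"login")))  # b'login'
```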

How is speed ensured? First, when choosing gateway access points, the LBS service helps clients find the access points best suited to them, for example by judging the physically nearest node from information such as the client IP. Second, once the connection is established, the long-connection mechanism greatly speeds up uplink and downlink messages. During transmission, Yunxin compresses data packets to reduce network overhead and speed up sending and receiving. For mobile scenarios such as frequent foreground/background switching and re-login, the SDK provides automatic login and reconnection, so the message channel is already established by the time the UI comes up. Finally, when selecting an access gateway, the SDK speeds up connection establishment by attempting connections in parallel (see diagram).
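One way to realize the parallel strategy is to race connection attempts to several gateway addresses and keep the first that succeeds. In this sketch `connect_fastest`, `fake_connect`, the addresses, and the limit of three concurrent attempts are hypothetical; a real client would open TCP sockets and close the losing connections.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def connect_fastest(addresses, connect, max_parallel=3):
    """Try up to max_parallel addresses at once; return (address, conn) of the first success."""
    candidates = list(addresses)[:max_parallel]
    if not candidates:
        raise ConnectionError("no candidate addresses")
    pool = ThreadPoolExecutor(max_workers=len(candidates))
    futures = {pool.submit(connect, addr): addr for addr in candidates}
    try:
        for fut in as_completed(futures):
            try:
                return futures[fut], fut.result()
            except OSError:
                continue  # this address failed; keep waiting for the others
        raise ConnectionError("all candidate addresses failed")
    finally:
        # Do not block on slower attempts; a real client would also close them.
        pool.shutdown(wait=False)

def fake_connect(addr):
    """Hypothetical connector with simulated latencies; 'gw-bad' always fails."""
    if addr == "gw-bad":
        raise OSError("connection refused")
    time.sleep({"gw-slow": 0.3, "gw-fast": 0.05}[addr])
    return f"conn:{addr}"

print(connect_fastest(["gw-slow", "gw-bad", "gw-fast"], fake_connect))
```

Racing trades a few extra connection attempts for a handshake latency close to that of the best gateway, without the client having to know in advance which one that is.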

The process of establishing a long connection between client and server

The first step of SDK access is to request the list of available access gateway addresses from the LBS service. The LBS service assigns addresses to clients based on various policy conditions; common ones are:

1. AppKey: all requests from a specific application can be directed to a specific set of access points, which supports the dedicated-server scheme;

2. Client IP: used to allocate access gateways near the client based on its geographical location, commonly used when configuring overseas nodes;

3. SDK version number: points clients within a specific version range to a specific gateway; intended as a compatibility scheme during upgrades between old and new versions, though there is no actual use case at present;

4. Specific environment identifiers, such as the intelligent customer service environment: used to point specific types of apps to specific gateways, meeting coarser-grained environment isolation requirements.
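The policy conditions above can be pictured as an ordered rule table evaluated against each LBS request, with the first match choosing the access point group. The rule order, field names, region codes, version cutoff, and addresses are all hypothetical; the article lists the conditions but not how Yunxin prioritizes or encodes them, and the IP-to-region lookup is left out.

```python
from dataclasses import dataclass

@dataclass
class LbsRequest:
    appkey: str
    region: str         # derived from the client IP by a geo lookup (not shown)
    sdk_version: tuple  # e.g. (6, 2, 0)
    env: str = ""       # environment identifier, empty if none

# Hypothetical rule table: (predicate, access point group), checked in order.
RULES = [
    (lambda r: r.appkey == "dedicated-app", ["link-dedicated-1.example:8080"]),  # exclusive servers
    (lambda r: r.env == "customer-service", ["link-cs-1.example:8080"]),         # environment isolation
    (lambda r: r.sdk_version < (5, 0, 0), ["link-legacy-1.example:8080"]),       # version compatibility
    (lambda r: r.region != "CN",                                                 # nearby overseas nodes
     ["link-overseas-1.example:8080", "link-overseas-2.example:8080"]),
]
DEFAULT_GROUP = ["link-1.example:8080", "link-2.example:8080"]

def allocate(req: LbsRequest) -> list:
    """Return the access point list for the first matching rule, else the default group."""
    for matches, group in RULES:
        if matches(req):
            return group
    return DEFAULT_GROUP
```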

After the LBS service returns the list of access gateway addresses, the client tries to connect to the addresses in the list in order. Strictly following this sequence (query LBS first, then connect) would make connection establishment slow, so to speed up access the SDK actually connects using the address list cached locally from the previous LBS request, while fetching a new list from LBS and caching it locally for next time. If every address in the list fails after one attempt, the default link address is used. If the default address also fails, network error code 415 or 408 is reported.
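The cached-list strategy with a default fallback might look like the following. `fetch_lbs` and `try_connect` are caller-supplied stand-ins, `DEFAULT_ADDRESS` is a placeholder, and the cache refresh runs inline after the connection attempt for simplicity; the article does not say whether the SDK refreshes in the background or how failures map to the 415/408 error codes.

```python
DEFAULT_ADDRESS = "link-default.example:8080"  # hypothetical built-in fallback

class AddressSelector:
    def __init__(self, fetch_lbs, try_connect):
        self.fetch_lbs = fetch_lbs      # () -> list of addresses from LBS (stand-in)
        self.try_connect = try_connect  # address -> connection, or None on failure
        self.cached = []                # list saved from the previous LBS request

    def _fetch(self) -> list:
        try:
            return list(self.fetch_lbs())
        except OSError:
            return []  # LBS unreachable: caller keeps whatever it had

    def connect(self):
        first_launch = not self.cached
        if first_launch:
            self.cached = self._fetch()  # nothing cached yet, so we must ask LBS now
        candidates = list(self.cached)   # otherwise reuse last time's list immediately
        try:
            for addr in candidates + [DEFAULT_ADDRESS]:
                conn = self.try_connect(addr)
                if conn is not None:
                    return addr, conn
            raise ConnectionError("all addresses, including the default, failed")
        finally:
            if not first_launch:
                # Refresh the cached list for the next connection attempt.
                self.cached = self._fetch() or self.cached
```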

Once it has a target address, the client tries to establish a TCP long connection. After the connection is established, it negotiates the encryption key with the server and sends the first authentication packet. Once authentication completes, the long connection is secure and valid: the client can send subsequent RPC requests, and the server can push message notifications over it. If key negotiation or authentication fails, the connection is treated as an illegal connection request and the server forcibly disconnects it.
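The server-side rule that a connection may carry RPCs only after key negotiation and authentication can be sketched as a small state machine. The state names, packet kinds, and the `verify_token` callback are hypothetical; the article does not describe the packet format.

```python
from enum import Enum, auto

class State(Enum):
    CONNECTED = auto()      # TCP established, nothing negotiated yet
    KEY_AGREED = auto()     # session key negotiated
    AUTHENTICATED = auto()  # first auth packet accepted; RPCs allowed
    CLOSED = auto()         # forcibly disconnected by the server

class Connection:
    def __init__(self, verify_token):
        self.state = State.CONNECTED
        self.verify_token = verify_token  # hypothetical credential check

    def on_packet(self, kind: str, payload=None) -> str:
        if self.state is State.CLOSED:
            return "ignored"
        if self.state is State.AUTHENTICATED:
            return f"handled {kind}"  # RPCs and notifications now allowed
        if self.state is State.CONNECTED and kind == "key_exchange" and payload:
            self.state = State.KEY_AGREED
            return "key agreed"
        if self.state is State.KEY_AGREED and kind == "auth" and self.verify_token(payload):
            self.state = State.AUTHENTICATED
            return "authenticated"
        # Anything else before authentication completes is an illegal connection.
        self.state = State.CLOSED
        return "disconnected"
```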

Finally, acceleration nodes. To achieve fast connections, the node closest to the client is given priority when allocating gateway access points; the acceleration node here is a special node provided for users.

The rationale for acceleration nodes lies in the lines that operators provide to individual users: whether mobile or wired, their quality always falls short of the network between IDC centers. Replacing the critical path of the user's overall link with inter-IDC network lines helps improve connection stability and speed.

Suppose a customer in the United States accesses a gateway access point in Hangzhou over a mobile network. Because the client is on a mobile network, the path to the Hangzhou server is very long and the intermediate hops are unpredictable; on entering China, traffic also has to cross the firewall. As a result, most direct connections either fail outright or disconnect frequently after connecting.

We provide multi-layer acceleration nodes: with an acceleration node added, the unpredictable portion of the user's overall link is replaced by a high-quality line, and the user's direct connection to a local acceleration node is usually much better.

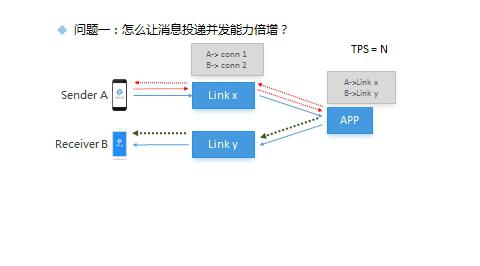

Next, consider the impact of different delivery modes on message delivery efficiency:

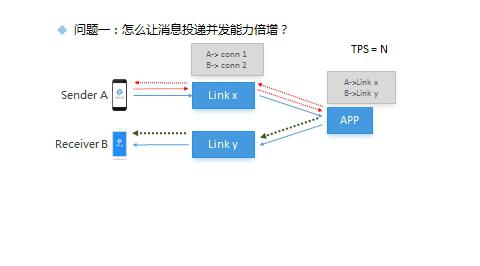

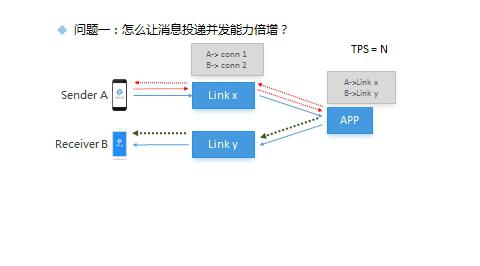

Question 1: How can the concurrency of message delivery be multiplied?


In this figure, the upper part represents point-to-point Link servers. When sender A sends a message, it is submitted through Link to the APP for processing. The APP looks up the Link server where receiver B is connected (Link y) and sends a downlink notification packet to Link y, which finds B's long connection and delivers the notification to the client. In this mode, all access points are equivalent for all users, and any user can connect to any server. For every message, the service layer must look up the Link server of each target receiver and send a notification packet to that server, so for a group message the business APP has to query the Links of all group members. This is a time-consuming operation whose cost grows with the number of receiving members. Chat rooms have very large numbers of members, so sending chat room messages this way quickly hits a performance bottleneck, and delivery latency becomes severe.

For broadcast Link servers, Yunxin follows one principle when assigning access points: members of the same chat room are allocated to the same group of access points as far as possible. Each Link maintains the set of long connections of all members in each room, and the App no longer maintains a mapping between individual users and Links, but rather the set of Links assigned to each room. When any member sends a chat room broadcast message, the message goes up to the App through Link; the App only needs to find the list of Link addresses assigned to that chat room and send one broadcast message to each. On receiving the downlink broadcast message, each Link distributes it locally. This is more than an order of magnitude more efficient than the point-to-point mode.
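Counting what the App has to send makes the gap between the two modes concrete. In this sketch, with hypothetical data structures rather than Yunxin's code, the point-to-point path needs one lookup and one packet per member, while the broadcast path keeps only a room-to-Links set, sends one packet per Link, and lets each Link fan out to its local connections.

```python
from collections import defaultdict

def p2p_send(members, user_to_link):
    """Point-to-point: one Link lookup and one notification packet per member."""
    return [(user_to_link[m], m) for m in members]

class BroadcastLink:
    """Hypothetical broadcast Link holding each room's local long connections."""

    def __init__(self):
        self.rooms = defaultdict(set)  # room id -> user ids connected to this Link

    def deliver(self, room, msg):
        # Local fan-out on the Link; the App is not involved per member.
        return {user: msg for user in self.rooms[room]}

def broadcast_send(room, msg, room_to_links):
    """Broadcast: the App sends one packet to each Link assigned to the room."""
    packets, delivered = 0, {}
    for link in room_to_links[room]:
        packets += 1
        delivered.update(link.deliver(room, msg))
    return packets, delivered

# 3000 chat room members placed on 3 Links.
links = [BroadcastLink() for _ in range(3)]
members = [f"u{i}" for i in range(3000)]
for i, m in enumerate(members):
    links[i % 3].rooms["room-1"].add(m)

packets, delivered = broadcast_send("room-1", "hi", {"room-1": links})
print(packets, len(delivered))               # 3 3000
user_to_link = {m: f"link-{i % 3}" for i, m in enumerate(members)}
print(len(p2p_send(members, user_to_link)))  # 3000
```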

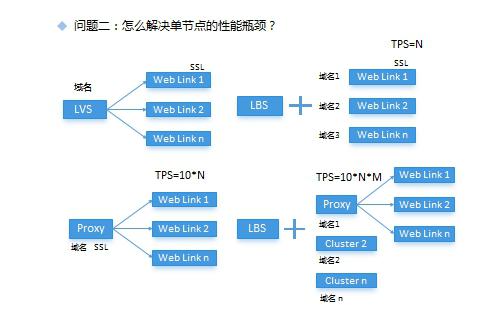

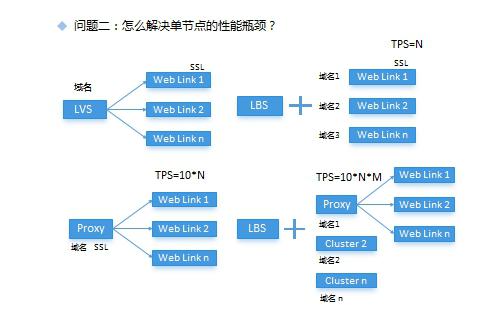

Question 2: How can the performance bottleneck of a single node be solved?

Having covered the difference between point-to-point and broadcast Links, let's look back at how Yunxin's proxy scheme for the Socket.IO-based WebLink evolved and was optimized.

Before that, two key points about WebLink should be emphasized. First, WebLink is based on the Socket.IO protocol, and to secure the data channel, Yunxin encrypts it with HTTPS. Second, because HTTPS is required, an independent domain name must be provided.


Figure 1 shows the earliest scheme. The backend WebLink nodes accept connections and perform SSL encryption; multiple nodes are proxied by LVS in front, the domain name is bound to the LVS proxy, and Keepalived provides HA for the LVS proxy. Only one domain name is exposed publicly, but there are many nodes inside, and scaling out is transparent to the outside. The Web client simply connects directly to the single domain name, which is the most convenient and fastest approach for a single product, since the client can skip address allocation. The disadvantages stem from the single entry point. If it is hit by a DDoS attack, the only remedy is rebinding the domain name, which takes time to take effect and adds operational cost. In addition, for Yunxin's service, a single entry point sacrifices flexibility: all customers connect to the same entrance, so dedicated services, business isolation, and acceleration nodes cannot be implemented.

Then came the second scheme, which borrows the LBS allocation method from the Link service. SSL encryption is still done on the WebLink nodes, and each WebLink node gets its own domain name; the client obtains a suitable access point from the LBS service before connecting. This scheme offers much more flexibility: cluster capacity can be expanded at any time, the access point addresses of specific applications can be adjusted dynamically, and acceleration nodes become possible. However, each node is a single point, and SSL encryption is still done inside the node. Because Java's SSL implementation is CPU-intensive, a single node's service capacity suffers during sudden traffic spikes.

Hence the third scheme: Nginx serves as a Layer 7 proxy in front, with SSL and domain-name binding configured in Nginx, and a set of WebLinks can be used behind it at the same time. Using Nginx also makes port allocation more rational and simplifies operations. Finally, Yunxin arrived at the combined scheme currently in use: the front end still allocates access points to SDKs through the LBS service for flexibility, while the back end uses multiple Nginx clusters as proxies, improving the performance of each cluster group.

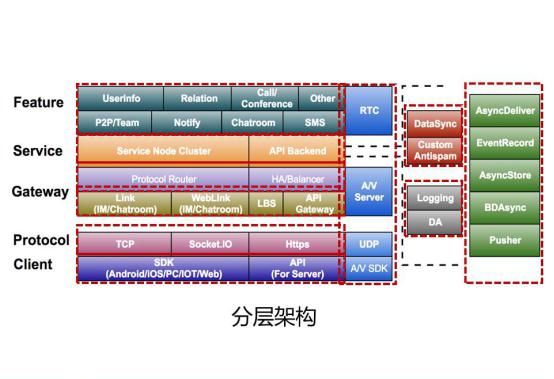

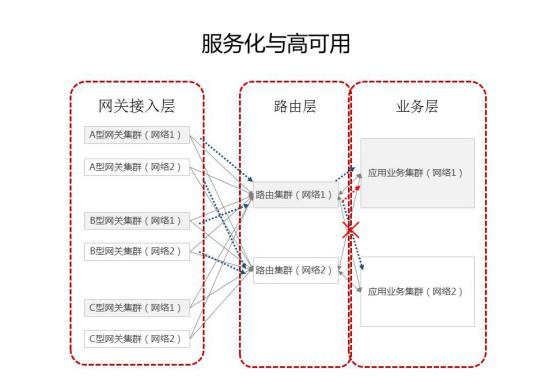

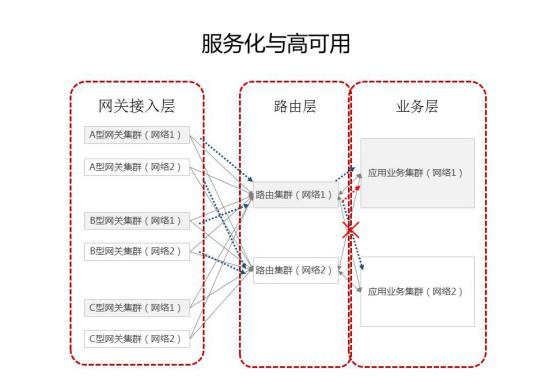

Service-oriented architecture and high availability practice for the instant messaging platform

The previous section covered the methods Yunxin uses to implement the client access layer and manage access points. These techniques establish a stable, reliable message channel for IM services. Now let's look at the service-oriented and high-availability work done in the business layer.


The gateway access layer maintains and manages client long connections. Access nodes can even be stateless peers that only forward requests between client and server and optimize forwarding efficiency; the real business logic still has to be implemented in the business layer.

The business layer handles a large volume of requests and interacts with DB, cache, queues, third-party interfaces, and other components; its stability, availability, and scalability directly affect the quality of the entire cloud service. To make the service layer more resilient, Yunxin introduces a routing layer between the gateway access layer and the service layer to decouple them. When a business node comes online, it registers itself with the service center; the routing nodes relay request packets from the gateway layer, select matching nodes from the registered service nodes, and distribute the requests to them. This three-tier architecture makes the whole system more resilient.
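The register-then-route flow can be sketched with a small in-memory router that also isolates nodes marked faulty, as described for the HA layer. The `Router` class, its method names, keying by service name, and round-robin selection are assumptions; the article does not describe Yunxin's service center or routing rules in detail.

```python
import itertools

class Router:
    """Hypothetical routing node backed by an in-memory service registry."""

    def __init__(self):
        self.registry = {}   # service name -> node addresses registered by business nodes
        self.faulty = set()  # nodes isolated after a detected failure
        self._turns = {}     # service name -> round-robin counter

    def register(self, service: str, node: str) -> None:
        # Called when a business node comes online; it is routable immediately.
        self.registry.setdefault(service, []).append(node)

    def mark_faulty(self, node: str) -> None:
        # Isolate the node so it can be taken offline and replaced.
        self.faulty.add(node)

    def route(self, service: str) -> str:
        healthy = [n for n in self.registry.get(service, []) if n not in self.faulty]
        if not healthy:
            raise LookupError(f"no healthy node for {service!r}")
        turn = self._turns.setdefault(service, itertools.count())
        return healthy[next(turn) % len(healthy)]

router = Router()
router.register("msg", "app-1")
router.register("msg", "app-2")
print([router.route("msg") for _ in range(4)])  # ['app-1', 'app-2', 'app-1', 'app-2']
router.mark_faulty("app-1")
print(router.route("msg"))                      # app-2
```

Because business nodes are stateless, any healthy node can take any request, which is what makes simple round-robin over the registry sufficient here.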

To improve service availability, Yunxin distributes service nodes across different network environments, which normally serve traffic simultaneously. If the network or infrastructure of one environment fails, the routing layer can quickly take the faulty cluster offline.
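Environment-level failover can be sketched as a dispatcher that spreads requests over machine rooms that are up and shifts everything to the survivors when one is taken offline. The CRC32-based split, the room names, and the 80% watermark in `needs_alarm` (echoing the capacity alarm mentioned for the business node cluster) are hypothetical choices, not figures from the article.

```python
import zlib

class DualRoomDispatcher:
    """Hypothetical dispatcher spreading requests over machine rooms that are up."""

    def __init__(self, rooms):
        self.rooms = list(rooms)
        self.down = set()

    def mark_down(self, room: str) -> None:
        # Environment-level failure: every node in this room goes offline at once.
        self.down.add(room)

    def pick_room(self, user_id: str) -> str:
        live = [r for r in self.rooms if r not in self.down]
        if not live:
            raise RuntimeError("no machine room available")
        # A stable hash splits users roughly evenly and keeps each user in one room.
        return live[zlib.crc32(user_id.encode()) % len(live)]

WATERMARK = 0.8  # hypothetical alarm threshold: 80% of capacity

def needs_alarm(current_load: float, capacity: float) -> bool:
    """True once processing load reaches the preset watermark."""
    return current_load / capacity >= WATERMARK
```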

Flexible support for grayscale upgrades: Yunxin can upgrade a subset of its business nodes and then direct specified user traffic to the newly upgraded nodes through routing-layer configuration.

Flexible support for dedicated services: for customers with strong demand for dedicated resources, Yunxin can route all traffic from the customer's application to an independent cluster through the routing layer.
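Both cases come down to routing rules consulted before the default pool. In this sketch the rule order (dedicated before grayscale), matching on appkey and user ID, and the cluster names are assumptions; the article does not show how Yunxin's routing configuration is expressed.

```python
from dataclasses import dataclass, field

@dataclass
class RoutingConfig:
    dedicated: dict = field(default_factory=dict)  # appkey -> dedicated cluster
    gray_users: set = field(default_factory=set)   # users sent to upgraded nodes
    gray_cluster: str = "app-gray"                 # hypothetical cluster names
    default_cluster: str = "app-main"

def choose_cluster(cfg: RoutingConfig, appkey: str, user_id: str) -> str:
    # Dedicated service: all traffic of this application goes to its own cluster.
    if appkey in cfg.dedicated:
        return cfg.dedicated[appkey]
    # Grayscale upgrade: only the specified users reach the upgraded nodes.
    if user_id in cfg.gray_users:
        return cfg.gray_cluster
    return cfg.default_cluster
```

Widening a grayscale rollout then means adding users to `gray_users` in the routing configuration, with no change to the business nodes themselves.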