K8S is currently the mainstream container orchestration system. It mainly solves the problem of managing containerized applications in a cluster environment, which covers the following aspects:

- Container cluster management
  - Orchestration
  - Scheduling
  - Access
- Infrastructure management
  - Computing resources
  - Network resources
  - Storage resources

The strength of k8s comes from its sound design concepts and abstractions. They attract more and more developers to the k8s community, and a growing number of companies run k8s as the foundation of their infrastructure.

In terms of design concepts: in k8s, only the APIServer communicates with etcd (the storage backend). Other components keep their state in memory and persist data through the APIServer. Management components are level-triggered rather than edge-triggered: they act on the difference between a resource's "current state" and "expected state", not on individual change events. K8s also uses a layered design built on abstract interfaces, so different plug-ins can meet different needs.
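To make "level-triggered" concrete, here is a minimal Go sketch of a single reconcile step. The `State` type and `reconcile` function are hypothetical illustrations, not k8s APIs. The actions depend only on the current and expected state, so a missed or duplicated change event does no harm as long as the loop runs again.

```go
package main

import "fmt"

// State is a hypothetical, minimal resource state: just a replica count.
type State struct {
	Replicas int
}

// reconcile is level-triggered: it compares the current state with the
// expected state and returns the actions needed to close the gap. It never
// looks at which event woke the controller up, so replaying it is harmless.
func reconcile(current, expected State) []string {
	var actions []string
	for i := current.Replicas; i < expected.Replicas; i++ {
		actions = append(actions, fmt.Sprintf("create replica %d", i))
	}
	for i := current.Replicas - 1; i >= expected.Replicas; i-- {
		actions = append(actions, fmt.Sprintf("delete replica %d", i))
	}
	return actions
}

func main() {
	expected := State{Replicas: 3}
	// However many scale events were missed, the latest observed state is
	// enough to compute the right actions.
	fmt.Println(reconcile(State{Replicas: 1}, expected))
	fmt.Println(reconcile(State{Replicas: 3}, expected)) // already converged: no actions
}
```

Because the function is idempotent, a controller can simply call it on every resync instead of tracking which events it has already seen.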

In terms of abstractions, different workload types serve different kinds of applications, for example Deployment for stateless applications and StatefulSet for stateful ones. For access management, a Service decouples service providers from consumers inside the cluster, and an Ingress manages access from outside the cluster to services inside it.

Although K8S has good design concepts and abstractions, its steep learning curve and incomplete development documentation make application development considerably harder.

Drawing on the author's development practice, this article uses MySQL on k8s as an example to describe how to develop highly reliable applications on k8s, abstracting the best practices as far as possible to lower the cost of building such applications.

MySQL on k8s

Application design and development must start from business requirements. The requirements for the MySQL application are as follows:

- High reliability of data

- High availability of services

- Easy to use

- Easy operation and maintenance

Meeting these requirements depends on k8s and the application working together. In other words, developing highly reliable applications on k8s requires knowledge of both k8s and the application domain.

The following sections analyze the solution for each of these requirements.

1. High reliability of data

Data reliability generally depends on two aspects:

- Redundancy
- Backup/recovery

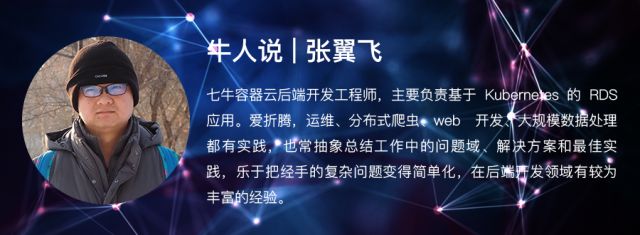

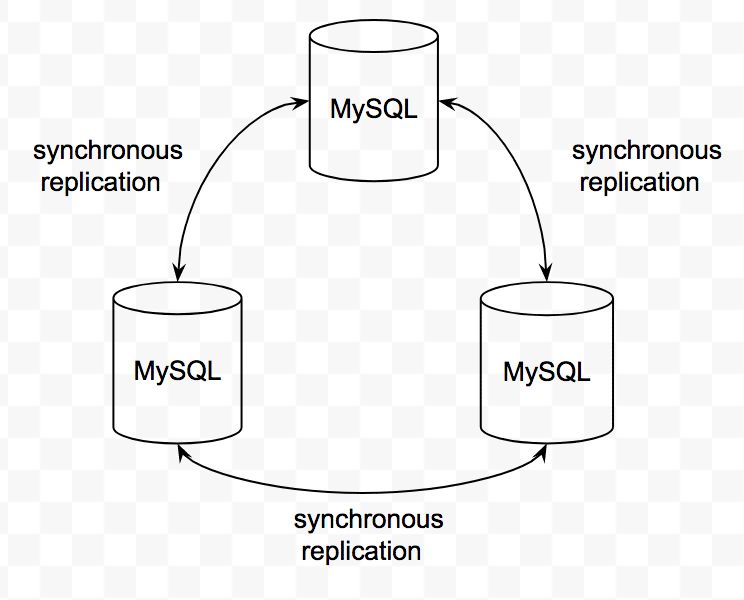

We use Percona XtraDB Cluster as the MySQL cluster solution. It is a multi-master MySQL architecture in which data is synchronized between instances in real time using Galera Replication. This avoids the data loss that master-slave clusters can suffer during a master-slave switchover, further improving data reliability.

For backup, we use xtrabackup as the backup/recovery tool. It performs hot backups, so users can keep accessing the cluster normally while a backup is running.

In addition to "scheduled backup", we also provide "manual backup" to meet business needs for on-demand backups.
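One way to let scheduled and manual backups coexist is sketched below in Go. It is an assumption, not the article's implementation: `runBackups` is a hypothetical helper, the tick channel would typically come from a `time.Ticker` (or a cron library), and the `backup` callback stands in for whatever launches xtrabackup. Serving both triggers from one loop keeps a cluster's backups from overlapping.

```go
package main

import (
	"fmt"
	"time"
)

// runBackups is a hypothetical per-cluster backup loop. Scheduled backups
// arrive on ticks, manual backups arrive as named requests, and both are
// handled by the same sequential loop, so two backups never run at once.
func runBackups[T any](ticks <-chan T, manual <-chan string, done <-chan struct{}, backup func(reason string)) {
	for {
		select {
		case <-ticks:
			backup("scheduled")
		case req := <-manual:
			backup("manual:" + req)
		case <-done:
			return
		}
	}
}

func main() {
	ticker := time.NewTicker(50 * time.Millisecond) // a real schedule would be hours or days
	defer ticker.Stop()
	manual := make(chan string)
	done := make(chan struct{})
	finished := make(chan struct{})

	go func() {
		runBackups(ticker.C, manual, done, func(reason string) {
			// A real worker would start an xtrabackup job here.
			fmt.Println("backup:", reason)
		})
		close(finished)
	}()

	manual <- "before-schema-change"   // user-triggered backup
	time.Sleep(120 * time.Millisecond) // let a couple of scheduled backups fire
	close(done)
	<-finished
}
```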

2. High availability of services

We analyze this from two perspectives: the "data link" and the "control link".

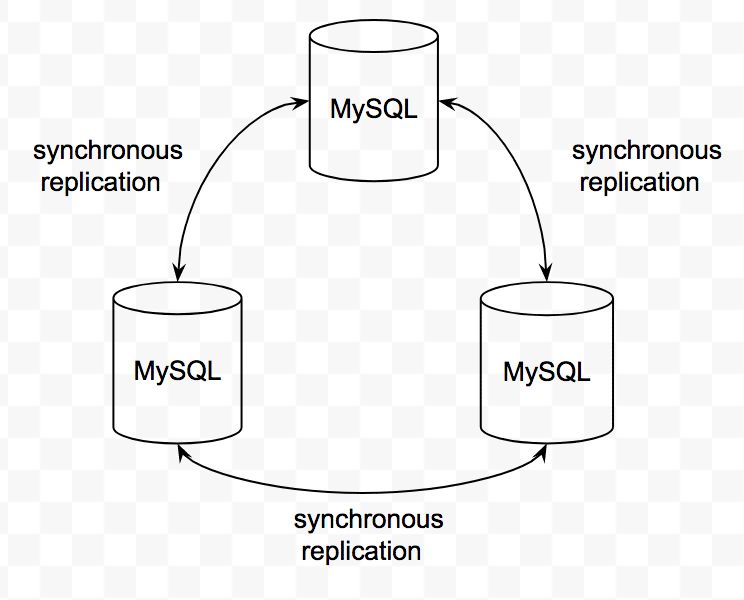

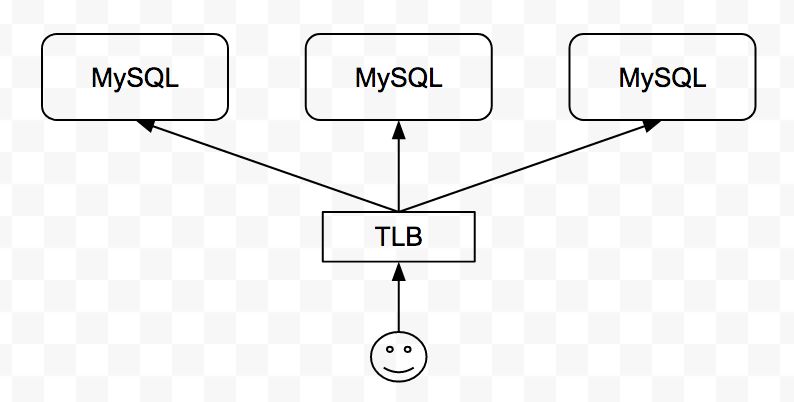

The "data link" is the path users take to access the MySQL service. We use a three-master MySQL cluster and expose it to users through TLB (a layer-4 load balancing service developed by Qiniu). Besides balancing traffic from the access layer across the MySQL instances, TLB also health-checks the service: it automatically removes abnormal nodes and adds them back once they recover. As shown below:

With this MySQL cluster scheme and TLB, the failure of one or two nodes does not affect users' normal access to the MySQL cluster, which ensures the high availability of the MySQL service.
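TLB is Qiniu's internal service and its interface is not described here. The Go sketch below only illustrates the general idea of a layer-4, health-checked backend pool; all names (`Pool`, `Refresh`, `Pick`) and addresses are hypothetical. `tcpCheck` shows a plain TCP connect probe, while the demo uses a fake checker to simulate a failed node.

```go
package main

import (
	"fmt"
	"net"
	"sync"
	"time"
)

// Checker reports whether a backend is healthy.
type Checker func(addr string) bool

// tcpCheck is a plain layer-4 probe: a backend counts as healthy if a TCP
// connection to it can be opened within 500ms.
func tcpCheck(addr string) bool {
	conn, err := net.DialTimeout("tcp", addr, 500*time.Millisecond)
	if err != nil {
		return false
	}
	conn.Close()
	return true
}

// Pool tracks which backends currently pass the health check.
type Pool struct {
	mu       sync.Mutex
	backends []string // fixed list of all backends
	healthy  map[string]bool
	check    Checker
	next     int // round-robin cursor
}

func NewPool(backends []string, check Checker) *Pool {
	return &Pool{backends: backends, healthy: make(map[string]bool), check: check}
}

// Refresh probes every backend: failing ones drop out of rotation and
// recovered ones rejoin it, without any manual step.
func (p *Pool) Refresh() {
	for _, b := range p.backends {
		ok := p.check(b) // probe outside the lock; it may block on the network
		p.mu.Lock()
		p.healthy[b] = ok
		p.mu.Unlock()
	}
}

// Pick returns the next healthy backend in round-robin order, or false if
// no backend is healthy.
func (p *Pool) Pick() (string, bool) {
	p.mu.Lock()
	defer p.mu.Unlock()
	n := len(p.backends)
	for i := 0; i < n; i++ {
		idx := (p.next + i) % n
		if p.healthy[p.backends[idx]] {
			p.next = (idx + 1) % n
			return p.backends[idx], true
		}
	}
	return "", false
}

func main() {
	nodes := []string{"mysql-0:3306", "mysql-1:3306", "mysql-2:3306"}
	up := map[string]bool{nodes[0]: true, nodes[1]: true, nodes[2]: true}
	// Fake checker for the demo; against real nodes you would pass tcpCheck.
	pool := NewPool(nodes, func(addr string) bool { return up[addr] })

	up[nodes[1]] = false // simulate a failed node
	pool.Refresh()
	for i := 0; i < 4; i++ {
		b, _ := pool.Pick()
		fmt.Println("route to", b)
	}
}
```

Unhealthy backends stay in the list but are skipped by `Pick`, so a node that recovers is routed to again after the next `Refresh`.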

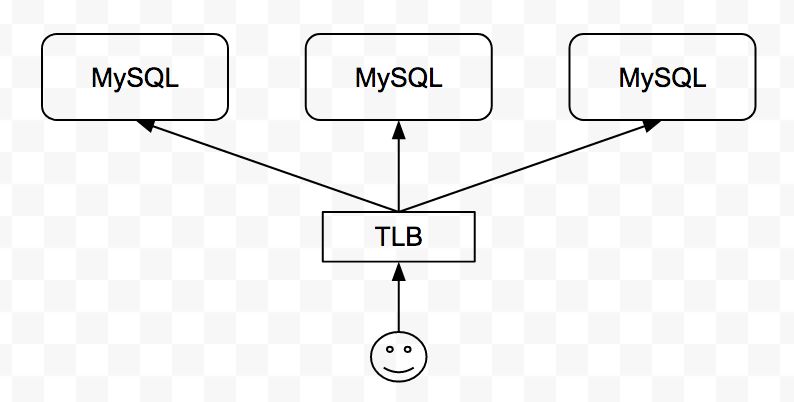

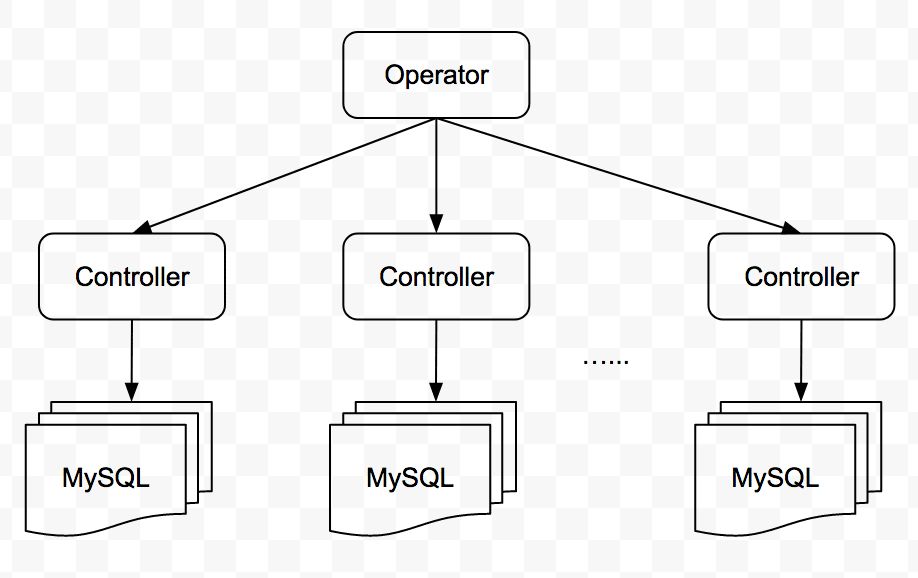

The "control link" is the management path of the MySQL clusters. It has two levels:

- Global control management
- Control management of each MySQL cluster

Global control management is mainly responsible for "creating/deleting clusters", "managing the status of all MySQL clusters", and so on, and is implemented using the Operator pattern. Each MySQL cluster has its own Controller, which is responsible for the cluster's "task scheduling", "health detection", "automatic fault handling", and so on.

This split delegates each cluster's management work to that cluster itself, which reduces interference between the control links of different clusters and relieves pressure on the global controller. As shown below:

Below is a brief introduction to the concept and implementation of an Operator.

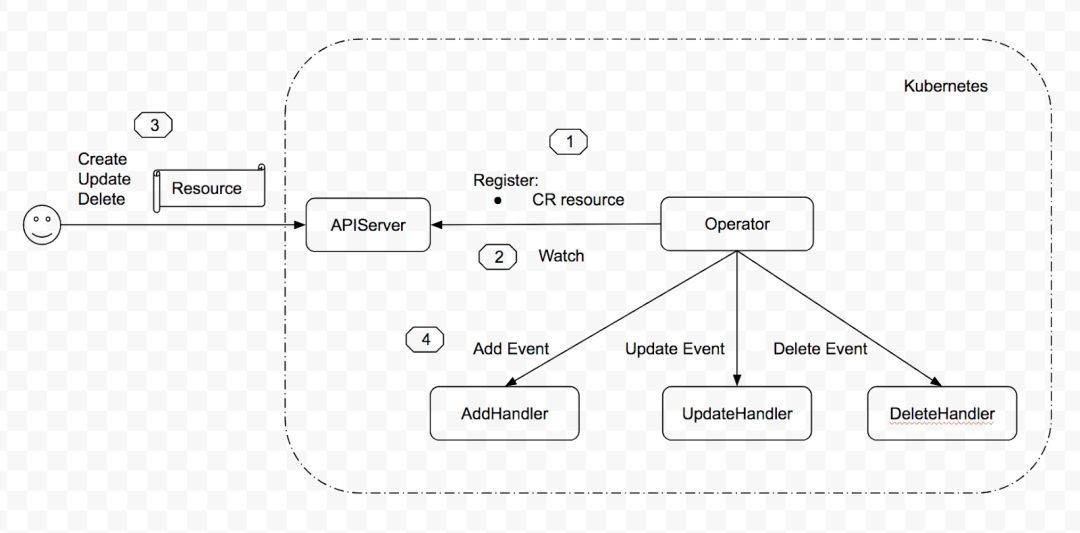

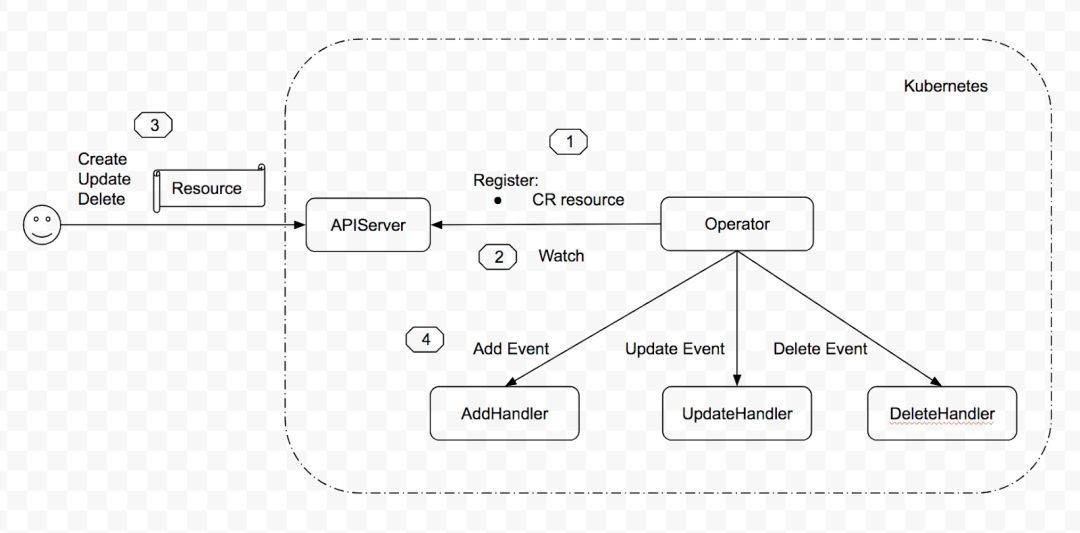

Operator is a concept proposed by CoreOS for creating, configuring, and managing complex applications. It consists of two parts:

- Resource
  - Custom resources
  - Give users a simple way to describe what they expect from a service
- Controller
  - Creates resources
  - Watches resource changes and acts on them to fulfill users' expectations of the service

The workflow is shown in the following figure:

That is:

- Register the CR (CustomResource) resource type

- Watch CR objects for changes

- The user performs CREATE/UPDATE/DELETE operations on the CR resource

- Trigger the corresponding handler for processing

Based on our practice, we abstracted Operator development as follows:

A CR is abstracted into the following structure:

Handling of CR ADD/UPDATE/DELETE events is abstracted into the following interface:
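As a hypothetical Go sketch (Go 1.18+ generics; the names are invented for illustration, not taken from Qiniu's framework) of what such abstractions can look like: a CR is modeled as a `Spec` describing the user's expectation plus a `Status` describing what was observed, and event handling as an `OnAdd`/`OnUpdate`/`OnDelete` interface. `Dispatch` stands in for the part a framework would run for every watched event.

```go
package main

import "fmt"

// Resource is a hypothetical generic shape for a custom resource.
type Resource[S, T any] struct {
	Name   string
	Spec   S // what the user expects
	Status T // what the operator has observed
}

// Handler is a hypothetical interface for CR ADD/UPDATE/DELETE events.
type Handler[S, T any] interface {
	OnAdd(obj *Resource[S, T]) error
	OnUpdate(oldObj, newObj *Resource[S, T]) error
	OnDelete(obj *Resource[S, T]) error
}

type EventType int

const (
	Added EventType = iota
	Updated
	Deleted
)

// Event carries New for adds, Old and New for updates, Old for deletes.
type Event[S, T any] struct {
	Type     EventType
	Old, New *Resource[S, T]
}

// Dispatch routes one event to the matching handler method.
func Dispatch[S, T any](h Handler[S, T], e Event[S, T]) error {
	switch e.Type {
	case Added:
		return h.OnAdd(e.New)
	case Updated:
		return h.OnUpdate(e.Old, e.New)
	case Deleted:
		return h.OnDelete(e.Old)
	default:
		return fmt.Errorf("unknown event type %d", e.Type)
	}
}

// Illustrative spec/status for a MySQL cluster.
type mysqlSpec struct{ Replicas int }
type mysqlStatus struct{ Phase string }
type mysqlCR = Resource[mysqlSpec, mysqlStatus]

// recorder is a toy Handler that records what it was asked to do.
type recorder struct{ log []string }

func (r *recorder) OnAdd(o *mysqlCR) error {
	r.log = append(r.log, fmt.Sprintf("create %s with %d replicas", o.Name, o.Spec.Replicas))
	return nil
}

func (r *recorder) OnUpdate(oldObj, newObj *mysqlCR) error {
	r.log = append(r.log, fmt.Sprintf("scale %s %d->%d", newObj.Name, oldObj.Spec.Replicas, newObj.Spec.Replicas))
	return nil
}

func (r *recorder) OnDelete(o *mysqlCR) error {
	r.log = append(r.log, "delete "+o.Name)
	return nil
}

func main() {
	r := &recorder{}
	v1 := &mysqlCR{Name: "demo", Spec: mysqlSpec{Replicas: 3}}
	v2 := &mysqlCR{Name: "demo", Spec: mysqlSpec{Replicas: 5}}
	events := []Event[mysqlSpec, mysqlStatus]{
		{Type: Added, New: v1},
		{Type: Updated, Old: v1, New: v2},
		{Type: Deleted, Old: v2},
	}
	for _, e := range events {
		if err := Dispatch[mysqlSpec, mysqlStatus](r, e); err != nil {
			fmt.Println("error:", err)
		}
	}
	fmt.Println(r.log)
}
```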

On top of these abstractions, Qiniu provides a simple Operator framework that transparently handles operations such as creating CRs and watching CR events, making Operator development easier.

We developed the MySQL Operator and the MySQL Data Operator, which are used respectively to create/delete clusters and to perform manual backup/recovery.

Each MySQL cluster runs several kinds of task logic, such as "data backup", "data recovery", "health detection", and "automatic fault handling". Running these concurrently can cause errors, so a task scheduler is needed to coordinate their execution. The Controller plays this role:

Through the Controller and its various workers, each MySQL cluster operates and maintains itself.
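A minimal way to coordinate these tasks is to funnel them through one queue per cluster and run them one at a time. The Go sketch below assumes strict serialization is acceptable; `Scheduler`, `Task`, and the task names are hypothetical, and a real scheduler might also give fault handling priority over backups.

```go
package main

import (
	"errors"
	"fmt"
)

// Task is a hypothetical unit of cluster work (backup, restore, failover...).
type Task struct {
	Name string
	Run  func() error
}

// Scheduler runs one cluster's tasks strictly one at a time, in submission
// order, so that e.g. a backup and an automatic failover never overlap.
type Scheduler struct {
	tasks chan Task
	done  chan struct{}
}

// NewScheduler starts a single worker goroutine; onResult is called after
// each task finishes.
func NewScheduler(queueSize int, onResult func(name string, err error)) *Scheduler {
	s := &Scheduler{tasks: make(chan Task, queueSize), done: make(chan struct{})}
	go func() {
		defer close(s.done)
		for t := range s.tasks {
			onResult(t.Name, t.Run())
		}
	}()
	return s
}

// Submit enqueues a task and blocks while the queue is full. It must not be
// called after Close.
func (s *Scheduler) Submit(t Task) { s.tasks <- t }

// Close stops accepting tasks and waits until queued tasks have run.
func (s *Scheduler) Close() {
	close(s.tasks)
	<-s.done
}

func main() {
	s := NewScheduler(8, func(name string, err error) {
		fmt.Printf("%s finished, err=%v\n", name, err)
	})
	s.Submit(Task{Name: "backup", Run: func() error { return nil }})
	s.Submit(Task{Name: "health-check", Run: func() error { return errors.New("mysql-2 unreachable") }})
	s.Submit(Task{Name: "failover", Run: func() error { return nil }})
	s.Close()
}
```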

For "health detection", we implemented two mechanisms:

- Passive detection: each MySQL instance reports its health status to the Controller.
- Active detection: the Controller requests the health status of each MySQL instance.

The two mechanisms complement each other, improving both the reliability and the timeliness of health detection.

Both the Controller and the Operator use the health detection data, as shown in the following figure:

The Controller uses the health detection data to discover exceptions in the MySQL cluster promptly and handle the corresponding faults, so it needs accurate and timely health information. It keeps the status of all MySQL instances in memory, updates each instance's status based on the results of "active detection" and "passive detection", and acts accordingly.
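The following Go sketch shows one hypothetical way to combine the two mechanisms in memory: instances push reports (passive detection), and the Controller probes only those whose last report is older than a staleness window (active detection). The `Tracker` type, the `probe` callback, and the staleness rule are assumptions made for illustration; timestamps are plain Unix seconds to keep it short.

```go
package main

import (
	"fmt"
	"sync"
)

// Health is the latest known state of one mysqld instance.
type Health struct {
	OK      bool
	Source  string // "passive" (pushed by the instance) or "active" (probed)
	Updated int64  // Unix seconds
}

// Tracker keeps the most recent health of every instance in memory.
type Tracker struct {
	mu     sync.Mutex
	status map[string]Health
	probe  func(instance string) bool // hypothetical active probe
	stale  int64                      // seconds without a report before probing
}

func NewTracker(probe func(string) bool, staleSeconds int64) *Tracker {
	return &Tracker{status: make(map[string]Health), probe: probe, stale: staleSeconds}
}

// Report records a status pushed by an instance (passive detection).
func (tr *Tracker) Report(instance string, ok bool, now int64) {
	tr.mu.Lock()
	defer tr.mu.Unlock()
	tr.status[instance] = Health{OK: ok, Source: "passive", Updated: now}
}

// ProbeStale actively probes every instance without a fresh report, e.g.
// because it crashed and can no longer report by itself.
func (tr *Tracker) ProbeStale(instances []string, now int64) {
	for _, inst := range instances {
		tr.mu.Lock()
		h, seen := tr.status[inst]
		tr.mu.Unlock()
		if seen && now-h.Updated <= tr.stale {
			continue // a fresh passive report is good enough
		}
		ok := tr.probe(inst) // probe outside the lock: it may be slow
		tr.mu.Lock()
		// Do not overwrite a report that arrived while we were probing.
		if cur, exists := tr.status[inst]; !exists || cur == h {
			tr.status[inst] = Health{OK: ok, Source: "active", Updated: now}
		}
		tr.mu.Unlock()
	}
}

// Get returns the latest known health of an instance.
func (tr *Tracker) Get(instance string) (Health, bool) {
	tr.mu.Lock()
	defer tr.mu.Unlock()
	h, ok := tr.status[instance]
	return h, ok
}

func main() {
	reachable := map[string]bool{"mysql-0": true, "mysql-1": true, "mysql-2": false}
	tr := NewTracker(func(inst string) bool { return reachable[inst] }, 5)
	nodes := []string{"mysql-0", "mysql-1", "mysql-2"}
	tr.Report("mysql-0", true, 100)
	tr.Report("mysql-1", true, 100)
	tr.ProbeStale(nodes, 102) // only mysql-2 (never reported) is probed
	tr.ProbeStale(nodes, 110) // all reports are stale now: everything is probed
	for _, n := range nodes {
		h, _ := tr.Get(n)
		fmt.Printf("%s ok=%v via %s\n", n, h.OK, h.Source)
	}
}
```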

The Operator uses the health detection data to expose the running state of the MySQL cluster externally, and to intervene in fault handling when the Controller itself is malfunctioning.

In practice, health detection runs frequently and produces a large number of health states. Persisting every one of them would put heavy load on the Operator and the APIServer. Since only the most recent health states are meaningful, the Controller puts the states to be reported to the Operator into a fixed-capacity queue; when the queue is full, the oldest states are discarded.
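A fixed-capacity queue that evicts its oldest entry when full is essentially a ring buffer. Here is a hypothetical Go sketch (Go 1.18+ generics; `StatusQueue` and its methods are illustrative names, not from the article):

```go
package main

import (
	"fmt"
	"sync"
)

// StatusQueue is a fixed-capacity FIFO (a ring buffer). When it is full,
// Push overwrites the oldest entry, because only recent health states matter.
type StatusQueue[T any] struct {
	mu      sync.Mutex
	buf     []T
	start   int // index of the oldest entry
	size    int
	dropped int
}

func NewStatusQueue[T any](capacity int) *StatusQueue[T] {
	if capacity < 1 {
		panic("capacity must be at least 1")
	}
	return &StatusQueue[T]{buf: make([]T, capacity)}
}

// Push adds a status, discarding the oldest one if the queue is full.
func (q *StatusQueue[T]) Push(v T) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.size == len(q.buf) {
		q.buf[q.start] = v // the oldest slot now holds the newest entry
		q.start = (q.start + 1) % len(q.buf)
		q.dropped++
		return
	}
	q.buf[(q.start+q.size)%len(q.buf)] = v
	q.size++
}

// Drain removes and returns all queued statuses, oldest first.
func (q *StatusQueue[T]) Drain() []T {
	q.mu.Lock()
	defer q.mu.Unlock()
	out := make([]T, 0, q.size)
	for i := 0; i < q.size; i++ {
		out = append(out, q.buf[(q.start+i)%len(q.buf)])
	}
	q.start, q.size = 0, 0
	return out
}

// Dropped reports how many statuses have been discarded so far.
func (q *StatusQueue[T]) Dropped() int {
	q.mu.Lock()
	defer q.mu.Unlock()
	return q.dropped
}

func main() {
	q := NewStatusQueue[string](3)
	for i := 1; i <= 5; i++ {
		q.Push(fmt.Sprintf("health#%d", i))
	}
	fmt.Println(q.Drain(), "dropped:", q.Dropped())
}
```

A reporter goroutine could periodically call `Drain` and send the batch to the Operator; with capacity 3 and five pushes, only the last three statuses survive.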

When the Controller detects an exception in the MySQL cluster, it will automatically handle the failure.

First, we defined the fault handling principles:

- No data loss
- Minimal impact on availability
- Known, treatable faults are handled automatically
- Unknown or untreatable faults are not handled automatically; they require manual intervention

Fault handling raises these key questions:

- What types of faults are there
- How to detect and sense faults in a timely manner
- Whether a fault is currently occurring
- What type of fault has occurred
- How to handle it

To address these questions, we defined three levels of cluster status:

- Green
  - External service available
  - Number of running nodes meets expectations
- Yellow
  - External service available
  - Number of running nodes does not meet expectations
- Red
  - External service unavailable

At the same time, the following statuses are defined for each mysqld node:

- Green
  - The node is running
  - The node is in the MySQL cluster
- Yellow
  - The node is running
  - The node is not in the MySQL cluster
- Red clean
  - The node exited gracefully
- Red unclean
  - The node exited ungracefully
- Unknown
  - The node status is unknown

After collecting the status of all MySQL nodes, the Controller computes the MySQL cluster status from them. When the cluster status is not Green, the "fault handling" logic is triggered and the fault is processed according to the known handling schemes; if the fault type is unknown, it is left for manual handling. The whole process is as follows:

Because fault scenarios and handling schemes differ from application to application, the specific handling methods are not described here.
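While the concrete fault handlers are application-specific, the surrounding flow can be sketched generically. In this hypothetical Go sketch, `clusterStatus` derives the cluster level from node statuses (assuming, for simplicity, that the service stays available while at least one node is Green; a Galera-based cluster may actually need a quorum), and `decide` runs a registered handler for a known fault type and escalates everything else to manual handling. Fault detection and classification are left out, and the fault-type names are invented.

```go
package main

import "fmt"

// NodeStatus values mirror the node statuses listed above (names illustrative).
type NodeStatus string

const (
	NodeGreen      NodeStatus = "Green"      // running and in the MySQL cluster
	NodeYellow     NodeStatus = "Yellow"     // running but not in the MySQL cluster
	NodeRedClean   NodeStatus = "RedClean"   // exited gracefully
	NodeRedUnclean NodeStatus = "RedUnclean" // exited ungracefully
	NodeUnknown    NodeStatus = "Unknown"    // status cannot be determined
)

type ClusterStatus string

const (
	ClusterGreen  ClusterStatus = "Green"  // available, node count as expected
	ClusterYellow ClusterStatus = "Yellow" // available, node count below expectation
	ClusterRed    ClusterStatus = "Red"    // unavailable
)

// clusterStatus assumes the service is available while at least one node
// is Green; this availability rule is an assumption of the sketch.
func clusterStatus(nodes map[string]NodeStatus, expected int) ClusterStatus {
	green := 0
	for _, s := range nodes {
		if s == NodeGreen {
			green++
		}
	}
	switch {
	case green == 0:
		return ClusterRed
	case green < expected:
		return ClusterYellow
	default:
		return ClusterGreen
	}
}

// decide returns what the Controller would do: nothing when the cluster is
// Green, run the registered handler for a known fault type, and otherwise
// (unknown fault, or the handler failed) escalate to manual handling.
func decide(status ClusterStatus, faultType string, known map[string]func() error) string {
	if status == ClusterGreen {
		return "none"
	}
	handle, ok := known[faultType]
	if !ok {
		return "manual"
	}
	if err := handle(); err != nil {
		return "manual"
	}
	return "auto:" + faultType
}

func main() {
	nodes := map[string]NodeStatus{"mysql-0": NodeGreen, "mysql-1": NodeGreen, "mysql-2": NodeRedClean}
	st := clusterStatus(nodes, 3)
	known := map[string]func() error{
		// Hypothetical fault type: a node exited cleanly and only needs a restart.
		"node-exited-clean": func() error { return nil },
	}
	fmt.Println(st, decide(st, "node-exited-clean", known))
	fmt.Println(decide(ClusterRed, "split-brain", known))
}
```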

3. Easy to use

Based on the Operator pattern, we implemented a highly reliable MySQL service and defined two resource types for users: QiniuMySQL and QiniuMySQL Data. The former describes the user's configuration of a MySQL cluster, and the latter describes a manual data backup/restore task. Here we take QiniuMySQL as an example.

Users can trigger the creation of a MySQL cluster with the following simple YAML file:

After the cluster is created, the user can obtain the cluster status through the status field of the CR object:
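The Go sketch below uses a hypothetical field layout (`replicas`, `storageSize`, `backupCron`, `clusterStatus`), not the real QiniuMySQL schema, and a placeholder API group `example.com/v1`. It builds a create request as JSON, which `kubectl apply -f` accepts just like YAML, and reads the cluster status back from a CR object's `status` field.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// QiniuMySQL is a hypothetical layout; field names are illustrative only.
type QiniuMySQL struct {
	APIVersion string            `json:"apiVersion"`
	Kind       string            `json:"kind"`
	Metadata   Metadata          `json:"metadata"`
	Spec       QiniuMySQLSpec    `json:"spec"`
	Status     *QiniuMySQLStatus `json:"status,omitempty"`
}

type Metadata struct {
	Name string `json:"name"`
}

// QiniuMySQLSpec is what the user asks for.
type QiniuMySQLSpec struct {
	Replicas    int    `json:"replicas"`
	StorageSize string `json:"storageSize"`
	BackupCron  string `json:"backupCron,omitempty"` // schedule for automatic backups
}

// QiniuMySQLStatus is filled in by the operator, not by the user.
type QiniuMySQLStatus struct {
	ClusterStatus string            `json:"clusterStatus"` // Green / Yellow / Red
	Nodes         map[string]string `json:"nodes,omitempty"`
}

// buildManifest renders a create request as indented JSON.
func buildManifest(name string, spec QiniuMySQLSpec) ([]byte, error) {
	return json.MarshalIndent(QiniuMySQL{
		APIVersion: "example.com/v1", // placeholder API group/version
		Kind:       "QiniuMySQL",
		Metadata:   Metadata{Name: name},
		Spec:       spec,
	}, "", "  ")
}

func parseManifest(raw []byte) (QiniuMySQL, error) {
	var obj QiniuMySQL
	err := json.Unmarshal(raw, &obj)
	return obj, err
}

// clusterStatusOf returns "" while the operator has not reported a status yet.
func clusterStatusOf(raw []byte) (string, error) {
	obj, err := parseManifest(raw)
	if err != nil || obj.Status == nil {
		return "", err
	}
	return obj.Status.ClusterStatus, nil
}

func main() {
	manifest, err := buildManifest("demo", QiniuMySQLSpec{Replicas: 3, StorageSize: "100Gi", BackupCron: "0 3 * * *"})
	if err != nil {
		panic(err)
	}
	fmt.Println(string(manifest)) // could be saved to a file and passed to kubectl apply -f

	// A CR object as it might look after the operator has updated its status.
	observed := []byte(`{"apiVersion":"example.com/v1","kind":"QiniuMySQL",
		"metadata":{"name":"demo"},"spec":{"replicas":3,"storageSize":"100Gi"},
		"status":{"clusterStatus":"Green","nodes":{"demo-0":"Green","demo-1":"Green","demo-2":"Green"}}}`)
	status, err := clusterStatusOf(observed)
	if err != nil {
		panic(err)
	}
	fmt.Println("cluster status:", status)
}
```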

Here is another concept: Helm.

Helm is a package management tool for k8s. It standardizes the delivery, deployment and use process of k8s applications by packaging them as Charts.

A Chart is essentially a collection of k8s YAML files and parameter files, so an application can be delivered as a single Chart. By operating on Charts, Helm can deploy and upgrade applications with one click.

Given space constraints, and since Helm usage is generic, the specific steps are not described here.

4. Easy operation and maintenance

Besides the "health detection" and "automatic fault handling" described above, and using Helm to manage application delivery and deployment, operation and maintenance must also consider:

- Monitoring/alarms
- Log management

We use Prometheus + Grafana for monitoring and alarms. Each service exposes its metrics over an HTTP API, and the Prometheus server scrapes them periodically. Developers visualize the Prometheus data in Grafana and, based on their understanding of the charts and of the application, set alarm thresholds on the charts; Grafana then fires the alarms.

Visualizing metrics first and alarming second has greatly improved our understanding of how the application behaves at runtime, clarified which metrics and alarm thresholds deserve attention, and reduced the number of useless alarms.
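In Go services, the usual way to expose metrics is the official `github.com/prometheus/client_golang` library. To show what the Prometheus server actually scrapes without external dependencies, this standard-library sketch writes one counter in the Prometheus text exposition format by hand; the metric name, the `/work` endpoint, and port 8080 are hypothetical.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"sync/atomic"
)

// requestsTotal is a hypothetical counter incremented by request handlers.
var requestsTotal int64

func recordRequest() { atomic.AddInt64(&requestsTotal, 1) }

// renderMetrics formats the counter in the Prometheus text exposition
// format: HELP and TYPE comment lines followed by "name value".
func renderMetrics() string {
	return fmt.Sprintf("# HELP mysql_controller_requests_total Requests handled by the controller.\n"+
		"# TYPE mysql_controller_requests_total counter\n"+
		"mysql_controller_requests_total %d\n", atomic.LoadInt64(&requestsTotal))
}

func main() {
	http.HandleFunc("/work", func(w http.ResponseWriter, r *http.Request) {
		recordRequest()
		fmt.Fprintln(w, "ok")
	})
	// The Prometheus server is configured to scrape this endpoint periodically.
	http.HandleFunc("/metrics", func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "text/plain; version=0.0.4")
		fmt.Fprint(w, renderMetrics())
	})
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```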

In development, our services communicate with each other over gRPC. The gRPC ecosystem includes an open source project called go-grpc-prometheus: by adding a few lines of code to a service, you can monitor all RPC requests handled by the gRPC server.

For container services, log management includes two dimensions: log collection and log rolling.

We write service logs to syslog, then forward the syslog output to the container's stdout/stderr by some means, so that logs can be collected externally in the conventional way. We also configure logrotate for the syslog files so that logs are rolled automatically, preventing service failures caused by logs filling up the container's disk.

To improve development efficiency, we use https://github.com/phusion/baseimage-docker as the base image. It has built-in syslog and logrotate services, so applications only need to write logs to syslog and do not have to deal with log collection or log rolling.
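With such a base image, a Go service can write to the local syslog daemon through the standard library's `log/syslog` package (available on Unix-like systems only). This is a minimal sketch: the tag `mysql-controller` is hypothetical, and the fallback to stderr when no syslog daemon is reachable is an added assumption.

```go
package main

import (
	"log"
	"log/syslog"
	"os"
)

// newLogger writes to the local syslog daemon when one is reachable and
// falls back to stderr otherwise (e.g. when running outside the container).
func newLogger(tag string) *log.Logger {
	w, err := syslog.New(syslog.LOG_INFO|syslog.LOG_DAEMON, tag)
	if err != nil {
		return log.New(os.Stderr, tag+": ", log.LstdFlags)
	}
	// syslog adds its own timestamp and tag, so no extra prefix or flags.
	return log.New(w, "", 0)
}

func main() {
	logger := newLogger("mysql-controller")
	logger.Println("controller started")
}
```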

Summary

Putting everything above together, the complete MySQL application architecture is as follows:

While developing highly reliable MySQL applications on k8s, as our understanding of k8s and MySQL deepened, we kept abstracting and gradually turned the following general logic and best practices into modules:

- Operator development framework
- Health detection service
- Automatic fault handling service
- Task scheduling service
- Configuration management service
- Monitoring service
- Log service
- etc.

With this general logic and these best practices modularized, developers building new highly reliable applications on k8s can quickly assemble the k8s-related interactions like building blocks. Because such applications apply best practices from the start, they are highly reliable from day one. At the same time, developers can shift their attention from the steep K8S learning curve to the application domain and improve service reliability from within the application itself.

Niuren said

The Niurenshuo column is dedicated to uncovering the thinking of technologists, including technical practice, hands-on technical content, technical insights, growth experience, and any other technical content worth discovering. We hope to bring together the best technologists to surface unique, sharp, and timely voices.