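import java.io.IOException;

import org.apache.jute.InputArchive;
import org.apache.jute.OutputArchive;
import org.apache.jute.Record;

public class TestBean implements Record {
    private int intV;
    private String stringV;

    public TestBean() {
    }

    public TestBean(int intV, String stringV) {
        this.intV = intV;
        this.stringV = stringV;
    }

    // Getters used by BinaryTest1; the setters are omitted.
    public int getIntV() {
        return intV;
    }

    public String getStringV() {
        return stringV;
    }

    // Fields must be read in the same order in which serialize() writes them.
    @Override
    public void deserialize(InputArchive archive, String tag) throws IOException {
        archive.startRecord(tag);
        this.intV = archive.readInt("intV");
        this.stringV = archive.readString("stringV");
        archive.endRecord(tag);
    }

    @Override
    public void serialize(OutputArchive archive, String tag) throws IOException {
        archive.startRecord(this, tag);
        archive.writeInt(intV, "intV");
        archive.writeString(stringV, "stringV");
        archive.endRecord(this, tag);
    }
}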

import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;

import org.apache.jute.BinaryInputArchive;
import org.apache.jute.BinaryOutputArchive;

public class BinaryTest1 {
    public static void main(String[] args) throws IOException {
        // Serialize a TestBean into an in-memory byte array.
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        BinaryOutputArchive boa = BinaryOutputArchive.getArchive(baos);
        new TestBean(1, "testbean1").serialize(boa, "tag1");
        byte[] array = baos.toByteArray();

        // Deserialize the bytes into a fresh TestBean.
        ByteArrayInputStream bais = new ByteArrayInputStream(array);
        BinaryInputArchive bia = BinaryInputArchive.getArchive(bais);
        TestBean newBean1 = new TestBean();
        newBean1.deserialize(bia, "tag1");

        System.out.println("intV = " + newBean1.getIntV()
                + ",stringV = " + newBean1.getStringV());

        bais.close();
        baos.close();
    }
}

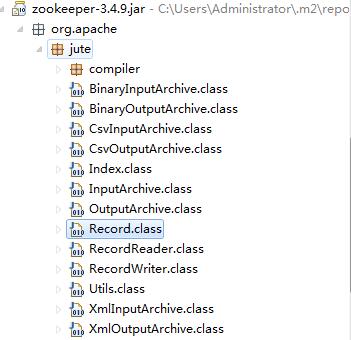

public interface Record {
    public void serialize(OutputArchive archive, String tag) throws IOException;

    public void deserialize(InputArchive archive, String tag) throws IOException;
}

public interface OutputArchive {
    public void writeByte(byte b, String tag) throws IOException;
    public void writeBool(boolean b, String tag) throws IOException;
    public void writeInt(int i, String tag) throws IOException;
    public void writeLong(long l, String tag) throws IOException;
    public void writeFloat(float f, String tag) throws IOException;
    public void writeDouble(double d, String tag) throws IOException;
    public void writeString(String s, String tag) throws IOException;
    public void writeBuffer(byte buf[], String tag) throws IOException;
    public void writeRecord(Record r, String tag) throws IOException;
    public void startRecord(Record r, String tag) throws IOException;
    public void endRecord(Record r, String tag) throws IOException;
    public void startVector(List v, String tag) throws IOException;
    public void endVector(List v, String tag) throws IOException;
    public void startMap(TreeMap v, String tag) throws IOException;
    public void endMap(TreeMap v, String tag) throws IOException;
}

private ByteBuffer bb = ByteBuffer.allocate(1024);
private DataOutput out;

public static BinaryOutputArchive getArchive(OutputStream strm) {
    return new BinaryOutputArchive(new DataOutputStream(strm));
}

/** Creates a new instance of BinaryOutputArchive */
public BinaryOutputArchive(DataOutput out) {
    this.out = out;
}

public void writeByte(byte b, String tag) throws IOException {
    out.writeByte(b);
}

// Other types of serialization are omitted. You can check the source code yourself.
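To make the byte layout concrete, here is a minimal sketch that mimics what the binary archive appears to do for an `int` and a `String`, using only `java.io`. It assumes, based on the excerpt above, that each write is delegated to `DataOutput`, and additionally assumes that strings are written as a 4-byte length followed by their UTF-8 bytes, with `-1` standing in for `null`. The class `JuteWireSketch` and its helpers are hypothetical names for illustration and are not part of Jute.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class JuteWireSketch {
    // Hypothetical helper: a big-endian 4-byte int, as DataOutput.writeInt produces.
    static void writeInt(DataOutputStream out, int i) throws IOException {
        out.writeInt(i);
    }

    // Hypothetical helper (assumed layout): 4-byte length, then UTF-8 bytes; -1 marks null.
    static void writeString(DataOutputStream out, String s) throws IOException {
        if (s == null) {
            out.writeInt(-1);
            return;
        }
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        out.writeInt(utf8.length);
        out.write(utf8);
    }

    // Encodes the two TestBean fields in declaration order; the tag is not written.
    static byte[] encode(int intV, String stringV) throws IOException {
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(baos);
        writeInt(out, intV);
        writeString(out, stringV);
        out.flush();
        return baos.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] bytes = encode(1, "testbean1");
        // 4 bytes (int) + 4 bytes (length prefix) + 9 bytes (UTF-8 text)
        System.out.println("encoded length = " + bytes.length); // prints: encoded length = 17
    }
}
```

Under these assumptions no field names or tags reach the stream, which is why the reader has to consume the fields in exactly the order the writer produced them.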

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map.Entry;
import java.util.TreeMap;

import org.apache.jute.Index;
import org.apache.jute.InputArchive;
import org.apache.jute.OutputArchive;
import org.apache.jute.Record;

public class TestBeanAll implements Record {
    private byte byteV;
    private boolean booleanV;
    private int intV;
    private long longV;
    private float floatV;
    private double doubleV;
    private String stringV;
    private byte[] bytesV;
    private Record recodeV;
    private List<Integer> listV;
    private TreeMap<Integer, String> mapV;

    @Override
    public void deserialize(InputArchive archive, String tag) throws IOException {
        archive.startRecord(tag);
        this.byteV = archive.readByte("byteV");
        this.booleanV = archive.readBool("booleanV");
        this.intV = archive.readInt("intV");
        this.longV = archive.readLong("longV");
        this.floatV = archive.readFloat("floatV");
        this.doubleV = archive.readDouble("doubleV");
        this.stringV = archive.readString("stringV");
        this.bytesV = archive.readBuffer("bytes");
        // readRecord fills an existing instance, so create one first
        // (assumes the nested record is a TestBean, as in the Jute definition).
        if (recodeV == null) {
            recodeV = new TestBean();
        }
        archive.readRecord(recodeV, "recodeV");

        // list
        Index vidx1 = archive.startVector("listV");
        if (vidx1 != null) {
            listV = new ArrayList<>();
            for (; !vidx1.done(); vidx1.incr()) {
                listV.add(archive.readInt("listInt"));
            }
        }
        archive.endVector("listV");

        // map
        Index midx1 = archive.startMap("mapV");
        mapV = new TreeMap<>();
        for (; !midx1.done(); midx1.incr()) {
            Integer k1 = archive.readInt("k1");
            String v1 = archive.readString("v1");
            mapV.put(k1, v1);
        }
        archive.endMap("mapV");
        archive.endRecord(tag);
    }

    @Override
    public void serialize(OutputArchive archive, String tag) throws IOException {
        archive.startRecord(this, tag);
        archive.writeByte(byteV, "byteV");
        archive.writeBool(booleanV, "booleanV");
        archive.writeInt(intV, "intV");
        archive.writeLong(longV, "longV");
        archive.writeFloat(floatV, "floatV");
        archive.writeDouble(doubleV, "doubleV");
        archive.writeString(stringV, "stringV");
        archive.writeBuffer(bytesV, "bytes");
        archive.writeRecord(recodeV, "recodeV");

        // list
        archive.startVector(listV, "listV");
        if (listV != null) {
            int len1 = listV.size();
            for (int vidx1 = 0; vidx1 < len1; vidx1++) {
                archive.writeInt(listV.get(vidx1), "listInt");
            }
        }
        archive.endVector(listV, "listV");

        // map
        archive.startMap(mapV, "mapV");
        for (Entry<Integer, String> me1 : mapV.entrySet()) {
            archive.writeInt(me1.getKey(), "k1");
            archive.writeString(me1.getValue(), "v1");
        }
        archive.endMap(mapV, "mapV");
        archive.endRecord(this, tag);
    }
}
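The `startVector`/`endVector` pair above suggests a count-prefixed layout for collections. The following is a minimal sketch of that idea using only `java.io`, under the assumption that the binary archive writes the element count as a 4-byte int (and `-1` for a `null` list) before the elements. `JuteCollectionSketch`, `encodeIntList` and `decodeIntList` are hypothetical names, not Jute APIs.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

public class JuteCollectionSketch {
    // Assumed layout: 4-byte element count (-1 for null), then each int element.
    static byte[] encodeIntList(List<Integer> list) throws IOException {
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(baos);
        if (list == null) {
            out.writeInt(-1);
        } else {
            out.writeInt(list.size());
            for (int v : list) {
                out.writeInt(v);
            }
        }
        out.flush();
        return baos.toByteArray();
    }

    // Mirrors the loop in deserialize(): read the count, then that many elements.
    static List<Integer> decodeIntList(byte[] data) throws IOException {
        DataInputStream in = new DataInputStream(new ByteArrayInputStream(data));
        int len = in.readInt();
        if (len == -1) {
            return null; // a null list was written
        }
        List<Integer> list = new ArrayList<>(len);
        for (int i = 0; i < len; i++) {
            list.add(in.readInt());
        }
        return list;
    }

    public static void main(String[] args) throws IOException {
        List<Integer> original = new ArrayList<>();
        original.add(1);
        original.add(2);
        original.add(3);
        byte[] data = encodeIntList(original);
        System.out.println(data.length + " bytes -> " + decodeIntList(data));
    }
}
```

The `null` check on the value returned by `startVector` in `deserialize` corresponds to the `-1` case here. Maps are handled the same way in this picture: a count, followed by alternating keys and values.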

module test {
    class TestBean {
        int intV;
        ustring stringV;
    }
    class TestBeanAll {
        byte byteV;
        boolean booleanV;
        int intV;
        long longV;
        float floatV;
        double doubleV;
        ustring stringV;
        buffer bytes;
        test.TestBean record;
        vector<int> listV;
        map<int,ustring> mapV;
    }
}

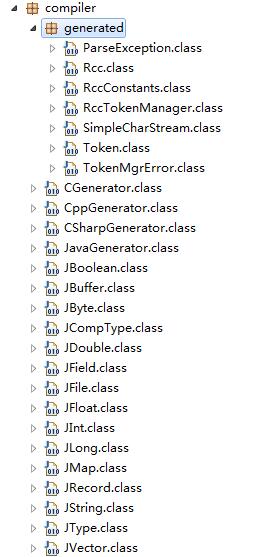

public static void main(String args[]) {
    String language = "java";
    ArrayList recFiles = new ArrayList();
    JFile curFile = null;

    for (int i = 0; i < args.length; i++) {
        if ("-l".equalsIgnoreCase(args[i]) ||
                "--language".equalsIgnoreCase(args[i])) {
            language = args[i + 1].toLowerCase();
            i++;
        } else {
            recFiles.add(args[i]);
        }
    }
    if (!"c++".equals(language) && !"java".equals(language) && !"c".equals(language)) {
        System.out.println("Cannot recognize language:" + language);
        System.exit(1);
    }
    // The following is omitted
}

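import org.apache.jute.compiler.generated.Rcc;

public class ParseTest {
    public static void main(String[] args) {
        // Equivalent to running the compiler with: -l java test.jute
        String[] params = new String[3];
        params[0] = "-l";
        params[1] = "java";
        params[2] = "test.jute";
        Rcc.main(params);
    }
}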

syntax = "proto3";

option java_package = "protobuf.clazz";
option java_outer_classname = "GoodsPicInfo";

message PicInfo {
    int32 ID = 1;
    int64 GoodID = 2;
    string Url = 3;
    string Guid = 4;
    string Type = 5;
    int32 Order = 6;
}

module test {
    class PicInfo {
        int ID;
        long GoodID;
        ustring Url;
        ustring Guid;
        ustring Type;
        int Order;
    }
}

import com.google.protobuf.InvalidProtocolBufferException;
import com.google.protobuf.MessageLite;

import protobuf.clazz.GoodsPicInfo;
import protobuf.clazz.GoodsPicInfo.PicInfo;

public class Protobuf_Test {
    public static void main(String[] args) throws InvalidProtocolBufferException {
        long startTime = System.currentTimeMillis();
        byte[] result = null;

        // Serialize the same message 50,000 times.
        for (int i = 0; i < 50000; i++) {
            GoodsPicInfo.PicInfo.Builder builder = GoodsPicInfo.PicInfo.newBuilder();
            builder.setGoodID(100);
            builder.setGuid("11111-22222-3333-444");
            builder.setOrder(0);
            builder.setType("ITEM");
            builder.setID(10);
            builder.setUrl(" http://xxx.jpg ");
            GoodsPicInfo.PicInfo info = builder.build();
            result = info.toByteArray();
        }
        long endTime = System.currentTimeMillis();
        System.out.println("Byte size:" + result.length
                + ", serialization time:" + (endTime - startTime) + "ms");

        // Deserialize the last result 50,000 times.
        for (int i = 0; i < 50000; i++) {
            GoodsPicInfo.PicInfo newBean = GoodsPicInfo.PicInfo.getDefaultInstance();
            MessageLite prototype = newBean.getDefaultInstanceForType();
            newBean = (PicInfo) prototype.newBuilderForType().mergeFrom(result).build();
        }
        long endTime2 = System.currentTimeMillis();
        System.out.println("Deserialization takes time:" + (endTime2 - endTime) + "ms");
    }
}

import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;

import org.apache.jute.BinaryInputArchive;
import org.apache.jute.BinaryOutputArchive;

// PicInfo is the class generated from the Jute definition above.

public class Jute_test {
    public static void main(String[] args) throws IOException {
        long startTime = System.currentTimeMillis();
        byte[] array = null;

        // Serialize the same record 50,000 times.
        for (int i = 0; i < 50000; i++) {
            ByteArrayOutputStream baos = new ByteArrayOutputStream();
            BinaryOutputArchive boa = BinaryOutputArchive.getArchive(baos);
            new PicInfo(10, 100, " http://xxx.jpg ", "11111-22222-3333-444", "ITEM", 0)
                    .serialize(boa, "tag" + i);
            array = baos.toByteArray();
        }
        long endTime = System.currentTimeMillis();
        System.out.println("Byte size:" + array.length
                + ", serialization time:" + (endTime - startTime) + "ms");

        // Deserialize the last result 50,000 times.
        for (int i = 0; i < 50000; i++) {
            ByteArrayInputStream bais = new ByteArrayInputStream(array);
            BinaryInputArchive bia = BinaryInputArchive.getArchive(bais);
            PicInfo newBean = new PicInfo();
            newBean.deserialize(bia, "tag1");
        }
        long endTime2 = System.currentTimeMillis();
        System.out.println("Deserialization takes time:" + (endTime2 - endTime) + "ms");
    }
}