<dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-core_2.10</artifactId> <version>1.2.0</version> </dependency>

package com.spark; import java.util.Arrays; import java.util.regex.Pattern; import org.apache.spark.SparkConf; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.function.FlatMapFunction; public class WordCount { private static final Pattern SPACE = Pattern.compile(" "); public static void main(String[] args) { SparkConf conf = new SparkConf().setAppName("JavaWordCount"); JavaSparkContext ctx = new JavaSparkContext(conf); String filePath = "D:/systemInfo.log"; JavaRDD<String> lines = ctx.textFile(filePath, 1); JavaRDD<String> words = lines .flatMap(new FlatMapFunction<String, String>() { @Override public Iterable<String> call(String s) { return Arrays.asList(SPACE.split(s)); } }); System.out.println("wordCount:" + words.count()); } }

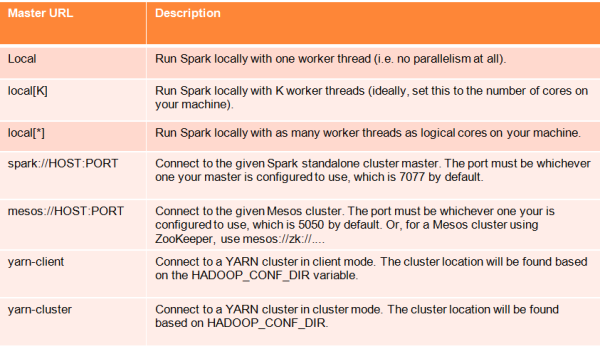

package com.spark; import java.io.BufferedReader; import java.io.InputStreamReader; import java.util.Arrays; import java.util.regex.Pattern; import org.apache.spark.SparkConf; import org.apache.spark.api.java.JavaRDD; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.api.java.function.FlatMapFunction; public final class JavaWordCount { private static final Pattern SPACE = Pattern.compile(" "); public static void main(String[] args) throws Exception { SparkConf conf = new SparkConf().setMaster("local").setAppName( "JavaWordCount"); JavaSparkContext ctx = new JavaSparkContext(conf); String filePath = ""; BufferedReader reader = new BufferedReader(new InputStreamReader( System.in)); System.out.println("Enter FilePath:"); System.out.println("e.g. D:/systemInfo.log"); while (true) { System.out.println("> "); filePath = reader.readLine(); if (filePath.equalsIgnoreCase("exit")) { ctx.stop(); } else { JavaRDD<String> lines = ctx.textFile(filePath, 1); JavaRDD<String> words = lines .flatMap(new FlatMapFunction<String, String>() { @Override public Iterable<String> call(String s) { return Arrays.asList(SPACE.split(s)); } }); System.out.println("wordCount:" + words.count()); } } } } Enter FilePath: e.g. D:/systemInfo.log > D:/systemInfo.log wordCount:48050 >