OPV2V

What is OPV2V?

- Aggregated sensor data from multiple connected automated vehicles
- 73 scenes, 6 road types, 9 cities
- 12K frames of LiDAR point clouds and RGB camera images, with 230K annotated 3D bounding boxes
- A comprehensive benchmark with 4 LiDAR detectors and 4 different fusion strategies (16 models in total)
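
The model count is the full cross product of detectors and fusion strategies. A minimal sketch of that grid follows; this page gives only the counts, so the detector and fusion labels are hypothetical placeholders rather than the benchmark's actual names.

```python
from itertools import product

# Placeholder labels: the page states only the counts (4 detectors,
# 4 fusion strategies), so these names are hypothetical stand-ins.
detectors = ["detector_a", "detector_b", "detector_c", "detector_d"]
fusion_strategies = ["fusion_1", "fusion_2", "fusion_3", "fusion_4"]

# Every detector is paired with every fusion strategy.
models = [f"{d}+{f}" for d, f in product(detectors, fusion_strategies)]

print(len(models))  # 16
```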

Diverse Scenes

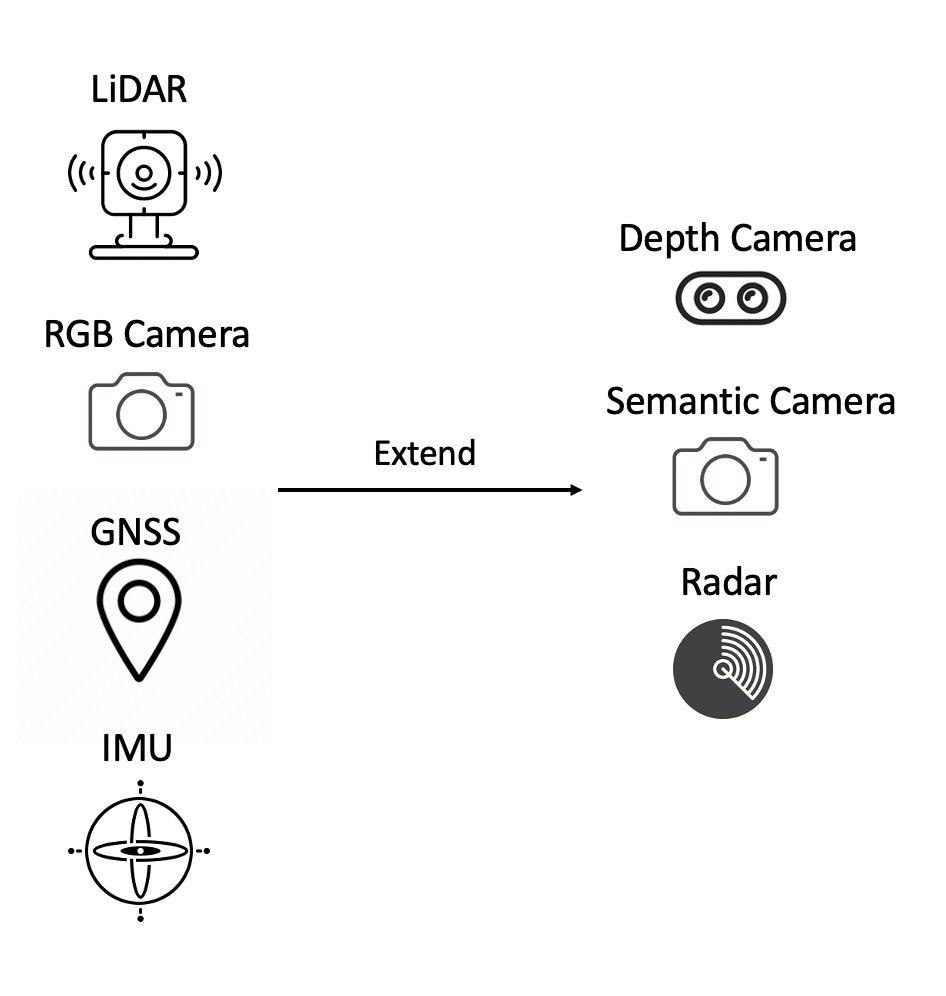

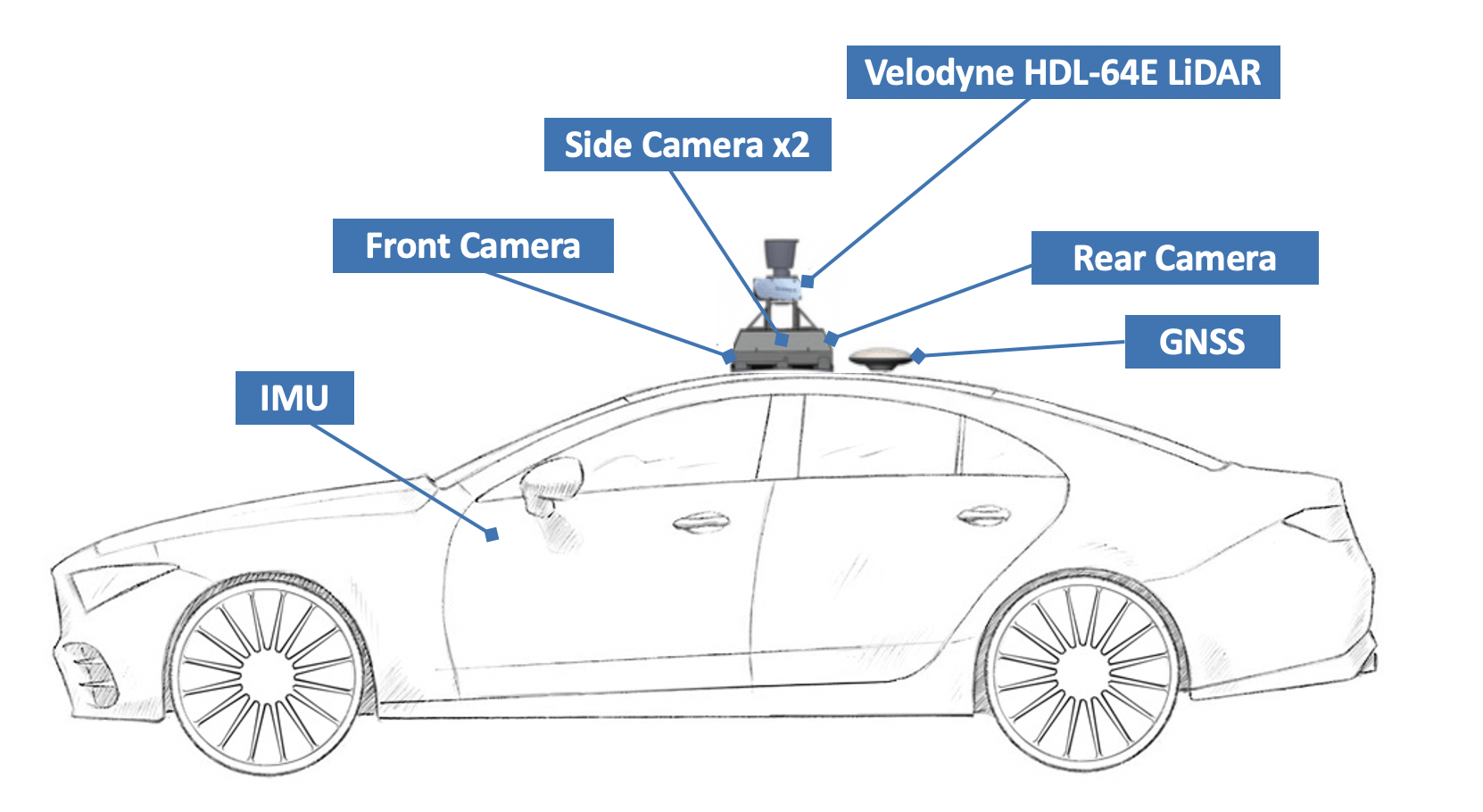

Sensor Suites

- LiDAR: 120 m detection range, 1,300,000 points per second, 26.8° vertical FOV
- 4× camera: 110° FOV, 800×600 resolution
- GNSS: 0.2 m error
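
For readers who want these specifications in machine-readable form, the listing above could be captured as a small configuration object. This is only a sketch: the class and field names are assumptions made for illustration, not part of any OPV2V tooling, and the LiDAR rate is read as 1.3 million points per second.

```python
from dataclasses import dataclass


# All class and field names below are hypothetical, chosen for illustration.
@dataclass(frozen=True)
class LidarSpec:
    range_m: float
    points_per_second: int
    vertical_fov_deg: float


@dataclass(frozen=True)
class CameraSpec:
    count: int
    fov_deg: float
    width_px: int
    height_px: int


@dataclass(frozen=True)
class SensorSuite:
    lidar: LidarSpec
    camera: CameraSpec
    gnss_error_m: float


# Values taken from the sensor list; the LiDAR rate is assumed to be 1.3 million.
suite = SensorSuite(
    lidar=LidarSpec(range_m=120.0, points_per_second=1_300_000, vertical_fov_deg=26.8),
    camera=CameraSpec(count=4, fov_deg=110.0, width_px=800, height_px=600),
    gnss_error_m=0.2,
)

# Total camera pixels captured per vehicle per frame across all four cameras.
pixels_per_vehicle = suite.camera.count * suite.camera.width_px * suite.camera.height_px
print(pixels_per_vehicle)  # 1920000
```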

Extensible and Reproducible