With generative AI spreading rapidly and demand for on-device AI growing, Qualcomm, a leading global chip company and a driver of AI technology, recently released a new white paper, "Unlocking on-device generative AI with an NPU and heterogeneous computing". The paper analyzes Qualcomm's NPU technology in depth and discusses how combining the NPU with heterogeneous computing enables a variety of generative AI use cases on the device.

Many of these concepts and terms are probably unfamiliar to everyday consumers. Drawing on material I have collected and on information from Qualcomm, let me briefly explain how Qualcomm is advancing on-device AI through new technologies.

To understand why this matters, we first need to answer the following questions:

What are NPUs and heterogeneous computing?

Why are NPUs and heterogeneous computing better suited to generative AI?

What are the advantages of Qualcomm's on-device AI?

What industry use cases are available?

First, the NPU. An NPU, also called a neural network processor, is designed specifically to accelerate deep learning tasks and machine learning algorithms. Deep learning is a branch of AI that occupies a core position in machine learning; it is used in neural network scenarios such as image recognition, speech recognition, and natural language processing. An NPU can significantly accelerate both inference and training for deep learning models. It also has a higher energy efficiency ratio, which makes it better suited to edge computing devices and mobile terminals.

Heterogeneous computing means using different types of processors or computing units within one system to complete computing tasks. These units include the CPU, the GPU, and accelerators such as the NPU. By combining the strengths of different processors, it aims to improve the system's overall performance, efficiency, and ability to adapt to complex computing scenarios, while working around the power consumption and heat dissipation limits of any single architecture.

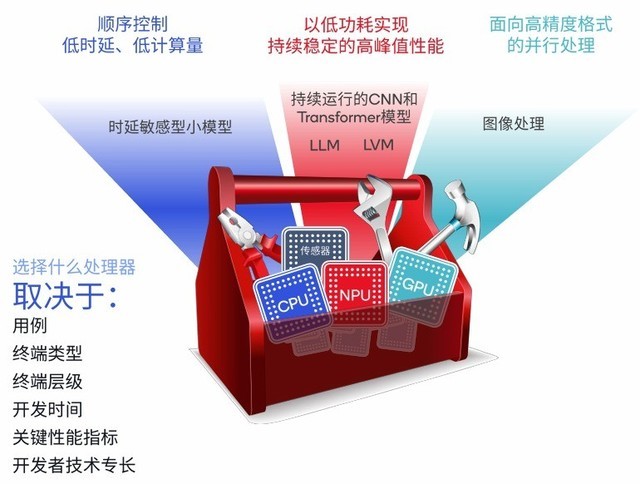

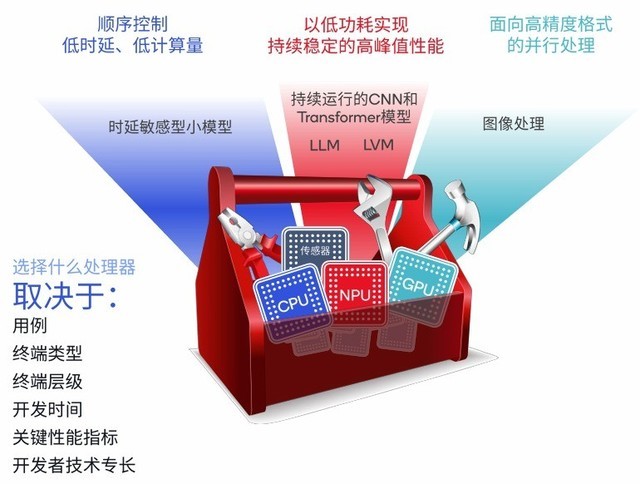

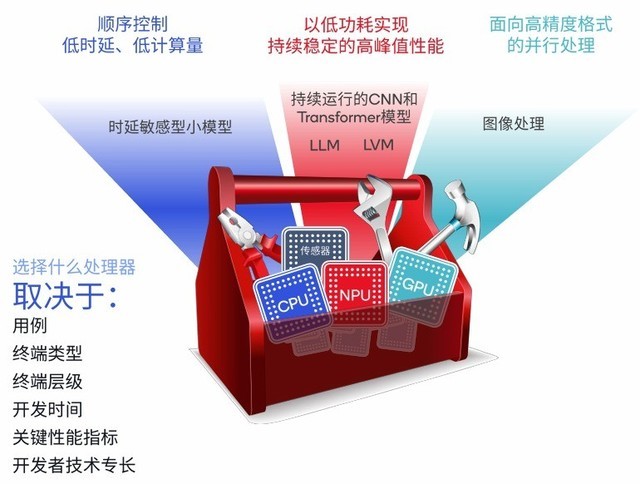

In short, heterogeneous computing assigns each type of computing task within a generative AI workload to the most suitable computing unit. Across the wide range of generative AI use cases, the CPU suits scenarios that require low latency, or relatively small traditional models such as convolutional neural networks (CNNs) and certain large language models (LLMs). The GPU is good at parallel processing in high-precision formats, for example image and video processing with very demanding image quality requirements. In sustained use cases, which need stable, high peak performance at low power, the NPU shows its greatest advantage.
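The allocation rule described above can be sketched as a small routing function. This is only an illustrative sketch: the `Workload` fields, the `pick_processor` name, the priority order of the checks, and the default branch are all assumptions made for this example, not part of any Qualcomm API.

```python
from dataclasses import dataclass
from enum import Enum


class Processor(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


@dataclass
class Workload:
    # All fields are hypothetical labels used only for this sketch.
    name: str
    latency_critical: bool = False  # needs a fast one-off response
    small_model: bool = False       # e.g. a compact CNN
    high_precision: bool = False    # e.g. high-quality image or video processing
    sustained: bool = False         # runs continuously under a power budget


def pick_processor(w: Workload) -> Processor:
    """Toy routing rule mirroring the three cases in the paragraph above."""
    if w.sustained:
        return Processor.NPU
    if w.high_precision:
        return Processor.GPU
    if w.latency_critical or w.small_model:
        return Processor.CPU
    # Assumption: anything unclassified goes to the dedicated AI processor.
    return Processor.NPU


if __name__ == "__main__":
    for w in (
        Workload("always-on assistant", sustained=True),
        Workload("photo upscaling", high_precision=True),
        Workload("small CNN classifier", small_model=True),
    ):
        print(f"{w.name} -> {pick_processor(w).value}")
```

A real scheduler would also weigh current load, thermal state, and supported data formats; the sketch only captures the coarse division of labor.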

Are NPUs and heterogeneous computing really better suited to generative AI?

The answer is yes. Generative AI involves large amounts of data processing and complex computation, especially the training and inference of deep learning models.

These tasks demand very high computing resources, and traditional CPUs or GPUs alone may not meet the performance requirements. The NPU is designed specifically for neural network computation: it is modeled on the way human neurons and synapses work and is optimized for the many neural network models involved in AI workloads, which improves processing efficiency and reduces energy consumption.

Second, heterogeneous computing further increases the computing power available to generative AI. It integrates computing units with different architectures (CPU, GPU, NPU, and so on) and lets them work in parallel, so that each can play to its strengths.

In addition, as generative AI applications keep expanding and growing more complex, demand for computing power continues to rise, and the combination of the NPU and heterogeneous computing is better placed to meet it. Take Qualcomm as an example: its CPU, GPU, and other computing units are updated every year, and each generation brings a substantial performance upgrade, which pushes the development and adoption of generative AI further.

Next, let's look at the advantages of Qualcomm's on-device AI.

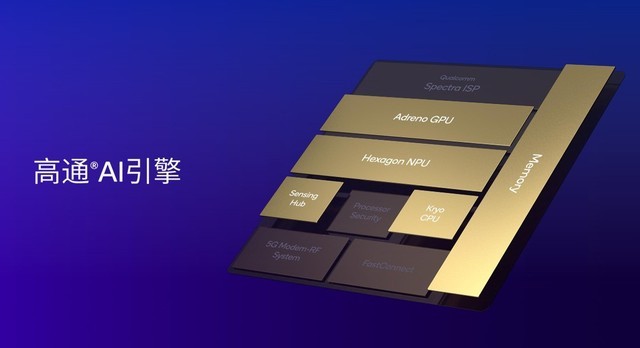

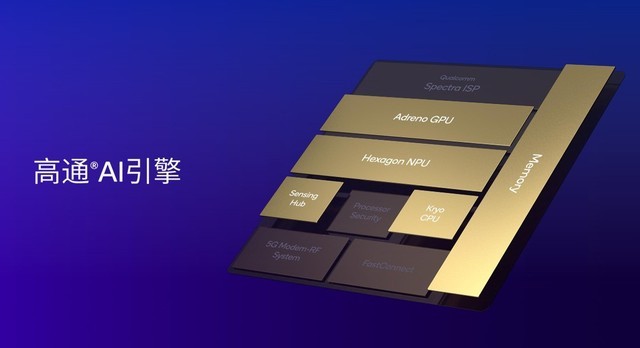

First, the Qualcomm AI Engine has been designed for heterogeneous computing from the start. It includes the CPU, GPU, NPU (neural network processor), Qualcomm Sensing Hub, and other processor components, so the most suitable processor can be chosen flexibly for each AI task. In addition, because the NPU is a dedicated AI processor, it can keep working when the CPU and GPU are heavily loaded, which keeps the AI experience complete and uninterrupted.

Qualcomm NPUs also deliver industry-leading performance per watt, for example when running Stable Diffusion and other diffusion models. Other key cores in the Qualcomm AI Engine, such as the Adreno GPU, offer industry-leading performance per watt as well.

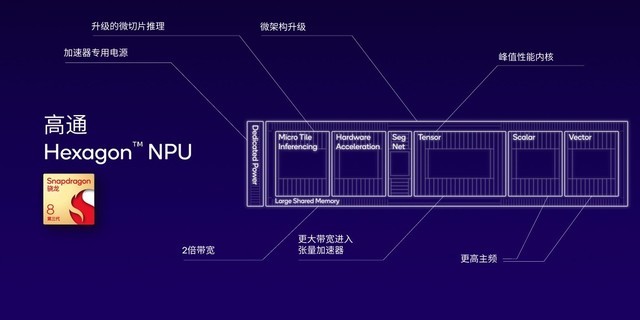

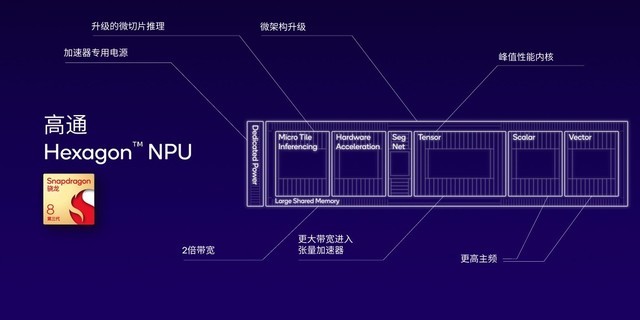

In terms of performance, the current Hexagon NPU can run models with up to 10 billion parameters on the device. Both time to first token and tokens generated per second are at industry-leading levels. The Hexagon NPU also introduces micro-tile inferencing and adds large shared memory that supports all engine components, giving it leading LLM processing capability.

In addition, the Qualcomm Sensing Hub can run always-on use cases at extremely low power. With low-power AI capability built into this subsystem of the chip, the device can gather a large amount of contextual information locally and use it to deliver a personalized AI experience. This is one of the unique advantages of on-device AI: personal information stays on the device and is not available to the cloud.

As for memory, Qualcomm products such as Snapdragon 8 Gen 3 support industry-leading LPDDR5X memory at frequencies of up to 4.8GHz. The resulting fast memory reads let large language models such as Baichuan and Llama 2 run on the chip with a very fast token generation rate.
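To see why memory speed matters for token generation, a common rule of thumb is that generating each token requires reading roughly all of the model's weights from memory once, so memory bandwidth divided by the weight footprint gives a rough upper bound on tokens per second. The sketch below applies that rule. The function names are made up for this example, and the 70 GB/s bandwidth and 4-bit, 7-billion-parameter model are hypothetical inputs chosen for easy arithmetic, not published Snapdragon or model specifications.

```python
def model_size_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def max_tokens_per_second(
    bandwidth_gb_s: float, params_billion: float, bits_per_weight: int
) -> float:
    """Bandwidth-bound ceiling: one full pass over the weights per token.

    Ignores caches, KV-cache traffic, and compute limits, so real rates
    are lower than this estimate.
    """
    return bandwidth_gb_s / model_size_gb(params_billion, bits_per_weight)


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    size = model_size_gb(params_billion=7, bits_per_weight=4)
    rate = max_tokens_per_second(70, params_billion=7, bits_per_weight=4)
    print(f"weights: {size} GB, ceiling: {rate} tokens/s")
```

Under these assumed numbers the weights occupy 3.5 GB and the ceiling is 20 tokens per second; doubling the bandwidth or halving the bits per weight doubles the ceiling, which is why faster memory and quantization both help on-device LLMs.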

Next, let's look at some specific use cases.

The first is an AI travel assistant. The user asks the model directly to plan a trip; the assistant immediately suggests flight itineraries, adjusts them through voice conversation with the user, and finally creates a complete flight schedule through the Skyscanner plug-in.

The second is a voice assistant: a generative AI virtual avatar assistant that runs on the device and interacts with users by voice. Different models convert between speech and text, and the final output is speech. Blendshape animation is used to match the speech to the avatar's mouth movements, so that voice and lips stay in sync. The avatar is then rendered with Unreal Engine MetaHuman, and this rendering runs on the Adreno GPU.
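The voice assistant flow can be pictured as a chain of stages. The sketch below is a deliberately simplified stand-in: every stage is a stub that only labels its input, the stage names are invented for this example, and the chain is strictly linear (in a real system the speech audio and the animation data would travel separately). Only the final stage's processor, the Adreno GPU, is stated in the article; the others are marked "unspecified" rather than guessed.

```python
from typing import Callable, List, Tuple

# (stage name, processor, stage function)
Stage = Tuple[str, str, Callable[[str], str]]


def make_stub(label: str) -> Callable[[str], str]:
    """Return a stand-in stage that just wraps its input with a label."""
    def stage(data: str) -> str:
        return f"{label}({data})"
    return stage


# Hypothetical stage names; processors other than the GPU are not given
# in the article, so they are left unspecified here.
STAGES: List[Stage] = [
    ("speech_to_text", "unspecified", make_stub("text")),
    ("generate_reply", "unspecified", make_stub("reply")),
    ("text_to_speech", "unspecified", make_stub("speech")),
    ("blendshape_lip_sync", "unspecified", make_stub("blendshapes")),
    ("render_avatar", "Adreno GPU", make_stub("frame")),
]


def run_pipeline(stages: List[Stage], user_audio: str) -> Tuple[str, List[str]]:
    """Pass the input through each stage in order and record where it ran."""
    data = user_audio
    trace = []
    for name, processor, fn in stages:
        data = fn(data)
        trace.append(f"{name} on {processor}")
    return data, trace


if __name__ == "__main__":
    result, trace = run_pipeline(STAGES, "hello.wav")
    print(result)
    print("\n".join(trace))
```

Keeping the processor as data next to each stage makes it easy to see how a heterogeneous design could move one stage to a different unit without touching the others.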

Finally:

Whenever I talk about Qualcomm's progress in on-device AI, one thing cannot be ignored: scale. In smartphones, it is well known that Qualcomm has led SoC market share for years. In other scenarios such as consumer electronics, the industrial Internet of Things, and cloud computing, billions of devices also carry Snapdragon chips or use Qualcomm services. This creates a huge ecosystem for the broad adoption and deeper development of on-device AI.

Qualcomm is also committed to more than innovative hardware design. It continues to invest in full-stack AI research, including algorithm optimization, software development kit (SDK) upgrades, and developer support, so that its AI solutions run efficiently on all kinds of devices and on-device AI models keep getting smaller, faster to respond, and more power-efficient. Through this combined software and hardware strategy, Qualcomm has pushed on-device AI toward commercialization and large-scale deployment, and has helped move the whole industry forward.

This is an original article. If you reproduce it, please credit the source: Interpretation of Qualcomm's AI technology white paper: how the NPU and heterogeneous computing open a new era of on-device AI https://mobile.zol.com.cn/860/8601967.html
