You are welcome to join our discussion group to ask questions, make suggestions, and exchange ideas: QQ group 975907202.

Supported models:

- bert
- roberta
- roberta-large
- gpt2
- t5
- Huawei NEZHA
- BART (Chinese)

Supported tasks:

`seq2seq`: sequence-to-sequence generation, such as writing poems, composing couplets, automatic title generation, and automatic summarization.

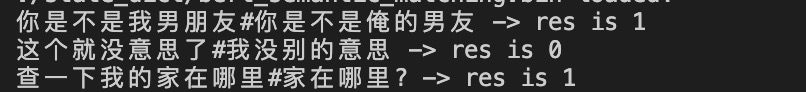
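
One common way to do seq2seq with a BERT-style encoder is UniLM-style attention masking: source tokens attend to each other freely, while each target token attends to the whole source and only to earlier target tokens. The text above does not say how this repository builds its mask, so the sketch below is an assumption. It derives the mask from segment ids (0 = source, 1 = target, with target tokens placed after the source) using plain Python lists; `unilm_attention_mask` is a hypothetical name.

```python
from itertools import accumulate


def unilm_attention_mask(token_type_ids):
    """Hypothetical helper: build a UniLM-style seq2seq attention mask.

    token_type_ids: 0 for source tokens, 1 for target tokens (targets after sources).
    Returns mask where mask[i][j] == 1 means position i may attend to position j.
    """
    # Cumulative sum: every source position gets 0, and each target position
    # gets a strictly larger value than the one before it.
    idxs = list(accumulate(token_type_ids))
    n = len(idxs)
    # Position i sees position j only if j's value is not "later" than i's.
    return [[1 if idxs[j] <= idxs[i] else 0 for j in range(n)] for i in range(n)]


# Usage: 3 source tokens followed by 2 target tokens.
mask = unilm_attention_mask([0, 0, 0, 1, 1])
for row in mask:
    print(row)
```

Source rows see only the source (bidirectional), and target rows form a causal (lower-triangular) pattern over the target while still seeing every source token.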

`cls_classifier`: classification based on the [CLS] vector extracted at the start of the sentence, e.g. sentiment analysis, text classification, and semantic matching.
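
The idea of classifying from the [CLS] vector can be illustrated with toy numbers: take the encoder output at position 0, apply a linear layer, and normalize with softmax. This is a minimal sketch in plain Python, not this repository's implementation; the `cls_classify` helper and the toy weights are hypothetical, and it omits any pooler layer a real model may apply before the classifier.

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cls_classify(hidden_states, weight, bias):
    """Hypothetical helper.

    hidden_states: one vector per token from the encoder, [CLS] first.
    weight: num_labels x hidden_size matrix; bias: one value per label.
    """
    cls_vec = hidden_states[0]  # the [CLS] token sits at position 0
    logits = [sum(w * h for w, h in zip(row, cls_vec)) + b
              for row, b in zip(weight, bias)]
    return softmax(logits)


# Toy example: 3 tokens, hidden size 2, 2 labels (e.g. negative / positive).
hidden_states = [[1.0, -1.0], [0.3, 0.2], [0.5, 0.5]]
weight = [[0.0, 1.0], [1.0, 0.0]]
bias = [0.0, 0.0]
probs = cls_classify(hidden_states, weight, bias)
predicted = max(range(len(probs)), key=probs.__getitem__)
print(probs, predicted)
```

Only the first token's vector reaches the classifier; the other token vectors are ignored, which is why the [CLS] representation must summarize the whole sentence.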

`sequence_labeling`: sequence labeling tasks such as named entity recognition, part-of-speech tagging, and Chinese word segmentation.
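
A sequence labeling model outputs one tag per token, and for named entity recognition those tags are then grouped into entity spans. The sketch below assumes the common BIO tagging scheme (`B-` begins an entity, `I-` continues it, `O` is outside); the text above does not state which scheme the examples use, and `bio_to_spans` is a hypothetical helper.

```python
def bio_to_spans(tokens, tags):
    """Hypothetical helper: group BIO tags into (entity_type, text) spans.

    An I- tag whose type does not match the open entity is treated as outside.
    """
    spans = []
    cur_type, cur_tokens = None, []
    for tok, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity starts; close any entity that was open.
            if cur_type:
                spans.append((cur_type, "".join(cur_tokens)))
            cur_type, cur_tokens = tag[2:], [tok]
        elif tag.startswith("I-") and cur_type == tag[2:]:
            # Continue the currently open entity.
            cur_tokens.append(tok)
        else:
            # "O" (or an inconsistent I- tag) closes the open entity.
            if cur_type:
                spans.append((cur_type, "".join(cur_tokens)))
            cur_type, cur_tokens = None, []
    if cur_type:
        spans.append((cur_type, "".join(cur_tokens)))
    return spans


# Character-level Chinese input, one tag per character.
tokens = list("张三在北京工作")
tags = ["B-PER", "I-PER", "O", "B-LOC", "I-LOC", "O", "O"]
print(bio_to_spans(tokens, tags))
```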

`sequence_labeling_crf`: sequence labeling with an added CRF loss, for better results.
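
Besides the CRF loss used in training, a linear-chain CRF is typically decoded with the Viterbi algorithm, which picks the tag sequence maximizing the sum of per-token emission scores and tag-to-tag transition scores. The text above only mentions the loss, so how this repository decodes is an assumption. The sketch is a minimal plain-Python Viterbi; it omits start/end transition scores that some CRF implementations add, and `viterbi_decode` is a hypothetical name.

```python
def viterbi_decode(emissions, transitions):
    """Hypothetical helper: highest-scoring tag path of a linear-chain CRF.

    emissions[t][k]: score of tag k at step t (from the encoder).
    transitions[i][j]: score of moving from tag i to tag j (learned by the CRF).
    """
    num_tags = len(transitions)
    score = list(emissions[0])  # best score of any path ending in each tag
    backpointers = []
    for emit in emissions[1:]:
        new_score, ptrs = [], []
        for j in range(num_tags):
            # Best previous tag i for arriving at tag j.
            best_i = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emit[j])
            ptrs.append(best_i)
        score = new_score
        backpointers.append(ptrs)
    # Trace back from the best final tag.
    path = [max(range(num_tags), key=lambda k: score[k])]
    for ptrs in reversed(backpointers):
        path.append(ptrs[path[-1]])
    path.reverse()
    return path


emissions = [[1.0, 0.0], [0.0, 0.5]]
# A large negative transition effectively forbids tag 0 -> tag 1.
transitions = [[0.0, -10.0], [0.0, 0.0]]
print(viterbi_decode(emissions, transitions))
```

Picking the best tag per token independently would give `[0, 1]` here, but the transition penalty makes `[0, 0]` the best overall path; this is the kind of consistency between neighboring tags that the CRF layer contributes.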

`relation_extract`: relation extraction, such as triple extraction. (This is not exactly the same as Su Jianlin's example.)

`simbert`: the SimBERT model, which generates similar sentences and scores the similarity between sentences.
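
Similarity between sentences is usually scored as the cosine similarity of their sentence vectors. Which vector this repository uses (for example the [CLS] output) is not stated above, so the sketch below works on already-computed vectors and is only an illustration; `cosine_similarity` and `most_similar` are hypothetical helpers.

```python
import math


def cosine_similarity(u, v):
    # Dot product divided by the product of the vector lengths.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(query_vec, candidate_vecs):
    """Hypothetical helper: return (index, score) of the closest candidate."""
    scores = [cosine_similarity(query_vec, c) for c in candidate_vecs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


# Toy 2-d sentence vectors; real ones would come from the encoder.
query = [1.0, 0.0]
candidates = [[0.0, 1.0], [3.0, 0.1]]
print(most_similar(query, candidates))
```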

`multi_label_cls`: multi-label classification.
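
Unlike single-label classification, multi-label classification lets each label be on or off independently. A standard formulation, assumed here rather than taken from this repository, gives every label its own sigmoid probability, trains with binary cross-entropy, and keeps every label above a threshold at prediction time. The helper names and the 0.5 threshold are hypothetical.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def binary_cross_entropy(logits, targets):
    """Mean BCE over labels; targets are 0/1 per label."""
    total = 0.0
    for z, y in zip(logits, targets):
        p = sigmoid(z)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(logits)


def predict_labels(logits, label_names, threshold=0.5):
    """Keep every label whose independent probability exceeds the threshold."""
    return [name for name, z in zip(label_names, logits) if sigmoid(z) > threshold]


labels = ["sports", "politics", "finance"]
print(predict_labels([2.0, -1.0, 0.3], labels))
```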

Pre-trained weights and vocabulary files:

roberta: download the model weights and vocabulary from https://drive.google.com/file/d/1iNeYFhCBJWeUsIlnW_2K6SMwXkM4gLb_/view. For details, see the GitHub repository https://github.com/ymcui/Chinese-BERT-wwm, from which the roberta-large model can also be downloaded.

bert (large is not currently supported): download the Chinese pre-trained weights "bert-base-chinese" from https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese-pytorch_model.bin and the Chinese vocabulary from https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-chinese-vocab.txt.

NEZHA (currently only base is supported): the NEZHA-base weights and vocabulary can be downloaded from https://pan.baidu.com/s/1Z0SJbISsKzAgs0lT9hFyZQ (extraction code: 4awe).

gpt2: see the gpt_test file in the test folder for a text-continuation test. The Chinese general-purpose gpt2 model and vocabulary can be downloaded from https://pan.baidu.com/s/1vTYc8fJUmlQrre5p0JRelw (password: f5un).

For the English gpt2 model, use the pre-trained model at https://huggingface.co/pranavpsv/gpt2-genre-story-generator; see gpt2_english_story_train.py in the examples folder for the training code.

t5: both English and Chinese are supported, and the model can be loaded directly with the transformers package. See the related examples in the examples folder for details. Pre-trained weights: https://github.com/renmada/t5-pegasus-pytorch

SimBERT supports similar-sentence generation; bert, roberta, or nezha can serve as the underlying pre-trained model.

bart: the Chinese BART model can be downloaded from https://huggingface.co/fnlp/bart-base-chinese

Usage:

1. Install this framework: `pip install bert-seq2seq`
2. Install PyTorch.
3. Install tqdm to display progress bars: `pip install tqdm`
4. Prepare your own data: modify the `read_data` function in the example code to construct the inputs and outputs, then start training.
5. Run the `*_train.py` file under the examples folder that matches your task; each task has its own train script. Adjust the input and output data structures as needed, then train. See the examples folder for more.
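
The shape of `read_data` differs between example scripts, so the version below is only a hypothetical sketch for a seq2seq task. It assumes a UTF-8 file with one example per line, source and target separated by a tab, and returns two parallel lists; the file format, the return values, and the sample data are assumptions, not the format the bundled examples actually use.

```python
import os
import tempfile


def read_data(path):
    """Hypothetical reader: one example per line, 'source<TAB>target'."""
    sents_src, sents_tgt = [], []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.rstrip("\n")
            if not line:
                continue  # skip blank lines
            src, tgt = line.split("\t", 1)
            sents_src.append(src)
            sents_tgt.append(tgt)
    return sents_src, sents_tgt


# Usage: write a tiny couplet-style sample file and read it back.
with tempfile.NamedTemporaryFile("w", encoding="utf-8", suffix=".tsv", delete=False) as f:
    f.write("床前明月光\t疑是地上霜\n")
    f.write("\n")
    f.write("举头望明月\t低头思故乡\n")
    sample_path = f.name

src, tgt = read_data(sample_path)
os.remove(sample_path)
print(src, tgt)
```

Keeping sources and targets as two aligned lists makes it straightforward to index both by the same example id when building training batches.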